1 Introduction

These days, universities and knowledge centers offer a wide range of public engagement activities like museum exhibitions, nature walks and science festivals. An important aim of these activities is to increase visitors’ interest in research, which may develop into a long-term intrinsic motivation to engage in science [Allen et al., 2008; Bell et al., 2018; Salmi & Thuneberg, 2019]. Given the considerable effort and attention devoted to such science communication activities, it is important to understand their effectiveness. Impact evaluations help to gain more insight into whether and how activities contribute to the public’s understanding and awareness of science [Watermeyer & Lewis, 2015].

Despite the increased recognition of science communication activities, however, robust measurements of impact remain scarce, leaving a lack of empirical knowledge about the crucial elements that drive science communication impact [Jensen, 2014; Jensen & Buckley, 2014]. With this research, we aimed to contribute to the field by identifying active ingredients of a science festival. By active ingredients, we refer to elements that are responsible for creating a desired impact on visitors. The term is borrowed from medical and pharmaceutical science, where it denotes the critical component of an intervention that is responsible for producing the desired change in outcomes [e.g., fluoride in toothpaste; Li & Julian, 2012].

1.1 Objective

The academic literature offers various toolkits that help researchers and practitioners set up and improve their science communication activities [e.g., American Association for the Advancement of Science, n.d.; Bell et al., 2018; Imperial College London, n.d.]. These resources are valuable for understanding the process of science learning, but they are also extensive and scattered, which makes it difficult to identify the core issues and to develop concise and efficient assessment tools that capture key variables [Bell et al., 2018; Fransman, 2018]. This problem can be addressed by integrating insights from different frameworks and extracting broader underlying mechanisms [Thompson, 2004].

Our research aimed to address this problem by conducting a quantitative survey study at a science festival that measured a wide set of variables known to enhance science communication impact (i.e., predictive processes), and extracting their underlying factors (i.e., active ingredients). Data reduction was achieved using exploratory factor analysis, a statistical method commonly used in psychology, biology, and other empirical sciences to condense data of large numbers of variables into a smaller number of factors [Thompson, 2004]. Data reduction is theoretically valuable, as it creates structure in the data and helps to generate and refine theory [Williams, Onsman & Brown, 2010]. It also has practical benefits, as it helps to identify and remove overlap between variables, resulting in a limited set of variables that can be measured and analyzed more easily [Thompson, 2004]. A further aim of this research was to assess the power of the active ingredients for predicting impact. Therefore, we also measured a wide set of outcomes in our survey and used factor analysis to extract their respective underlying factors (i.e., impacts). We conducted regression analyses to evaluate and compare the extent to which the active ingredients predicted the impacts.

Data were collected at a science festival (i.e., Betweter Festival in The Netherlands, more information follows in the Method section). Science festivals include a variety of activities like academic lectures, live experiments, debate and dialogue events, characterizing the mix of goals and methods of the contemporary landscape of science communication [Jensen & Buckley, 2014]. Below, we discuss the theoretical basis for the development of the survey.

1.2 Predictive processes

The overarching goals of the Betweter Festival are to increase visitors’ interest in, and curiosity about scientific research, which are common goals for science communication events [e.g., Bell et al., 2018; Salmi & Thuneberg, 2019]. Given these goals, we based the survey on theories defining psychological processes that stimulate human motivation and interest in academic learning. We also incorporated insights from several toolkits on effective science communication. Processes and elements commonly cited in these theories and frameworks as predictors of motivation and academic learning served as the basis for the survey items.

A central theme in the consulted theories and frameworks is that learning environments should be stimulating and challenging, but also clear and easy to follow, allowing visitors to progress towards mastery of skills and knowledge [Csíkszentmihályi, 1990; Deci & Ryan, 1985, 2012; Jones, 2009]. Another recurring theme is the importance of empowerment, which can be promoted by allowing visitors to actively participate, form opinions and experiences, and engage in dialogue and reflection [Bell et al., 2018; Deci & Ryan, 1985, 2012; Jones, 2009]. There is also broad consensus that fostering social contact among visitors, and between visitors and scientists, raises intrinsic motivation and involvement [Bell et al., 2018; Deci & Ryan, 1985, 2012; Falk & Dierking, 2004]. Furthermore, learning activities should align with learners’ personal experience, interests, and self-concept, and help them achieve their private goals [Falk & Dierking, 2004; Jones, 2009]. Finally, the literature suggests that eliciting emotion is a good strategy to spark motivation and learning [e.g., creating suspense, humor, touching people’s hearts; Bell et al., 2018; Falk & Dierking, 2004; Imperial College London, n.d.; Oliver & Raney, 2011]. Therefore, we incorporated all these predictors in our survey.

1.3 Outcomes

We use the term impact (or impacts) to refer to the various outcomes that visiting a science festival may have for visitors. We selected outcomes that are commonly mentioned in academic studies and frameworks on science communication. The first two outcomes we included were increased knowledge and more understanding [e.g., Allen et al., 2008; Burns, O’Connor & Stocklmayer, 2003]. Knowledge usually refers to proficiency in a specific topic or theme (e.g., the Big Bang Theory), while understanding usually refers to the general process of scientific research and reasoning. A third outcome we incorporated was increased engagement, which refers to the sense that science is personally significant and interesting [Allen et al., 2008; Sánchez-Mora, 2016]. A fourth outcome was intellectual stimulation, referring to increased curiosity and excitement to learn more about science [Sánchez-Mora, 2016; National Research Council, 2009]. A fifth outcome was reduced distance, which denotes feeling closer to science and scientists [Sánchez-Mora, 2016; National Research Council, 2009].

1.4 Overview of the research

To recap, our objective was to collect data on predictive processes and outcomes among festival visitors, and to distill underlying active ingredients and impacts. Furthermore, we aimed to analyze the relationships between active ingredients and impacts. By doing this, we hoped to get a deeper understanding of the mechanisms behind a successful science festival.

It is important to note that our research focused on self-reported processes and outcomes. Impact researchers have rightly pointed out that simply asking a person whether she has been affected by an activity is insufficient to establish true impact [Jensen, 2014]. Yet, we found self-reported change to be interesting and theoretically valuable in this case. The predictive processes refer to subjective experiences of pleasure, feeling at ease, feeling challenged, et cetera. As experiences and feelings are inherently perceptual in nature, self-report items are a valid assessment in this case [Chan, 2009].

Furthermore, we note that our research approach cannot establish causal links between active ingredients and impacts. The study should be considered as an exploratory analysis that may be supplemented in the future with the design of an experimental study that establishes the causal influence of the active ingredients on the impacts.

2 Method

2.1 Procedure

2.1.1 Setting

The case study examined was the Betweter Festival, a yearly arts and science festival organized by Utrecht University, The Netherlands. We collected survey data at the 2019 and 2021 editions (the 2020 edition was canceled due to the COVID-19 pandemic). Our research focused on the science programming, which included academic talks, dialogues with scientists, live experiments or demonstrations, and mixed contributions from academics and artists (e.g., a scientific talk set to music). The target audience of the festival consists of adults who, according to the website, “want to know how the world works, are not afraid of new experiences and like to be amazed”. Visitors buy a ticket to enter the festival, and once inside can self-select their individual pathways through the festival and encounter a wide range of science engagement activities on a ‘drop in’ basis. A schedule and brief descriptions of the activities are provided to visitors on the festival website and in a booklet handed to them at the entrance. Examples of activity titles include ‘Can quantum theory explain our consciousness?’, ‘Is there any future in poetry?’ and ‘How can we achieve sexual equality between men and women?’.

2.1.2 Data collection

Participants were recruited by interviewers who walked around the festival and invited visitors to participate in an academic survey about “their experience of the Betweter Festival”. Participants completed the survey on iPads the interviewers carried with them, or by scanning a QR code on their own device. After providing informed consent and providing demographic details, participants picked one science activity they had visited at the festival from a dropdown list (e.g., a lecture they had seen or an experiment they had participated in). They completed the survey items with this science activity in mind. This approach was chosen because the variety of festival activities would make it impossible to focus on all festival activities at the same time.

Interviewers were instructed to report irregularities (e.g., distracting interruptions, participants who seemed drunk) so that the affected data could be excluded from the analyses. However, no irregularities were reported. Visitors participated voluntarily and received no financial compensation. Filling out the survey took on average 7–10 minutes. The study is part of a broader research program of which the procedure was approved by the Ethics Review Board of the Faculty of Social and Behavioural Sciences of Utrecht University (registration number 21-453).

2.1.3 Strategy for statistical analysis

In the first step, we subjected the data from the predictive process items to an exploratory factor analysis to identify underlying active ingredients. In a second step, we subjected the data from the outcome items to an exploratory factor analysis to identify impacts. In both factor analyses, we used principal component analysis and oblimin rotation. In the third step, we used linear regression to assess the extent to which the active ingredients predicted the impacts, using the means of the active ingredients as predictors and the means of the impacts as criteria.
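To make the extraction step more concrete, the following is a minimal sketch of how a first principal component can be obtained from item responses. It is not the analysis pipeline used in this study: the response data are hypothetical, the function names `correlation_matrix` and `first_component` are illustrative, power iteration is swapped in as a simple way to find the dominant eigenvector, and the oblimin rotation is not implemented here.

```python
import math


def correlation_matrix(rows):
    """Pearson correlation matrix of the item columns in `rows`."""
    n, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    sds = [
        math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / (n - 1))
        for j in range(k)
    ]
    corr = [[0.0] * k for _ in range(k)]
    for a in range(k):
        for b in range(k):
            cov = sum((r[a] - means[a]) * (r[b] - means[b]) for r in rows) / (n - 1)
            corr[a][b] = cov / (sds[a] * sds[b])
    return corr


def first_component(corr, iterations=500):
    """Largest eigenvalue and unrotated loadings, found by power iteration."""
    k = len(corr)
    v = [1.0 / math.sqrt(k)] * k  # starting vector (an arbitrary choice)
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient of the unit-length vector gives the eigenvalue
    eigenvalue = sum(
        v[i] * sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)
    )
    # Loadings are the eigenvector scaled by the square root of the eigenvalue
    loadings = [x * math.sqrt(eigenvalue) for x in v]
    return eigenvalue, loadings


# Hypothetical 1-7 Likert responses: six respondents, three items
responses = [
    [6, 7, 6],
    [5, 5, 4],
    [7, 6, 7],
    [3, 4, 3],
    [4, 3, 5],
    [6, 6, 5],
]
eigenvalue, loadings = first_component(correlation_matrix(responses))
print(round(eigenvalue, 2), [round(x, 2) for x in loadings])
```

In practice, a dedicated statistics package would extract all components at once and apply the rotation; the sketch only shows where eigenvalues and loadings come from.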

2.1.4 Participants

A total of 456 festival visitors completed the survey, representing over 11 percent of the approximately 4000 visitors across the two festival years. Of the respondents, 262 (57.5%) identified as female, 182 (39.9%) as male, and 12 (2.6%) as neither. Their age ranged between 17 and 74 years. The sample was highly educated, with 64.3% university graduates and 21.3% higher professional education graduates.

As mentioned before, participants completed the survey while keeping in mind one festival activity they had participated in. Participants focused on an academic talk (n = 231, 50.7%), a dialogue with a scientist (n = 96, 21.1%), a combined science and art performance (n = 98, 21.5%), or a live experiment or demonstration (n = 31, 6.8%).

2.2 Measures

2.2.1 Predictive process items

In 2019, the measurement included 16 statements to which participants could indicate their agreement on Likert scales ranging from 1 (Strongly disagree) to 7 (Strongly agree). We needed a concise survey that captured all eight predictive processes. Since no validated survey was available for this purpose, we developed items based on theory and previous studies defining the predictive processes. Clarity: “The content was easy to follow” and “The content was clear” [Csíkszentmihályi, 1990; Deci & Ryan, 1985, 2012]; Active involvement: “I actively participated in the activity” and “I was invited to do something” [Deci & Ryan, 1985, 2012; Jones, 2009]; Intellectual challenge: “It challenged me intellectually” and “I got the best out of myself” [Csíkszentmihályi, 1990; Jones, 2009]; Emotional appeal: “It was funny” and “I was touched emotionally” [Falk & Dierking, 2004; Oliver & Raney, 2011]; Self-relevance: “I learned something about myself” and “It connected to my personal life” [Falk & Dierking, 2004; Jones, 2009]; Dialogue and Reflection: “It got me thinking” and “There was room for dialogue” [Deci & Ryan, 1985, 2012; Jones, 2009]; Social contact: “I had personal contact with other people” and “I shared the experience with others” [Deci & Ryan, 1985, 2012; Falk & Dierking, 2004]; Safety: “There was a friendly atmosphere” and “I felt at ease” [Csíkszentmihályi, 1990; Jones, 2009].

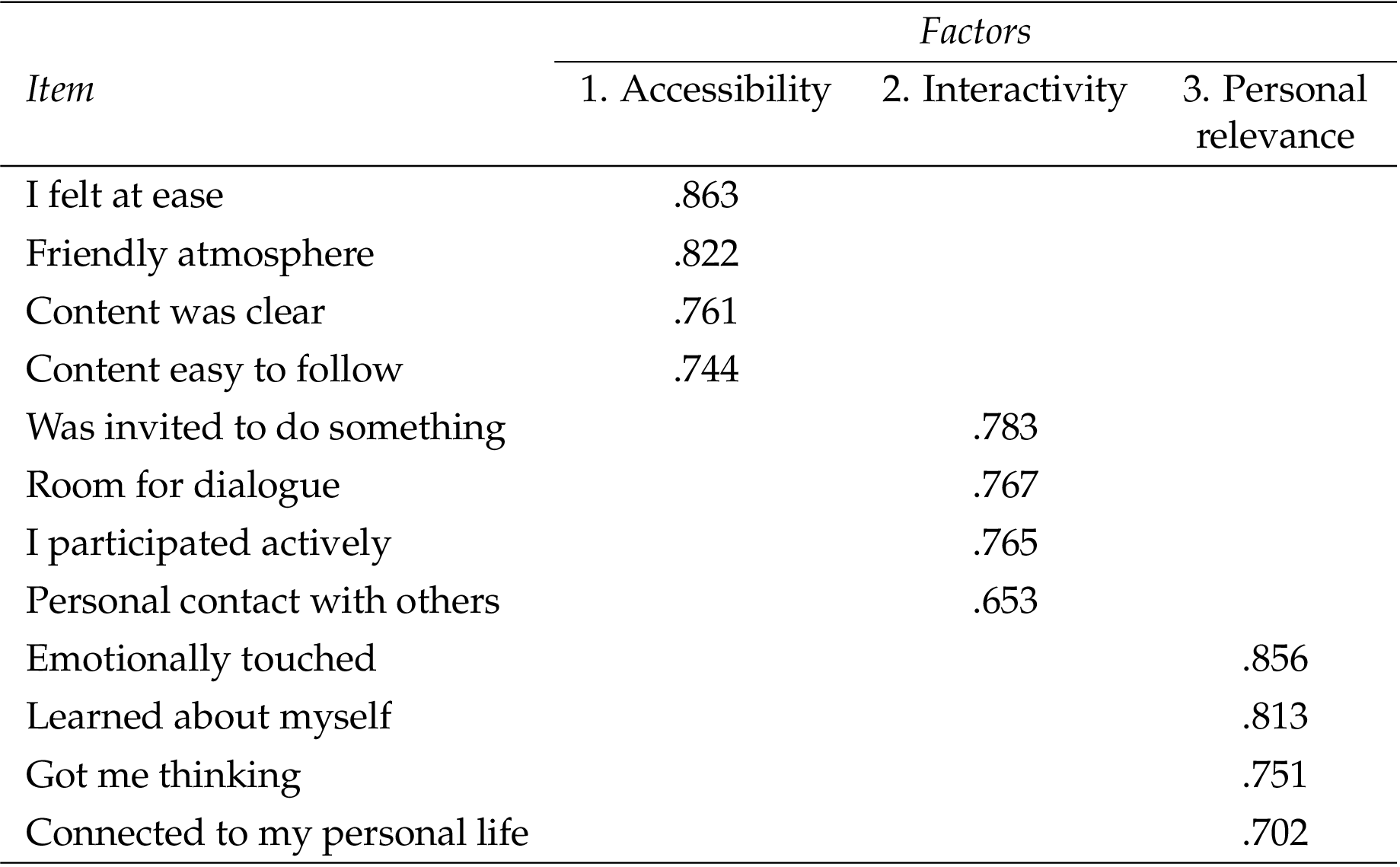

After the 2019 edition, we analyzed the data and identified three underlying active ingredients: accessibility, interactivity, and personal relevance (see Results). In 2021, we retained only the 12 items with the highest factor loadings on the three active ingredients, while adding four new items. The 2021 results showed that the new items did not improve the interpretability or predictive power of the survey, and therefore, the four new items were deemed redundant. For clarity and brevity, we limit our Results section to the 12 items that were most useful (see Table 1).

2.2.2 Outcome items

In 2019, the measurement included 10 statements to which participants could indicate their agreement on Likert scales ranging from 1 (Strongly disagree) to 7 (Strongly agree). We needed a concise survey that captured self-reported changes on five different outcomes. Since no validated survey was available that met these needs, we developed items based on formulations in the academic literature. Knowledge gain: “I gained new knowledge” and “I learned something new” [Allen et al., 2008; National Research Council, 2009]; Increased engagement: “I see better why science is relevant” and “My interest in research has increased” [Allen et al., 2008; National Research Council, 2009]; More understanding: “I better understand what research is” and “I have a better idea of science” [Allen et al., 2008; National Research Council, 2009]; Intellectual stimulation: “I have become curious” and “I know better what I do not know” [Sánchez-Mora, 2016; National Research Council, 2009]; Reduced distance: “Science feels more familiar” and “I experience less distance from scientists” [Sánchez-Mora, 2016; National Research Council, 2009].

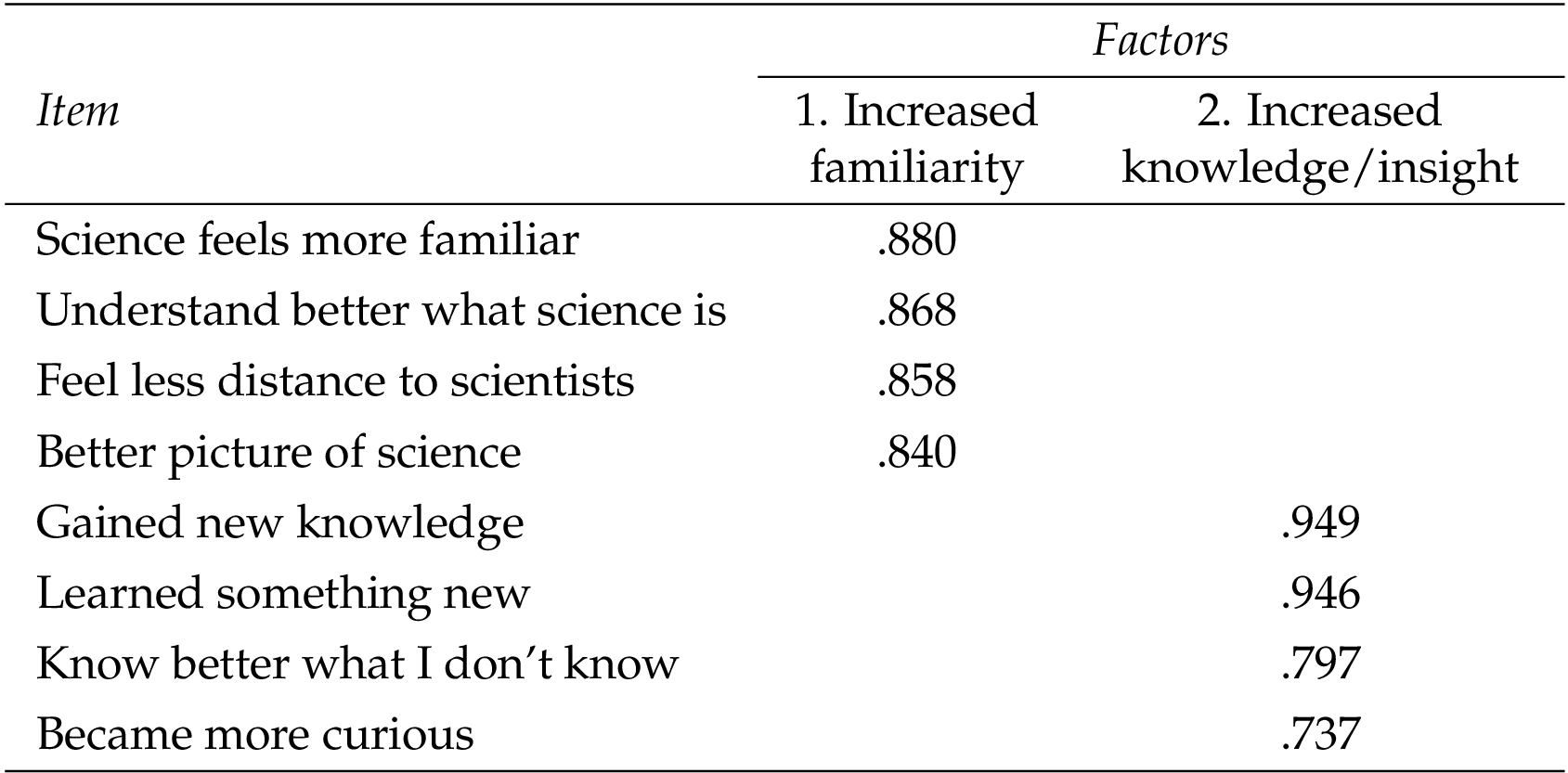

After the 2019 edition, we analyzed the data and identified two underlying impacts: increased familiarity and increased knowledge/insight (see Results). In 2021, we retained only the 8 items with the highest factor loadings on the two impacts. The remainder of the paper will focus on these 8 items (see Table 2).

3 Results

3.1 Factor analysis on predictive process items

The factor analysis identified three underlying factors (active ingredients) that together explained 61.9% of the variation in the predictive processes data. The factor solution is depicted in Table 1. The eigenvalues of the first, second, and third factor were 3.95, 1.95, and 1.53, respectively. The next step in a factor analysis is to interpret the factors, which is a subjective, inductive process of finding a theoretically meaningful label that reflects the content of the items loading highly on this factor [Williams et al., 2010]. The items that loaded highly on the first extracted factor measured feeling at ease, experiencing a friendly atmosphere, and being able to understand the content (see Table 1). Hence, the first active ingredient clustered the items on comprehensibility and safety. It seems to reflect the extent to which the activity and the scientists came across as open, comprehensible, and approachable. We labeled this active ingredient ‘accessibility’.

The items that loaded highly on the second factor measured being invited to participate in the activity, experiencing room for dialogue, contributing actively, and having personal contact with others. Hence, this factor reflects the extent to which visitors experienced interactive involvement with the activity and with other people. Therefore, the second active ingredient was labeled ‘interactivity’.

The items that loaded highly on the third factor measured being emotionally touched, learning about the self, feeling thoughtful and feeling a connection to one’s personal life. Hence, the third active ingredient reflected an overall experience of self-relevance. It also includes an aspect of being emotionally ‘moved’, which signals that the activity touched visitors’ deeper concerns or core values [Cova & Deonna, 2014]. Therefore, we labelled the third active ingredient ‘personal relevance’.

3.2 Factor analysis of outcome items

The factor analysis of the outcome items identified two underlying factors (impacts) that together explained 75.6% of the variation in the data. The factor solution is depicted in Table 2. The eigenvalues of the first and second factor were 4.63 and 1.42, respectively.
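The explained-variance percentages reported for the two factor solutions can be checked against the eigenvalues with a short calculation. The sketch below assumes that the components were extracted from the correlation matrix of the 12 retained predictive process items and the 8 retained outcome items, so that the total variance equals the number of items; the function name `explained_variance` is illustrative.

```python
def explained_variance(eigenvalues, n_items):
    """Percentage of total variance captured by the retained components.

    Assumes standardized items, so the total variance equals n_items.
    """
    return 100 * sum(eigenvalues) / n_items


# Eigenvalues as reported in the text
predictive = explained_variance([3.95, 1.95, 1.53], n_items=12)
outcome = explained_variance([4.63, 1.42], n_items=8)

print(f"{predictive:.1f}% {outcome:.1f}%")  # prints "61.9% 75.6%"
```

Under this assumption, the eigenvalues reproduce the reported 61.9% and 75.6%.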

The items with the highest loadings on the first factor referred to feeling more familiarity with science, more understanding, feeling less distance to scientists, and having a better picture of science. Hence, this factor reflects a feeling of closeness with science and scientists. We labeled this impact ‘increased familiarity’.

The items with the highest loadings on the second factor were about gaining new knowledge, learning something new, becoming aware of one’s knowledge gaps, and becoming more curious. Hence, this factor clustered the items on knowledge gain and intellectual stimulation. We labeled this impact ‘increased knowledge/insight’.

3.3 Predictive value of active ingredients

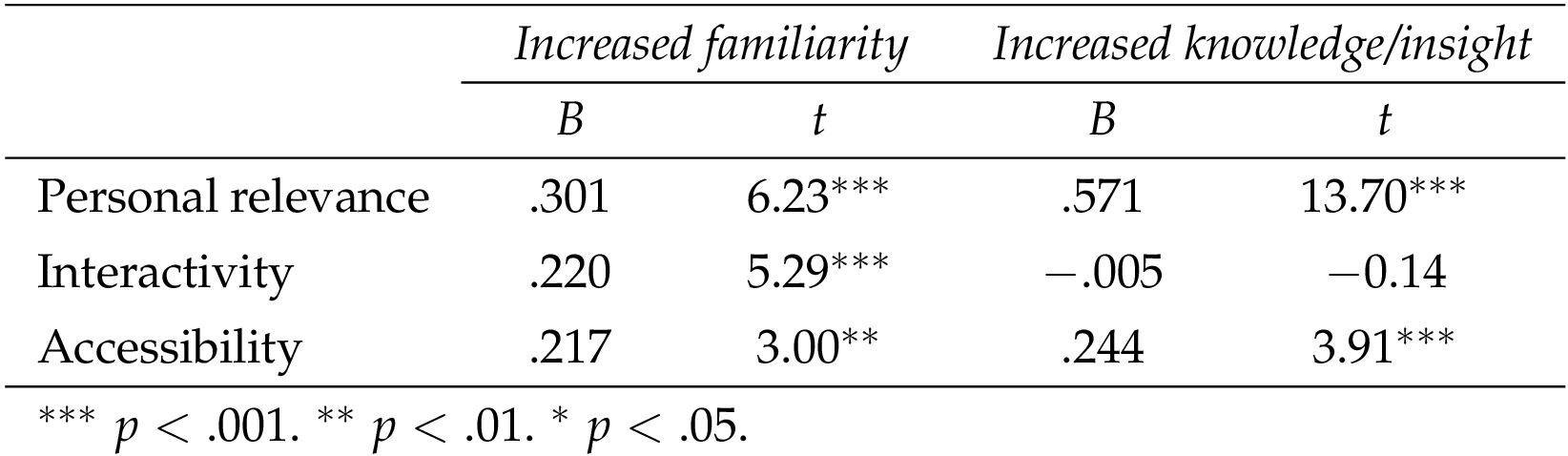

The items of the three active ingredients showed good reliability (Cronbach’s alphas of accessibility, interactivity, and personal relevance were .82, .73, and .80, respectively), as did the items of the two impacts (Cronbach’s alphas of increased familiarity and increased knowledge/insight were .89 and .89, respectively). We therefore calculated means for each factor.
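For readers less familiar with these reliability statistics, the sketch below shows one common way to compute Cronbach's alpha and per-respondent scale means. The response data are hypothetical and the function names `cronbach_alpha` and `scale_means` are illustrative; the code is not taken from the study's analysis.

```python
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha for respondent rows holding one score per item."""
    k = len(rows[0])
    # Sample variance of each item across respondents
    item_variances = [variance([row[j] for row in rows]) for j in range(k)]
    # Sample variance of the summed scale score
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def scale_means(rows):
    """Mean item score per respondent, used as the factor score."""
    return [mean(row) for row in rows]


# Hypothetical 1-7 Likert responses of five respondents to four items
# that are assumed to load on the same factor
responses = [
    [6, 7, 6, 5],
    [4, 4, 5, 4],
    [7, 6, 7, 7],
    [3, 4, 3, 2],
    [5, 5, 6, 5],
]
alpha = cronbach_alpha(responses)
scores = scale_means(responses)
print(round(alpha, 2), scores)
```

Averaging the items rather than summing them keeps each factor score on the original 1 to 7 response scale, which makes the scores easier to compare across factors with different numbers of items.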

The means were then entered in regression analyses to evaluate the power of the active ingredients to predict impacts. The results are summarized in Table 3. Personal relevance, interactivity and accessibility all significantly and positively predicted increased familiarity. Personal relevance and accessibility significantly and positively predicted self-reported increased knowledge/insight, whereas interactivity was not a significant predictor of self-reported increased knowledge/insight. The regression analyses suggest that personal relevance was the most powerful active ingredient, as it was the strongest predictor of both increased familiarity and increased knowledge/insight.
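The regression step can be illustrated with an ordinary least squares fit of one impact score on the three active ingredient scores. The sketch below is a simplified illustration on hypothetical data: the coefficients in `true_coefficients` are invented for the example and are unrelated to Table 3, the outcome is constructed without noise so that the fit recovers them exactly, and the helper names are illustrative.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def ols(predictors, outcome):
    """Return [intercept, b1, b2, ...] from the normal equations."""
    design = [[1.0] + list(row) for row in predictors]
    p = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(design, outcome)) for i in range(p)]
    return solve_linear_system(xtx, xty)


# Hypothetical factor means per respondent:
# accessibility, interactivity, personal relevance
predictors = [
    [6.0, 2.0, 5.0],
    [5.0, 4.0, 3.0],
    [7.0, 3.0, 6.0],
    [4.0, 5.0, 2.0],
    [6.5, 1.0, 4.0],
    [3.0, 6.0, 5.5],
]
# Invented coefficients (intercept first), used only to build the example outcome
true_coefficients = [1.0, 0.3, 0.1, 0.5]
outcome = [
    true_coefficients[0]
    + sum(b * x for b, x in zip(true_coefficients[1:], row))
    for row in predictors
]

coefficients = ols(predictors, outcome)
print([round(c, 3) for c in coefficients])
```

With real survey data the outcome contains noise, so a statistics package would additionally report standard errors and significance tests for each coefficient, which this sketch omits.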

4 Discussion

This research identified active ingredients and impacts of a science festival. Exploratory factor analyses of survey data suggested that the festival has two types of impacts on the visitors, namely increased familiarity with science and scientists, and increased knowledge/insight. These results confirm that the science festival not only served to educate people, but also to reduce distance and raise feelings of familiarity between scientists and visitors [Sánchez-Mora, 2016; National Research Council, 2009].

The results further showed that three active ingredients predicted impact, namely personal relevance, interactivity, and accessibility. The most powerful active ingredient was personal relevance, or the sense that the activity touches on one’s emotions and personal life. The importance of this active ingredient is reminiscent of the self-relevance effect, a tendency for people to encode information more deeply when the self is implicated in the information [e.g., Scheller & Sui, 2022]. It also converges with research on persuasion showing that personal relevance is key to activating central route information processing, which denotes processing information with high motivation and forming lasting impressions and behavioral consequences [Petty & Cacioppo, 1986].

Given the importance of personal relevance, it is useful for science educators to know how it can be achieved. A common way to stimulate a sense of personal relevance is by demonstrating how a given topic has important consequences for the audience [Petty & Cacioppo, 1986; Wagner & Petty, 2011]. Our factor analysis indicated that the sense of personal relevance may also be increased by touching on people’s emotions and providing them opportunities to learn about and reflect upon themselves. These insights support the current trend of communicating science via art, which is believed to foster an emotional bond between visitors and the scientific material, ultimately promoting greater learning outcomes [Davies, Halpern, Horst, Kirby & Lewenstein, 2019].

A second active ingredient was accessibility. The results showed that accessibility predicted increased familiarity and increased knowledge and insight. This makes sense as accessibility — for example by translating complex findings into understandable formats, providing clear examples, avoiding scientific jargon — is a standard recommendation in any guideline for science communication [e.g., American Association for the Advancement of Science, n.d.; Bell et al., 2018]. An important insight from our analysis was that accessibility benefits from a friendly atmosphere that puts visitors at ease and creates a constructive environment for learning. Without this cooperative atmosphere, visitors may become vigilant, worried about making a good impression and avoiding making mistakes, which impairs their learning and development [Csíkszentmihályi, 1990; Jones, 2009].

The third active ingredient was interactivity, which comprises a mixture of promoting active participation, dialogue, and social connection. We found that a higher level of interactivity predicted increased familiarity with science and scientists, which aligns with other research showing that two-way communication facilitates understanding and trust. For example, Contact Theory [Allport, 1954] holds that sheer personal contact may generate mutual understanding and empathy. However, research on Contact Theory also showed that this positive impact only arises when the interacting individuals or groups have equal status within the contact situation, engage in cooperation, have common goals, and contact is supported by relevant authorities, law or custom [Pettigrew & Tropp, 2008]. Science communicators may benefit from taking these factors into account, for example by alternating moments of ‘talking’ and ‘listening’ to audience members (to create equal status) and by co-creating concrete products or outcomes with audience members (to have a common goal).

Interactivity did not predict increased knowledge or insight. This result does not mean that interactive activities always fail to produce cognitive learning. It only means that in the context of this science festival, activities with high perceived interactivity were not particularly effective at improving knowledge and insight. It is possible that festival activities that focused primarily on interaction (e.g., live experiments and dialogues) happened to place less emphasis on the transfer of scientific knowledge and insights. Additional data on science communication activities that vary in interactivity are needed to investigate this question further.

4.1 Limitations

This survey study provided new insights into the success factors of a science festival, but also suffered from important limitations. To establish the causal influence of the active ingredients on the impacts, experimental research is needed that may include, for example, a pre and post measurement of the active ingredients and/or a control group of individuals who did not attend the festival.

Furthermore, the research was essentially a case study. To discover whether the findings are generalizable, comparisons with other science festivals are needed, preferably at different places and attracting different audiences (e.g., participants who are less educated or from a different culture). Our sample consisted mainly of people who were well educated and, since they chose to attend the science festival in the first place, were likely more interested in science than most audiences. Although these problems may not be readily avoided in future studies on science festivals, these limitations are important to keep in mind.

Furthermore, our procedure dictated that respondents kept one festival activity in mind while completing the survey. Most participants focused on an academic talk, an activity characterized by low interactivity, which further limits the generalizability. Additionally, we only measured the immediate impact of the festival on visitors’ knowledge, attitudes, and behavior. To expand our understanding of the societal impact of science festivals, future research should consider long-term impacts beyond the on-site responses collected in this study.

Despite these limitations, the study yielded useful exploratory insights on the active ingredients and impacts of a science festival and explored new ways to investigate these processes. The insights help to enhance the quality of this science festival and may help science communicators design and conduct impact studies in the context of their own public event.

Acknowledgments

The authors are grateful to Jessie Waalwijk for her help in organizing the study, and to Anne Land-Zandstra and Ward Peeters for providing helpful comments on the manuscript. The research was partly funded by the Utrecht University Open Science Programme.

References

- Allen, S., Campbell, P. B., Dierking, L. D., Flagg, B. N., Friedman, A. J., Garibay, C., … Ucko, D. A. (2008). Framework for evaluating impacts of informal science education projects. Report from a National Science Foundation workshop. The National Science Foundation, Division of Research on Learning in Formal and Informal Settings. Retrieved from https://www.informalscience.org/framework-evaluating-impacts-informal-science-education-projects

- Allport, G. W. (1954). The nature of prejudice. New York, NY, U.S.A.: Doubleday Anchor Books.

- American Association for the Advancement of Science (n.d.). AAAS communication toolkit. Retrieved July 30, 2022, from https://www.aaas.org/resources/communication-toolkit

- Bell, L., Lowenthal, C., Sittenfeld, D., Todd, K., Pfeifle, S. & Kunz Kollmann, E. (2018). Public engagement with science: a guide to creating conversations among publics and scientists for mutual learning and societal decision-making. Museum of Science. Retrieved July 30, 2022, from https://www.nisenet.org/sites/default/files/pes_guide_09_13pdf.pdf

- Burns, T. W., O’Connor, D. J. & Stocklmayer, S. M. (2003). Science communication: a contemporary definition. Public Understanding of Science 12 (2), 183–202. doi:10.1177/09636625030122004

- Chan, D. (2009). So why ask me? Are self-report data really that bad? In C. E. Lance & R. J. Vandenberg (Eds.), Statistical and methodological myths and urban legends: doctrine, verity and fable in the organizational and social sciences (pp. 309–336). doi:10.4324/9780203867266

- Cova, F. & Deonna, J. A. (2014). Being moved. Philosophical Studies 169 (3), 447–466. doi:10.1007/s11098-013-0192-9

- Csíkszentmihályi, M. (1990). Flow: the psychology of optimal experience. New York, NY, U.S.A.: Harper & Row.

- Davies, S. R., Halpern, M., Horst, M., Kirby, D. & Lewenstein, B. (2019). Science stories as culture: experience, identity, narrative and emotion in public communication of science. JCOM 18 (05), A01. doi:10.22323/2.18050201

- Deci, E. L. & Ryan, R. M. (1985). Intrinsic motivation and self-determination in human behavior. New York, NY, U.S.A.: Plenum Press.

- Deci, E. L. & Ryan, R. M. (2012). Self-determination theory. In P. A. M. Van Lange, A. W. Kruglanski & E. T. Higgins (Eds.), Handbook of theories of social psychology. Volume 1 (pp. 416–437). doi:10.4135/9781446249215.n21

- Falk, J. H. & Dierking, L. D. (2004). The contextual model of learning. In G. Anderson (Ed.), Reinventing the museum: historical and contemporary perspectives on the paradigm shift (pp. 139–142). Oxford, U.K.: AltaMira Press.

- Fransman, J. (2018). Charting a course to an emerging field of ‘research engagement studies’: a conceptual meta-synthesis. Research for All 2 (2), 185–229. doi:10.18546/RFA.02.2.02

- Imperial College London (n.d.). Engagement toolkit. Retrieved July 30, 2022, from https://www.imperial.ac.uk/be-inspired/societal-engagement/resources-and-case-studies/engagement-toolkit/

- Jensen, E. (2014). The problems with science communication evaluation. JCOM 13 (01), C04. doi:10.22323/2.13010304

- Jensen, E. & Buckley, N. (2014). Why people attend science festivals: interests, motivations and self-reported benefits of public engagement with research. Public Understanding of Science 23 (5), 557–573. doi:10.1177/0963662512458624

- Jones, B. D. (2009). Motivating students to engage in learning: the MUSIC model of academic motivation. International Journal of Teaching and Learning in Higher Education 21 (2), 272–285. Retrieved July 30, 2022, from https://files.eric.ed.gov/fulltext/EJ899315.pdf

- Li, J. & Julian, M. M. (2012). Developmental relationships as the active ingredient: a unifying working hypothesis of “what works” across intervention settings. American Journal of Orthopsychiatry 82 (2), 157–166. doi:10.1111/j.1939-0025.2012.01151.x

- National Research Council (2009). Learning science in informal environments: people, places, and pursuits. doi:10.17226/12190

- Oliver, M. B. & Raney, A. A. (2011). Entertainment as pleasurable and meaningful: identifying hedonic and eudaimonic motivations for entertainment consumption. Journal of Communication 61 (5), 984–1004. doi:10.1111/j.1460-2466.2011.01585.x

- Pettigrew, T. F. & Tropp, L. R. (2008). How does intergroup contact reduce prejudice? Meta-analytic tests of three mediators. European Journal of Social Psychology 38 (6), 922–934. doi:10.1002/ejsp.504

- Petty, R. E. & Cacioppo, J. T. (1986). The Elaboration Likelihood Model of persuasion. Advances in Experimental Social Psychology 19, 123–205. doi:10.1016/S0065-2601(08)60214-2

- Salmi, H. & Thuneberg, H. (2019). The role of self-determination in informal and formal science learning contexts. Learning Environments Research 22 (1), 43–63. doi:10.1007/s10984-018-9266-0

- Sánchez-Mora, M. C. (2016). Towards a taxonomy for public communication of science activities. JCOM 15 (02), Y01. doi:10.22323/2.15020401

- Scheller, M. & Sui, J. (2022). The power of the self: anchoring information processing across contexts. Journal of Experimental Psychology: Human Perception and Performance 48 (9), 1001–1021. doi:10.1037/xhp0001017

- Thompson, B. (2004). Exploratory and confirmatory factor analysis: understanding concepts and applications. doi:10.1037/10694-000

- Wagner, B. C. & Petty, R. E. (2011). The elaboration likelihood model of persuasion: thoughtful and non-thoughtful social influence. In D. Chadee (Ed.), Theories in social psychology (pp. 96–116). Oxford, U.K.: Wiley-Blackwell.

- Watermeyer, R. & Lewis, J. (2015). Public engagement in higher education: the state of the art. In J. Case & J. Huisman (Eds.), Investigating higher education: a critical review of research contributions (pp. 42–60). London, U.K.: Routledge.

- Williams, B., Onsman, A. & Brown, T. (2010). Exploratory factor analysis: a five-step guide for novices. Australasian Journal of Paramedicine 8, 1–13. doi:10.33151/ajp.8.3.93

Authors

Madelijn Strick is an Associate Professor in Social Psychology. She is an expert on measuring the impact and active ingredients of science communication and public engagement. Madelijn has published extensively on the role of humor, being moved, and storytelling in communication and behavior change. She is co-founder and principal investigator of the IMPACTLAB, a collaborative research center of Utrecht University and Leiden University that develops knowledge on science communication impact.

@MadelijnStrick E-mail: m.strick@uu.nl

Stephanie Helfferich is a science writer and project manager in the Programme Office of the Centre for Science and Culture of Utrecht University. She supports and initiates the development of public engagement activities by academics and support staff at Utrecht University. She also designs, implements, and analyzes impact measurements for public engagement activities at Utrecht University. Stephanie is a member of the supervisory committee of the IMPACTLAB.

@stephhelff E-mail: s.helfferich@uu.nl