1 The emergence of ChatGPT and generative AI

The novelty of ChatGPT is quickly and impressively demonstrated by the fact that I don’t have to introduce it here — ChatGPT can do that itself: “I’m a large language model (LLM) trained by OpenAI”, it responds when prompted, “designed to answer questions, provide information, and engage in conversations on a wide variety of topics” (ChatGPT based on GPT-3, Feb 26, 2023, prompt “Can you introduce yourself in 2 sentences, please?”). The underlying GPT (for “Generative Pre-trained Transformer”) is an autoregressive LLM that, combined with ChatGPT’s dialogue functionality, provides original, human-like responses to user prompts based on supervised and reinforcement learning techniques that involved extensive digital training data as well as human feedback and response evaluations. After its public launch on November 30, 2022, the chatbot’s first version (based on the GPT-3 model) reached a million users in less than a week and 100 million users by January 2023, arguably one of the fastest rollouts of any technology in history.

ChatGPT has since become the poster child of a broader development: the rise of generative AI that generates novel outputs based on training data. Generative AI can translate text (like DeepL), create imagery (like DALL-E, Midjourney or Stable Diffusion), imitate voices (like VALL-E), or generate textual responses like ChatGPT and its competitors such as Bard, Anthropic Claude, AI Sydney or others that will have come out by the time this essay is published (for an overview of the landscape of large(r) generative AI companies see Mu [2023]).

Such tools have proliferated in recent months and are widely diagnosed to fundamentally impact, even “disrupt” many realms of life [e.g. Johnson, 2022]: the economy [Eloundou, Manning, Mishkin & Rock, 2023], programming [Peng, Kalliamvakou, Cihon & Demirer, 2023], journalism [Pavlik, 2023], art [Eisikovits & Stubbs, 2023], music [Nicolaou, 2023], sports [Goldberg, 2023] and other fields.

Generative AI will fundamentally influence academia and science as well. On the one hand, it will likely impact all aspects of research, from identifying research gaps and generating hypotheses based on literature reviews, through data collection, annotation and writing code, all the way to summarising findings, writing them up and presenting them visually [Stokel-Walker & Van Noorden, 2023]. Scholarly abstracts and papers have already been co-produced with AI tools, and the status of AI co-authors is being discussed in the scientific community [e.g. Flanagin, Bibbins-Domingo, Berkwits & Christiansen, 2023]. Concerns that AI will exponentially aggravate the already challenging publish-or-perish problem in scholarly publishing have risen along with fears of increasing and harder-to-detect fraud [Curtis & ChatGPT, 2023; Thorp, 2023].

On the other hand, generative AI will impact teaching. It can generate syllabi for classes and study programs, exam questions and evaluations for teachers [Gleason, 2022]. It can serve as a partner for students to further dialogical learning [Mollick, 2023d], structure their texts and summarise scholarly literature for them. Also, it has already shown that it can pass exams and might be able to write homework and theses, triggering an arms race with plagiarism detectors, but also urging higher education institutions to reflect on the competencies and skills they should teach in the first place [Barnett, 2023; Choi, Hickman, Monahan & Schwarcz, 2023].

2 Generative AI and the practice of science communication

Apart from research and teaching — the first two “missions” of the academic world — generative AI will also influence science communication, the “third mission”. Science communication, conceptualised broadly, is a field of practice that includes the public communication of individual scientists, the (often strategic) communication of scientific organisations such as PR or marketing, science journalism and other forms of science-related public communication [Davies & Horst, 2016; Schäfer, Kristiansen & Bonfadelli, 2015]. Practitioners in the field pursue different aims and models, with the “public understanding of science” and dialogue models arguably the most prominent [Akin, 2017; Bucchi, 2008].

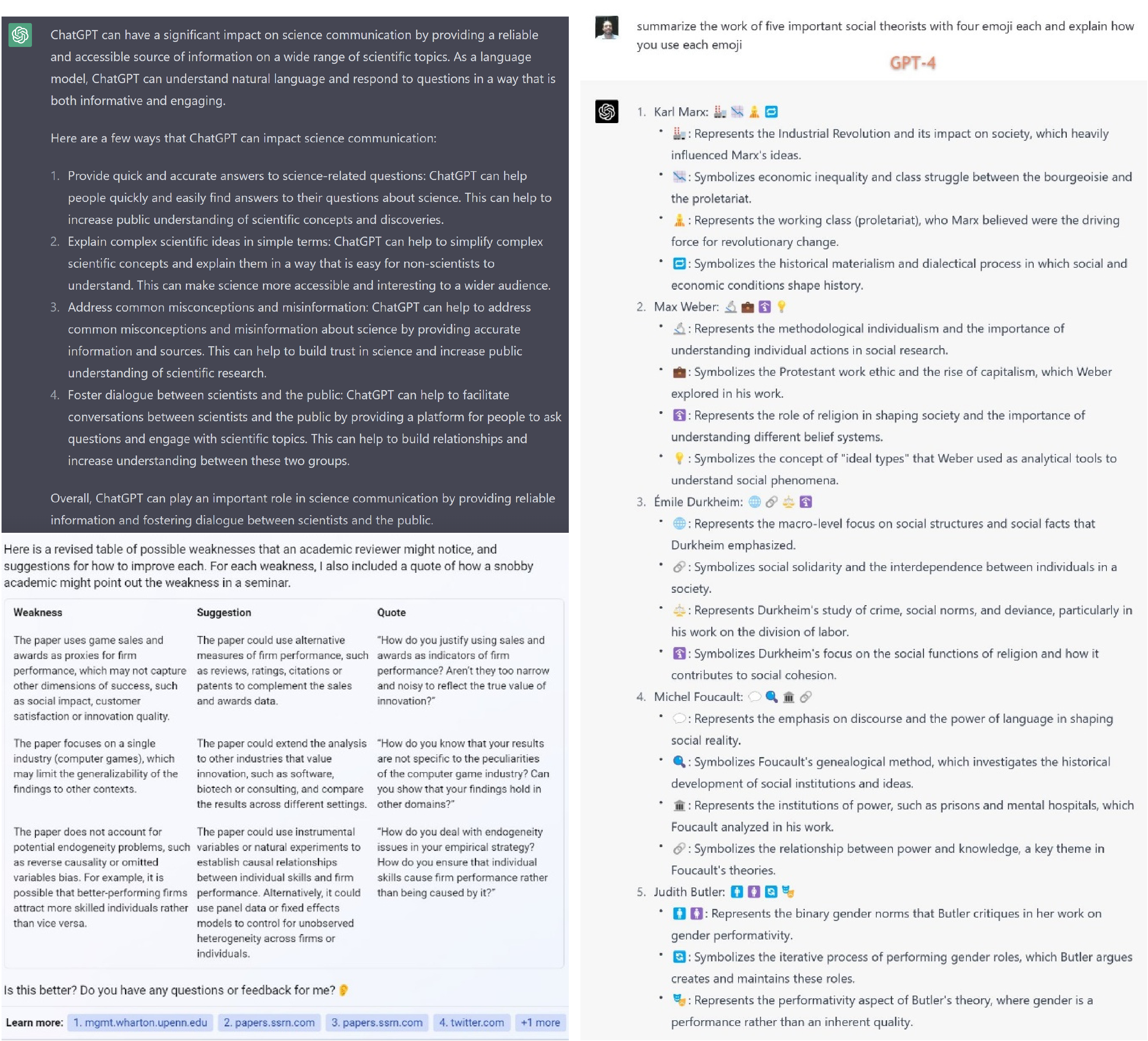

ChatGPT itself, when prompted, states that it will “have a significant impact” on many aspects of this field by “providing a reliable and accessible source of information on a wide range of scientific topics” and helping “increase public understanding of scientific concepts and discoveries”, by addressing “common misconceptions and misinformation about science by providing accurate information and sources” and building “trust in science”, and by fostering “dialogue between scientists and the public by providing a platform for people to ask questions and engage with scientific topics” (Figure 1).

Practitioners and scholars of science communication have also begun to reflect upon the influence of generative AI on science communication practice, and they seem to agree that it will be significant. As often with new technologies, and notwithstanding the many current unknowns and uncertainties, the amplitude of optimistic and pessimistic takes in this discussion is considerable and ranges from positive to dystopian.

Optimists emphasise the enormous spectrum of possible applications for generative AI in science communication. They point to its “potential to significantly streamline and automate the process of generating content ideas” [Wesche, 2023] and to its continuously improving translational ability: its capability for summarising scholarly publications and findings [Gravel, D’Amours-Gravel & Osmanlliu, n.d.] and “explaining complicated issues simply” [Hegelbach, 2023, cf. Figure 1]. This can be utilised by science communicators to write news articles, social media posts or media releases, generate slogans and headlines for communication campaigns, translate scientific content into Wikipedia entries etc. Academic and scientific organisations are already pondering the use of generative AI in their communication and outreach efforts [Myklebust, 2023] and media and blog articles describe how practitioners are beginning to use generative AI to generate texts [e.g. Broader Impacts Productions, 2023].

And while ChatGPT, currently, answers in written text only, a move towards multimodality seems to be coming soon via VisualChatGPT [Koch & Hahn, 2023]. Already, savvy users have shown that generative AI can provide multimodal replies such as emojis, illustrations and infographics, spoken language and even videos [e.g. synthesia, 2023, see Figure 1], when different tools are blended. Generative AI has even been shown to produce games [e.g. Nash, 2023], which would allow for tailor-made gamification in science communication even for users without advanced programming skills.

Others have emphasised the usefulness of generative AI from a user perspective. After all, it gives users interested in science-related topics immediate responses on specific questions [iTechnoLabs, 2023]. It allows them to scale the linguistic complexity, terminology and overall comprehensibility up and down according to their own subjective needs, to ask repeated and even seemingly ‘dumb’ questions [Angelis et al., 2023]. It enables them to utilise the turn-taking characteristic of tools like ChatGPT to enter into an actual conversation, and to inquire about aspects of the answer they did not entirely understand until they receive responses they find fully satisfactory [cf. Goedecke, Koester & ChatGPT, 2023]. In principle, therefore, generative AI can provide dialogical science communication at scale, and herein lies a tantalising potential for broadening, even democratising dialogical science communication that was, so far, often limited to small groups or users with a prior affinity for science.

The potential of generative AI for the practice of science communication is already considerable. And it will undoubtedly broaden further as new tools emerge, users discover their full spectrum of options, and as different tools are blended into one another (which has started with the inclusion of plugins into ChatGPT, e.g. Barsee [2023]). In addition, available tools will become easier to use and integrated more naturally into established software environments like search engines (a step that Bing or you.com are already taking) or operating systems.

Pessimists, on the other hand, have identified a number of challenges, some of them substantial. Response accuracy (how accurate ChatGPT’s responses are from a scientific standpoint) was the first one. It was discussed immediately after ChatGPT went public, partly because the launch of Meta’s Galactica LLM, which was specifically trained on scientific texts, had failed only weeks before due to accuracy problems [Heaven, 2022]. Accordingly, it was repeatedly pointed out that ChatGPT was a “stochastic parrot” [Doctorow, 2023; Sarraju et al., 2023] that merely approximated the content of its replies according to its training data without a deeper understanding of such content, that this approximation also applied to numbers and references which often ended up being wrong or fictitious, and that therefore, ChatGPT had pronounced weaknesses in an area crucial for science communication. Fittingly, early studies showed that ChatGPT replies were often of “limited quality” [Gravel et al., n.d.] from a scientific standpoint. But considerable improvements in these respects are already visible across the variants of GPT (e.g. from GPT-3 to the GPT-4 version that Microsoft’s search engine Bing uses), and “many of the things we thought AI would be bad at for a while (complex integration of data sources, "learning" and improving by being told to look online for examples, seemingly creative suggestions based on research, etc.) are already possible” [Mollick, 2023c]. In addition, tools like Perplexity.AI try to combine GPT with Google Scholar to provide replies that are better grounded in scientific evidence [Shabanov, 2023] and might eventually even help fact-checking [Hoes, Altay & Bermeo, 2023]. Still, practitioners should remain wary of the limitations of generative AI, which may be due to specifics of its training data (which in the case of GPT-3 famously ended in 2021, for example, and which in the case of other machine-learning tools often displayed pronounced biases, cf. Kordzadeh and Ghasemaghaei [2021]), its fundamental working principles or its output modalities.

Others are concerned about an “AI-driven infodemic” due to the “ability of LLMs to rapidly produce vast amounts of text” [Angelis et al., 2023, p. 1]. Generative AI might lead to a flood of information and a “pollution of our knowledge pool” [Nerlich, 2023] in which users — and, paradoxically, even the AI tools they might ask for help in the future — may have difficulty finding reliable information about science. Reliable content might be drowned in a sea of approximated, mediocre information [Haven, 2022], and the addition of mis- or disinformation to this information environment could even exacerbate this. Generative AI itself could become a powerful driver of such mis- and disinformation, and attempts to “jailbreak” ChatGPT have already shown that some users will find ways to bypass built-in restrictions [e.g. King, 2023]. Generative AI could then produce “wrongness at scale”, as it “can get more wrong, faster — and with greater apparent certitude and less transparency — than any innovation in recent memory” [Ulken, 2022]. And a “potential convergence of knowledge fabrication, fake science and knowledge dilution” [Nerlich, 2023] would be particularly problematic in a communication ecosystem where antagonistic positions towards science are prominent and have risen in importance [e.g. Mede & Schäfer, 2020; Rutjens, Heine, Sutton & van Harreveld, 2018].

Furthermore, generative AI has raised concerns about job security in many sectors, with early studies showing that AI tools could make “about 15% of all worker tasks in the US […] significantly faster at the same level of quality[, and when] incorporating software and tooling built on top of LLMs, this share increases to between 47 and 56%” [Eloundou et al., 2023, p. 1]. The potential to hand over certain writing, visualising or even programming tasks to generative AI coupled with the problematic financial situation of scientific and higher education institutions in some countries [Estermann, Bennetot Pruvot, Kupriyanova & Stoyanova, 2020] and the economic crisis of science journalism in many countries [Schäfer, 2017] could result in considerable challenges for science communication practitioners.

3 Generative AI and research on science communication

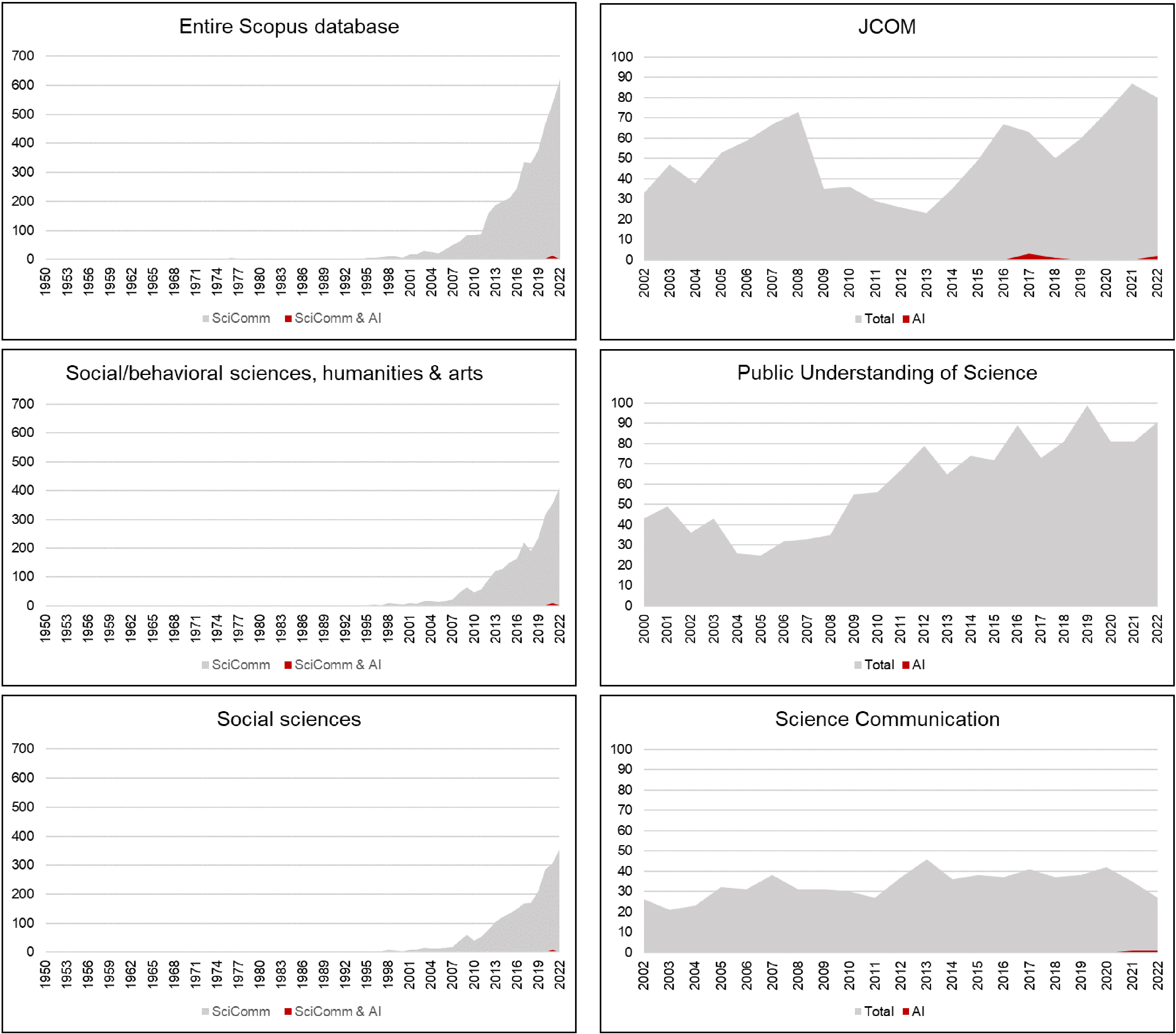

Given that our field researches communication about science, AI as a key technology and important field of scientific development is strikingly under-researched. A simple database search illustrates this: when searching the Scopus database for scholarly publications mentioning both “science communication” and “artificial intelligence” (i.e., not even limiting the search to generative AI), only a handful of hits appear, and those stem almost exclusively from the past few years (Figure 2). This is true when searching the entire database, and for searches in the humanities and social sciences as well. It is also true when applying a similar search to the three most influential journals in the field of science communication, “JCOM”, “Public Understanding of Science” and “Science Communication”. And it was further underlined in my research for this essay: While many media articles, social media posts etc. discuss the impact of ChatGPT, generative AI and AI on other fields including scholarly publishing and the scientific process more broadly, articles on its role in science communication are still relatively scarce.

The Scopus search included the following document types: “Article”, “Book chapter”, “Editorial”, “Conference Paper”, “Book”, “Letter” and “Note”. The first search included the entire database, the second only the subject areas “Social Sciences”, “Psychology”, “Arts and Humanities”, “Business, Management and Accounting” and “Economics, Econometrics and Finance”, and the third only the subject area “Social Sciences”. The search was conducted in February of 2023.
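
The three searches described above could be expressed roughly as follows. This is a minimal sketch under stated assumptions, not the query actually used for Figure 2: the field code `TITLE-ABS-KEY` is an assumption (the text only says that publications "mention" both terms), the helper name `build_scopus_query` is hypothetical, and the document-type and subject-area codes are the ones Scopus's advanced search is understood to use for the categories listed.

```python
from typing import Optional, Sequence

# Scopus advanced-search codes for the document types named in the text.
DOC_TYPES = {
    "Article": "ar",
    "Book chapter": "ch",
    "Editorial": "ed",
    "Conference Paper": "cp",
    "Book": "bk",
    "Letter": "le",
    "Note": "no",
}

# Subject-area codes for the second search; the third uses only "SOCI".
HUMANITIES_AND_SOCIAL_SCIENCES = ["SOCI", "PSYC", "ARTS", "BUSI", "ECON"]


def build_scopus_query(subject_areas: Optional[Sequence[str]] = None) -> str:
    """Build an advanced-search string (hypothetical helper, for illustration)."""
    # Assumption: both phrases are searched in title, abstract and keywords.
    query = 'TITLE-ABS-KEY("science communication" AND "artificial intelligence")'
    query += " AND DOCTYPE(" + " OR ".join(DOC_TYPES.values()) + ")"
    if subject_areas:
        query += " AND SUBJAREA(" + " OR ".join(subject_areas) + ")"
    return query


entire_database = build_scopus_query()
humanities_social = build_scopus_query(HUMANITIES_AND_SOCIAL_SCIENCES)
social_sciences_only = build_scopus_query(["SOCI"])
```

Writing the query down in this form makes the search easier to repeat later, for instance to check how the number of hits changes over time.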

Given the importance of generative AI, its exponential growth and its potential impact on our field itself, we as science communication researchers urgently need to remedy this: We need a research push assessing the nexus between (generative) AI and science communication. Four avenues could and should be pursued by such research.

First, scholars should analyse public communication about AI. Generative AI, and AI more broadly, are major scientific and technological issues that have become popular topics of public debate as well and, thus, natural objects for research on science communication. This research strand would treat AI similarly to other objects of science communication research and, in doing so, could apply the full range of the respective analytical perspectives:

- On the production side, researchers should analyse how scholars, scientific organisations and institutions of higher education, tech companies, stakeholders, regulators, journalists, NGOs and others communicate about (generative) AI, what perspectives, frames and imaginaries they promote, and what strategies they employ to position them prominently in which public arenas. Some scholars have done that already, but without focusing on generative AI specifically or connecting it to science communication, and with a clear slant towards corporate communicators in Anglophone countries [for an overview, see Richter, Katzenbach & Schäfer, 2023].

- Scholars should also analyse what public communication about generative AI in legacy media, social media, public imagery, fictional accounts and elsewhere looks like. Only a few researchers have done that with regard to AI in general, and analyses of generative AI are lacking [for an overview, see Brause, Zeng, Schäfer & Katzenbach, 2023].

- Furthermore, researchers should analyse how non-experts, stakeholders, regulators etc. use and make sense of (generative) AI-related content: Where do they get information about AI, which sources do they use, how do they evaluate them and how does this communication affect their knowledge about, attitudes towards and use of (generative) AI? [cf. Schäfer & Metag, 2021].

But, second, generative AI also differs from other objects of science communication research in that the technology itself has “increased agency” as a form of “communicative AI” (Guzman and Lewis [2019, p. 79]; some scholars have even posited that GPT-4 contains “sparks of artificial general intelligence”, Bubeck et al. [2023]). Therefore, scholars should also analyse user interactions with ChatGPT and Co., i.e. communication with AI.

- In order to do so, on the one hand, scholars have to better understand how generative AI works, which is often a challenge. To use ChatGPT as an example: While the company behind the chatbot, OpenAI, has laid out how the chatbot was designed and trained in general, specifics remain opaque and information about the training data and algorithms is proprietary. This creates the typical black box that encapsulates and hides many aspects of crucial technologies in digital and datafied societies, from search engine algorithms and recommender systems to the specifics of content moderation [Seaver, 2019]. This black box severely hampers the ability of science communication (and other) scholars to judge generative AI, its potentials, but also its potential biases. While approaches such as reverse engineering (which attempts to understand a process or model by systematically analysing input and output data around the black box in between) are limited, they are still urgently needed for lack of better alternatives.

- On the other hand, scholars should analyse generative AI as an agent in science communication. How people interact with generative AI, how it responds and what the results of these interactions are on both sides are some of the most interesting research questions of the near future [Lermann Henestrosa & Kimmerle, 2022]. A first such study by Chen, Shao, Burapacheep and Li [2022], for example, analysed approximately 20,000 dialogues between GPT-3 and 3290 individuals on climate change and Black Lives Matter. It found, among other things, that the chatbot responded differently to climate change deniers compared to other groups, providing them with more research results and external links, but also using more negative and emotional language towards them. It is important to reconstruct interactions like these, their causes as well as their effects on human users and generative AI tools themselves [cf. Neff & Nagy, 2016].
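
The input-output logic of such an audit can be illustrated with a minimal sketch. It is not the procedure of any cited study: `audit_black_box` and `toy_model` are hypothetical names, the toy model is a stand-in for a real chatbot interface, and the two measures (reply length and number of links) are simple placeholders for the richer indicators a real analysis would need.

```python
import re
from statistics import mean
from typing import Callable, Dict, List

URL_PATTERN = re.compile(r"https?://\S+")


def audit_black_box(
    model: Callable[[str], str],
    prompts_by_group: Dict[str, List[str]],
) -> Dict[str, Dict[str, float]]:
    """Send each group's prompts to the model and summarise the replies per group."""
    summary = {}
    for group, prompts in prompts_by_group.items():
        replies = [model(prompt) for prompt in prompts]
        summary[group] = {
            # Average reply length in whitespace-separated words.
            "mean_words": mean(len(reply.split()) for reply in replies),
            # Average number of external links per reply.
            "mean_links": mean(len(URL_PATTERN.findall(reply)) for reply in replies),
        }
    return summary


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a chatbot; a real audit would query the actual system."""
    if "hoax" in prompt.lower():
        return "Studies disagree with that claim, see https://example.org/report"
    return "Climate change is well documented."


summary = audit_black_box(
    toy_model,
    {
        "sceptical": ["Is climate change a hoax?"],
        "neutral": ["What is climate change?"],
    },
)
```

Because the model is passed in as a plain function, the same comparison could in principle be run against any system that maps a prompt to a reply, without access to its training data or algorithms.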

The third avenue of research should scrutinise the impact of generative AI on science communication and its foundations. Again, this has (at least) two facets:

- On the one hand, scholars should analyse how generative AI impacts communication about a whole range of scientific issues. Given that autoregressive LLMs rely on training data, that characteristics of this training data influence how LLMs work, but that such training data may not be similarly available and equally well structured for different scholarly fields, it would be interesting to know whether certain fields lend themselves more easily to science communication via generative AI. Are there differences between STEM fields, the social and behavioural sciences and the arts, for example? Do fields for which clear, state-of-the-art summaries are publicly available have an advantage, like climate science with the regular assessment reports of the Intergovernmental Panel on Climate Change (IPCC)? Do large and/or mainstream research fields differ from niche fields, and (especially given that GPT-3’s training data was not entirely up to date at the time of its launch) do older fields differ from more recent ones?

- On the other hand, researchers should assess if and how generative AI affects and potentially changes the broader science communication ecosystem. This involves analysing whether the above-mentioned promises and pitfalls of practical science communication are empirically true: How widely will the technology be used to assist and supplant science communication efforts, is it used across modalities, and to what degree can it democratise dialogical science communication? And in turn, how accurate is AI-generated content, how much misinformation does it contain, and does it really produce “wrongness at scale”? But it also involves questions about power relations between communicators, and their potential shifts: Will generative AI enable smaller, less influential communicators to close the gap to bigger ones because the tools are available to everybody, or will powerful communicators be able to use them to further fortify their position? And similar to “digital divides” [Hargittai & Hsieh, 2013] surrounding the rise of online and social media communication, generative AI will likely also result in divides among users. There could be “first-level” divides in terms of access, as generative AI might not be available to, or too expensive for, some users. There could also be “second-level” divides in terms of differing literacy and skill in dealing with the technology: Some users might be skilled enough to use generative AI more fruitfully than others, a crucial divide because “to be valuable, ChatGPT needs a very discerning user” [From The Lab Bench, 2023]. But this ability is likely unevenly distributed in the population, and might result in widening gaps between those who can use generative AI to their advantage, and those who are less able to do so.

Fourth, the emergence of generative AI is a conceptual and theoretical challenge. After all, “artificial intelligence (AI) and people’s interactions with it […] do not fit neatly into paradigms of communication theory that have long focused on human–human communication” [Guzman & Lewis, 2019, p. 70]. For example, the above-mentioned distinction between mis- and disinformation is often made depending on the (alleged) intention of the communicator: Misinformation is then seen as wrong information unintentionally communicated, while disinformation is seen as wrong information deliberately passed on [Wardle, 2018]. But distinctions like these are more difficult to uphold when generative AI is involved. Conceptual work and theory-building are needed here, drawing on fields like Human-Machine Communication [Guzman, 2018] and social-constructivist approaches like Science and Technology Studies or Actor-Network-Theory. With its interdisciplinary tradition, research on science communication should be well positioned to contribute in this respect [cf. Greussing, Taddicken & Baram-Tsabari, 2022].

4 Bigger lessons and the road ahead

These arguments underline that generative AI — including but going considerably beyond ChatGPT — is of utmost importance for the practice of and research on science communication. And although even the short-term ramifications of generative AI for science communication are still largely unclear (not to speak of its mid- and long-term implications), practitioners and scholars should take the technology seriously, assess it critically, embrace its opportunities, but also tackle its challenges.

This is all the more urgent as generative AI, and AI more broadly, have not yet been core issues of the science communication community. This should, and likely will, change, as the community has demonstrated in the past that it can adapt quickly to emerging issues such as the COVID-19 pandemic.

A general point to be learned from the meteoric rise of generative AI is that we should all be continuously on the lookout for emerging socio-technical innovations that may impact (science) communication [Schäfer & Wessler, 2020; cf. Rahwan et al., 2019]. The rise of online communication, the emergence of social media, algorithmic curation and recommendation, the growth of short video platforms, the turn towards instant messengers etc. have all changed (science) communication considerably in recent years, and new developments are certain to come. For practitioners and scholars interested in science communication (as well as in other fields), it is important to keep abreast of technological development and reflect on its implications for their field(s).

A second point to remember is that technological development can be influenced. Social Construction of Technology [Bijker, 2009] and related approaches have demonstrated that not only technological capabilities, but also societal, sociocultural and sociopolitical factors influence the trajectory of technologies. How technologies such as generative AI are used, how broadly, by whom, and in what ways, is of enormous importance for their development and impact on society. And this use can be influenced: One example is the deliberate attempt to identify the values and potential biases inscribed into AI and to transform them in constructive ways, an approach that interventionist schools like “Values in Design” [Manders-Huits, 2011] have proposed and where science communication practice and research may well find their own place. Another example is the ongoing debate about the normative, ethical and regulatory foundations of generative AI, as current calls for a voluntary moratorium in AI development [Knight & Dave, 2023] and the ban of ChatGPT in Italy [Satariano, 2023] show.

Such attempts to critically assess generative AI and to limit its problems while making use of its potentials are crucial. This is especially true in a situation where a(nother) core technology of contemporary society seems to move quickly into the hands of a few Western corporations [Murgia, 2023] — a situation familiar from the early days of contemporary digital, datafied, platformed societies. These societies will now be heavily influenced by (generative) AI, and science communication research and practice can and should play an important role in its development.

Acknowledgments

I thank Sabrina H. Kessler for helpful comments and Xeno Krayss and Damiano Lombardi for helping me prepare this article.

References

- Akin, H. (2017). Overview of the Science of Science Communication. In K. H. Jamieson, D. M. Kahan & D. A. Scheufele (Eds.), The Oxford Handbook of the Science of Science Communication (pp. 25–33). doi:10.1093/oxfordhb/9780190497620.013.3

- Angelis, L. D., Baglivo, F., Arzilli, G., Privitera, G. P., Ferragina, P., Tozzi, A. E. & Rizzo, C. (2023). ChatGPT and the Rise of Large Language Models: The New AI-Driven Infodemic Threat in Public Health. SSRN Electronic Journal. Paper N. 4352931. doi:10.2139/ssrn.4352931

- Barnett, S. (2023). ChatGPT Is Making Universities Rethink Plagiarism. Wired. Retrieved from https://www.wired.com/story/chatgpt-college-university-plagiarism/

- Barsee (2023). ChatGPT is going to be the new App Store. Twitter. [@heyBarsee]. Retrieved from https://twitter.com/heyBarsee/status/1640044406202826756

- Bijker, W. E. (2009). Social Construction of Technology. In J. K. Olsen, S. A. Pedersen & V. F. Hendricks (Eds.), A Companion to the Philosophy of Technology (pp. 88–94). doi:10.1002/9781444310795.ch15

- Brause, S. R., Zeng, J., Schäfer, M. S. & Katzenbach, C. (2023). Media Representations of Artificial Intelligence. In S. Lindgren (Ed.), Handbook of Critical Studies of Artificial Intelligence. Edward Elgar Publishing. Retrieved from https://www.e-elgar.com/shop/gbp/handbook-of-critical-studies-of-artificial-intelligence-9781803928555.html

- Broader Impacts Productions (2023). How to Use ChatGPT for Science Communication. Broader Impacts Productions. Retrieved from https://www.broaderimpacts.tv/chatgpt-science-communication/

- Bubeck, S., Chandrasekaran, V., Eldan, R., Gehrke, J., Horvitz, E., Kamar, E., … Zhang, Y. (2023). Sparks of Artificial General Intelligence: Early experiments with GPT-4. doi:10.48550/ARXIV.2303.12712. arXiv: 2303.12712

- Bucchi, M. (2008). Of deficits, deviations and dialogues: theories of public communication of science. In M. Bucchi & B. Trench (Eds.), Handbook of public communication of science and technology (1st ed., pp. 57–76). London, U.K.: Routledge.

- Chen, K., Shao, A., Burapacheep, J. & Li, Y. (2022). How GPT-3 responds to different publics on climate change and Black Lives Matter: A critical appraisal of equity in conversational AI. doi:10.48550/ARXIV.2209.13627. arXiv: 2209.13627

- Choi, J. H., Hickman, K. E., Monahan, A. & Schwarcz, D. B. (2023). ChatGPT Goes to Law School. SSRN Electronic Journal. Paper N. 4335905. doi:10.2139/ssrn.4335905

- Curtis, N. & ChatGPT (2023). To ChatGPT or not to ChatGPT? The Impact of Artificial Intelligence on Academic Publishing. Pediatric Infectious Disease Journal 42(4), 275. doi:10.1097/inf.0000000000003852

- Davies, S. R. & Horst, M. (2016). Science Communication. doi:10.1057/978-1-137-50366-4

- Doctorow, C. (2023). Google’s chatbot panic (16 Feb 2023). Pluralistic: Daily links from Cory Doctorow. Retrieved from https://pluralistic.net/2023/02/16/tweedledumber/

- Eisikovits, N. & Stubbs, A. (2023). ChatGPT, DALL-E 2 and the collapse of the creative process. The Conversation. Retrieved from http://theconversation.com/chatgpt-dall-e-2-and-the-collapse-of-the-creative-process-196461

- Eloundou, T., Manning, S., Mishkin, P. & Rock, D. (2023). GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models. doi:10.48550/ARXIV.2303.10130. arXiv: 2303.10130

- Estermann, T., Bennetot Pruvot, E., Kupriyanova, V. & Stoyanova, H. (2020). The impact of the Covid-19 crisis on university funding in Europe. European University Association. Retrieved from https://eua.eu/resources/publications/927:the-impact-of-the-covid-19-crisis-on-university-funding-in-europe.html

- Flanagin, A., Bibbins-Domingo, K., Berkwits, M. & Christiansen, S. L. (2023). Nonhuman “Authors” and Implications for the Integrity of Scientific Publication and Medical Knowledge. JAMA 329(8), 637–639. doi:10.1001/jama.2023.1344

- From The Lab Bench (2023). Creative Science Writing in the Age of ChatGPT. From The Lab Bench. Retrieved from http://www.fromthelabbench.com/from-the-lab-bench-science-blog/2023/1/29/creative-science-writing-in-the-age-of-chatgpt

- Gleason, N. (2022). ChatGPT and the rise of AI writers: how should higher education respond? THE — Times Higher Education. Retrieved from https://www.timeshighereducation.com/campus/chatgpt-and-rise-ai-writers-how-should-higher-education-respond

- Goedecke, C., Koester, V. & ChatGPT (2023). Chatting With ChatGPT. ChemViews. doi:10.1002/chemv.202200001

- Goldberg, J. (2023). Artificial Intelligence (AI) & Its Impact on the Sports Industry. Lexology. Retrieved from https://www.lexology.com/library/detail.aspx?g=404ee360-cdc8-4772-900c-eecdca6874b9

- Gravel, J., D’Amours-Gravel, M. & Osmanlliu, E. (n.d.). Learning to fake it: limited responses and fabricated references provided by ChatGPT for medical questions. medRxiv. doi:10.1101/2023.03.16.23286914

- Greussing, E., Taddicken, M. & Baram-Tsabari, A. (2022). Changing Epistemic Roles through Communicative AI. In ICA Science of Science Communication Preconference, 26th–30th May 2022. Paris, France.

- Guzman, A. L. (Ed.) (2018). Human-Machine Communication. New York, U.S.A.: Peter Lang. Retrieved from https://www.peterlang.com/document/1055458

- Guzman, A. L. & Lewis, S. C. (2019). Artificial intelligence and communication: A Human–Machine Communication research agenda. New Media & Society 22(1), 70–86. doi:10.1177/1461444819858691

- Hargittai, E. & Hsieh, Y. P. (2013). Digital Inequality. In W. H. Dutton (Ed.), The Oxford Handbook of Internet Studies (pp. 129–150). doi:10.1093/oxfordhb/9780199589074.013.0007

- Haven, J. (2022). ChatGPT and the future of trust. Nieman Lab. Retrieved from https://www.niemanlab.org/2022/12/chatgpt-and-the-future-of-trust/

- Heaven, W. D. (2022). Why Meta’s latest large language model survived only three days online. MIT Technology Review. Retrieved from https://www.technologyreview.com/2022/11/18/1063487/meta-large-language-model-ai-only-survived-three-days-gpt-3-science/

- Hegelbach, S. (2023). ChatGPT opened our eyes. DIZH. Retrieved from https://dizh.ch/en/2023/03/20/chatgpt-opened-our-eyes/

- Hoes, E., Altay, S. & Bermeo, J. (2023). Using ChatGPT to Fight Misinformation: ChatGPT Nails 72% of 12,000 Verified Claims. PsyArXiv. doi:10.31234/osf.io/qnjkf

- iTechnoLabs (2023). How is ChatGPT Explaining Scientific Concepts? iTechnoLabs. Retrieved from https://itechnolabs.ca/how-is-chatgpt-explaining-scientific-concepts/

- Johnson, A. (2022). Here’s What To Know About OpenAI’s ChatGPT — What It’s Disrupting And How To Use It. Forbes. Retrieved from https://www.forbes.com/sites/ariannajohnson/2022/12/07/heres-what-to-know-about-openais-chatgpt-what-its-disrupting-and-how-to-use-it/

- King, M. (2023). ChatGPT 4 Jailbreak — Step-By-Step Guide with Prompts: MultiLayering technique. Medium. Retrieved from https://medium.com/@neonforge/chatgpt-4-jailbreak-step-by-step-guide-with-prompts-multilayering-technique-ac03d5dd2304

- Knight, W. & Dave, P. (2023). In Sudden Alarm, Tech Doyens Call for a Pause on ChatGPT. Wired. Retrieved from https://www.wired.com/story/chatgpt-pause-ai-experiments-open-letter/

- Koch, M.-C. & Hahn, S. (2023). Visual ChatGPT: Microsoft ergänzt ChatGPT um visuelle KI-Fähigkeiten mit Bildern. Heise. Retrieved from https://www.heise.de/news/Visual-ChatGPT-Microsoft-ergaenzt-ChatGPT-um-visuelle-KI-Faehigkeiten-mit-Bildern-8968047.html

- Kordzadeh, N. & Ghasemaghaei, M. (2021). Algorithmic bias: review, synthesis, and future research directions. European Journal of Information Systems 31(3), 388–409. doi:10.1080/0960085x.2021.1927212

-

Lermann Henestrosa, A. & Kimmerle, J. (2022). The Effects of Assumed Authorship on the Perception of Automated Science Journalism. In ICA Science of Science Communication Preconference, 26th–30th May 2022. Paris, France.

-

Manders-Huits, N. (2011). What Values in Design? The Challenge of Incorporating Moral Values into Design. Science and Engineering Ethics 17(2), 271–287. doi:10.1007/s11948-010-9198-2

-

Mede, N. G. & Schäfer, M. S. (2020). Science-related populism: Conceptualizing populist demands toward science. Public Understanding of Science 29(5), 473–491. doi:10.1177/0963662520924259

-

Mollick, E. (2023a). Bing AI for seminar preparation. Twitter. [@emollick]. Retrieved from https://twitter.com/emollick/status/1630683712739332097

-

Mollick, E. (2023b). One interesting way to see how much more powerful GPT-4 is than GPT-3.5. Twitter. [@emollick]. Retrieved from https://twitter.com/emollick/status/1642532388570910725

-

Mollick, E. (2023c). Some lessons of the insane past 4 days of generative AI. Twitter. [@emollick]. Retrieved from https://twitter.com/emollick/status/1627161768966463488

-

Mollick, E. (2023d). The Machines of Mastery [Substack newsletter]. One Useful Thing. Retrieved from https://oneusefulthing.substack.com/p/the-machines-of-mastery

-

Mu, K. (2023). Generative AI Companies with >5MM raised (as of March 2023). LinkedIn. Retrieved from https://www.linkedin.com/feed/update/urn:li:activity:7043395787585712128

-

Murgia, M. (2023). Risk of ‘industrial capture’ looms over AI revolution. Financial Times. Retrieved from https://www.ft.com/content/e9ebfb8d-428d-4802-8b27-a69314c421ce

-

Myklebust, J. P. (2023). Universities adjust to ChatGPT, but the ‘real AI’ lies ahead. University World News. Retrieved from https://www.universityworldnews.com/post.php?story=20230301105802395

-

Nash, B. (2023). With the help of ChatGPT 4 I’m coding a new side scroller game for the browser. Twitter. [@bennash]. Retrieved from https://twitter.com/bennash/status/1640226129729536000

-

Neff, G. & Nagy, P. (2016). Automation, Algorithms, and Politics| Talking to Bots: Symbiotic Agency and the Case of Tay. International Journal of Communication 10, 4915–4931. Retrieved from https://ijoc.org/index.php/ijoc/article/view/6277

-

Nerlich, B. (2023). Artificial Intelligence: Education and entertainment. Making Science Public. Retrieved from https://blogs.nottingham.ac.uk/makingsciencepublic/2023/01/06/artificial-intelligence-education-and-entertainment/

-

Nicolaou, A. (2023). Streaming services urged to clamp down on AI-generated music. Financial Times. Retrieved from https://www.ft.com/content/aec1679b-5a34-4dad-9fc9-f4d8cdd124b9

-

Pavlik, J. V. (2023). Collaborating With ChatGPT: Considering the Implications of Generative Artificial Intelligence for Journalism and Media Education. Journalism & Mass Communication Educator 78(1), 84–93. doi:10.1177/10776958221149577

-

Peng, S., Kalliamvakou, E., Cihon, P. & Demirer, M. (2023). The Impact of AI on Developer Productivity: Evidence from GitHub Copilot. doi:10.48550/ARXIV.2302.06590. arXiv:2302.06590

-

Rahwan, I., Cebrian, M., Obradovich, N., Bongard, J., Bonnefon, J.-F., Breazeal, C., … Wellman, M. (2019). Machine behaviour. Nature 568(7753), 477–486. doi:10.1038/s41586-019-1138-y

-

Richter, V., Katzenbach, C. & Schäfer, M. S. (2023). Imaginaries of Artificial Intelligence. In S. Lindgren (Ed.), Handbook of Critical Studies of Artificial Intelligence. Edward Elgar Publishing. Retrieved from https://www.e-elgar.com/shop/gbp/handbook-of-critical-studies-of-artificial-intelligence-9781803928555.html

-

Rutjens, B. T., Heine, S. J., Sutton, R. M. & van Harreveld, F. (2018). Attitudes Towards Science. Advances in Experimental Social Psychology, 125–165. doi:10.1016/bs.aesp.2017.08.001

-

Sarraju, A., Bruemmer, D., Iterson, E. V., Cho, L., Rodriguez, F. & Laffin, L. (2023). Appropriateness of Cardiovascular Disease Prevention Recommendations Obtained From a Popular Online Chat-Based Artificial Intelligence Model. JAMA 329(10), 842–844. doi:10.1001/jama.2023.1044

-

Satariano, A. (2023). ChatGPT Is Banned in Italy Over Privacy Concerns. The New York Times. Retrieved from https://www.nytimes.com/2023/03/31/technology/chatgpt-italy-ban.html

-

Schäfer, M. S. (2017). How Changing Media Structures Are Affecting Science News Coverage. In K. H. Jamieson, D. M. Kahan & D. A. Scheufele (Eds.), The Oxford Handbook of the Science of Science Communication (pp. 50–59). doi:10.1093/oxfordhb/9780190497620.013.5

-

Schäfer, M. S., Kristiansen, S. & Bonfadelli, H. (2015). Wissenschaftskommunikation im Wandel. Herbert von Halem Verlag.

-

Schäfer, M. S. & Metag, J. (2021). Routledge handbook of public communication of science and technology. In M. Bucchi & B. Trench (Eds.), Routledge handbook of public communication of science and technology (3rd ed., pp. 291–304). New York, NY, U.S.A.: Routledge.

-

Schäfer, M. S. & Wessler, H. (2020). Öffentliche Kommunikation in Zeiten künstlicher Intelligenz. Publizistik 65(3), 307–331.

-

Seaver, N. (2019). Knowing Algorithms. In J. Vertesi & D. Ribes (Eds.), digitalSTS (pp. 412–422). doi:10.1515/9780691190600-028

-

Shabanov, I. (2023). There is a new AI search engine ideal for researchers: Perplexity. Twitter. Retrieved from https://twitter.com/Artifexx/status/1645303838595858432?s=20

-

Stokel-Walker, C. & Van Noorden, R. (2023). What ChatGPT and generative AI mean for science. Nature 614(7947), 214–216. doi:10.1038/d41586-023-00340-6

-

synthesia (2023). GPT-3 Video Generator | Create Videos in 5 Minutes. synthesia. Retrieved from https://www.synthesia.io/tools/gpt-3-video-generator

-

Thorp, H. H. (2023). ChatGPT is fun, but not an author. Science 379(6630), 313–313. doi:10.1126/science.adg7879

-

Ulken, E. (2022). Generative AI brings wrongness at scale. Nieman Lab. Retrieved from https://www.niemanlab.org/2022/12/generative-ai-brings-wrongness-at-scale/

-

Wardle, C. (2018). The Need for Smarter Definitions and Practical, Timely Empirical Research on Information Disorder. Digital Journalism 6(8), 951–963. doi:10.1080/21670811.2018.1502047

-

Wesche, J. (2023). Dr. Julius Wesche auf LinkedIn: #chatgpt #openai. LinkedIn. Retrieved from https://www.linkedin.com/posts/juliuswesche_chatgpt-openai-activity-7016341359661879296-ROCy

Author

Dr. Mike S. Schäfer is a full professor of science communication, Head of Department at IKMZ (the Dept. of Communications and Media Research) and director of the Center for Higher Education and Science Studies (CHESS) at the University of Zurich.

@mss7676 E-mail: m.schaefer@ikmz.uzh.ch