1 Introduction

In the past two decades, citizen science has been increasingly recognised as a valuable approach across a variety of scientific fields [Kullenberg and Kasperowski, 2016; Pettibone, Vohland and Ziegler, 2017]. The wider spread of citizen science has been supported by technological developments, such as smartphone apps and online platforms, which have made it easier to expand the geographical scope of projects and to involve large numbers of participants [Silvertown, 2009; Newman, Wiggins et al., 2012; Bonney, Shirk et al., 2014; Wynn, 2017]. However, interaction and communication change with the use of digital technologies, and these specifics have to be addressed during the development of digital tools and during project execution [Raddick et al., 2010; Curtis, 2015; Land-Zandstra et al., 2016; Jennett, Kloetzer et al., 2016; Kloetzer, Da Costa and Schneider, 2016; Preece, 2016; Crall et al., 2017].

2 User-centred design

Perceived usefulness and user-friendliness are crucial factors for acceptance of a technology [Bagozzi, Davis and Warshaw, 1992 ]. The relationship between people and computers and techniques for the design of usable technology are studied in the interdisciplinary field of human-computer interaction (HCI) [Sellen et al., 2009 ]. User-centred design (UCD) is the most widespread methodology and philosophy of the four main approaches in HCI to design interactive digital products and improve the quality of interaction [Vredenburg et al., 2002 ; Haklay and Nivala, 2010 ]. UCD enables the creation of useful and usable products by significantly involving users throughout an iterative software development process. The approach focuses on the users’ needs, wants and goals, as well as their perception and responses before, during and after using a product, system or service [Gould and Lewis, 1985 ; International Organization for Standardization, 2010 ; Preece, Rogers and Sharp, 2001 ]. The main principles of human-centred design are described in the standard ISO 9241-210 ‘Human-centred design for interactive systems’ as follows: 1) understanding of user needs and context of use; 2) involvement of users; 3) evaluation by users; 4) iteration of development solutions; 5) addressing the whole user experience; 6) multi-disciplinary design team. Techniques for applying UCD range widely and depend on the purpose and the stage of design [Preece, Rogers and Sharp, 2001 ; Gulliksen, Göransson et al., 2003 ].

The importance of considering and addressing the needs of users often only becomes clear when errors in design are costly and highly visible, for example when a product is rejected by users [Schaffer, 2004]. But smaller, hidden design failures also frustrate users and decrease their productivity, which can lead to financial loss over time [Haklay and Nivala, 2010]. Many usability issues could be avoided by applying a UCD approach. Despite the advantages of UCD, however, organisational practices can interfere with its implementation [Gulliksen, Boivie et al., 2004]. For instance, projects very often have to be completed under great time pressure, and product development is often restricted in time and resources, including trained staff. User involvement is therefore often limited by the amount of time available and further constrained by a lack of available expertise to implement UCD adequately [Bak et al., 2008].

3 User-centred design in Citizen Science

The concept of UCD has been emphasised in several guidelines as an approach to address challenges arising from the use of digital technologies in citizen science [Jennett and Cox, 2014 ; Preece and Bowser, 2014 ; Yadav and Darlington, 2016 ; Sturm et al., 2018 ]. Well-designed technology can attract more people to get involved with citizen science, foster retention and encourage community development [Eveleigh et al., 2014 ; Wald, Longo and Dobell, 2016 ; Preece, 2016 ; Preece, 2017 ]. Involvement of volunteers is a substantial part of citizen science and often discussed in terms of the level of collaboration and participation [Bonney, Cooper et al., 2009 ; Shirk et al., 2012 ; Haklay, 2013 ]. Thus, in the context of citizen science, the role of users in UCD becomes even more important.

However, even though there is awareness of the importance of UCD in the citizen science community, little research and few case studies addressing the specific requirements of UCD in citizen science have been published. Exceptions include Traynor, Lee and Duke [2017], who presented two case studies testing the usability of two citizen science applications. They found that both projects were able to identify improvements based on UCD. They emphasised that the number of participants in user tests depends on the stage of development and that a higher number of participants is needed at later stages. Additionally, citizen science projects often target a large and diverse group of participants. Individual users not only have different user experiences and concepts of how features should work, but also have varying expectations of how the system should evolve [Seyff, Ollmann and Bortenschlager, 2014; Preece, 2016]. Therefore, extensive user feedback is needed.

Newman, Zimmerman et al. [ 2010 ] gave insights into UCD in citizen science by showing the evaluation and improvement of a geospatially enabled citizen science website, and providing guidelines to improve such applications. Bowser et al. [ 2013 ] introduced PLACE, an iterative, mixed-fidelity approach for prototyping location-based applications and games and showed its implementation by prototyping a geocaching game for citizen science. They found that for location-based applications, most user testing techniques do not test the whole user experience with its spatial, temporal and social perspective. For instance, user testing techniques such as focus groups [Krueger, 1994 ], thinking out aloud [Frommann, 2005 ], and shadowing [Ginsburg, 2010 ] are of limited use due to their restriction to controlled, predefined, lab-like situations. Additional evaluation in a real-world context is required. Kjeldskov et al. [ 2005 ] studied methods for evaluating mobile guides and advocated in-situ data collection to understand mobile use better.

4 User feedback in user-centred design

In human-computer interaction, feedback often refers to the communication from the application to the user [Shneiderman, 1987], whereas user feedback is described as information users provide about their perception of the quality of a product [Smith, Fitzpatrick and Rogers, 2004]. User perception may vary, as it is influenced by the users’ expectations [Szajna and Scamell, 1993] and the duration of use [Karapanos, 2013]. User feedback can be obtained in written formats, e.g. questionnaires [Froehlich et al., 2007] or comments [Pagano and Maalej, 2013], and in verbal formats, e.g. interviews [Ahtinen et al., 2009].

Smith, Fitzpatrick and Rogers [ 2004 ] distinguish two types of user feedback in UCD. They define the users’ immediate reaction and opinions regarding the prototypes’ look and feel as reactive feedback. Feedback expressing a deeper understanding of the context of the use with its spatial, temporal and social perspective is defined as reflective feedback. Also, the approach of crowdsourced design is mostly based on an expert’s perspective, and users are invited to express their opinion and suggest changes [Grace et al., 2015 ]. In comparison, participatory design acknowledges users shaping the development of technology with their ideas, proposals, and solutions as an active partnership [Schuler and Namioka, 1993 ; Sanders and Stappers, 2008 ]. In recent years, these approaches started influencing one another [Sanders and Stappers, 2008 ]. A study by Valdes et al. [ 2012 ] demonstrated that a combination of user-centred and participatory design methods helps to address the complexity of citizen science projects.

In UCD, users are consulted in every stage of the development. The users’ involvement is mostly limited to the opportunity to provide feedback in predefined testing situations and concepts that were created by others [Sanders and Stappers, 2008 ]. Fotrousi, Fricker and Fiedler [ 2017 ] showed that users must be able to provide feedback in a way that is intuitive and desired by the user, especially in regard to the timing and content parameters of a feedback form. Special mobile feedback apps [Seyff, Ollmann and Bortenschlager, 2014 ], application distribution platforms (Google Play Store, App Store) [Pagano and Maalej, 2013 ], email, and citizen science platform forums [Scanlon, Woods and Clow, 2014 ; Woods, McLeod and Ansine, 2015 ] have proven to be useful sources for user feedback, which is characterised by on-site use. But to the best of our knowledge, there is no study of digital user feedback in a user-centred development process that also addresses the specific requirements of citizen science.

5 Objectives of the study

The aim of this paper is to give a short outline of user-centred design in citizen science using the example of the mobile app “Naturblick” and to discuss digital user feedback as part of the process with regard to the users’ involvement. We analysed levels of involvement in digitally provided user feedback and looked into differences between the communication channels used to provide it. In doing so, we provide insights and directions for future mobile app development in citizen science.

In this publication, we focus only on the users who participated in the development process by providing some type of feedback. We define them as “participants” and therefore use the terms “user” and “participant” interchangeably.

6 Developing Naturblick

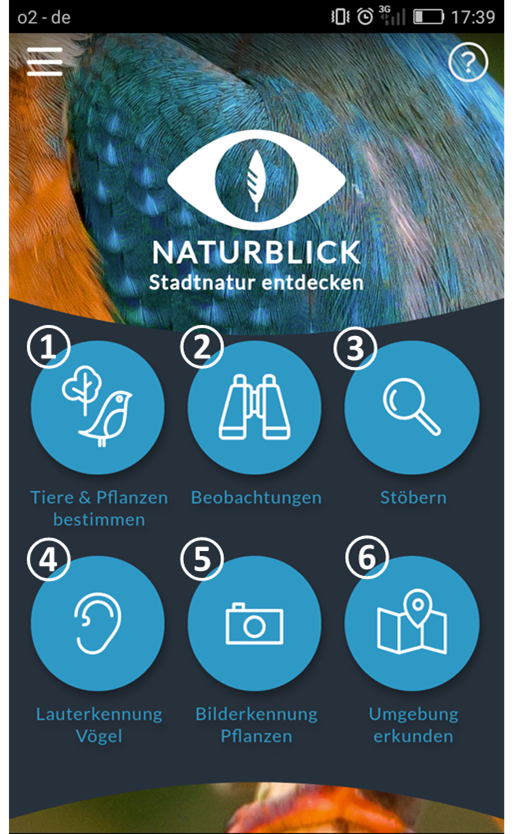

The smartphone app “Naturblick” (nature view) was developed as part of the research project “Stadtnatur entdecken” (discovering urban nature) at the Museum für Naturkunde Berlin (MfN). The aim of the project was to explore possibilities to communicate educational content on urban nature to the target group of young adults (age 18 to 30) and to engage them in citizen science. The initial focus was to develop a mobile app that supports participants in their curiosity about nature by helping them to identify animals and plants at any time and in any context. In the second step, the app was developed further as an integrative tool for environmental education and citizen science.

Naturblick combines several tools to support users in identifying animals and plants and sharing their observations and recordings. It consists of six main features: 1) multi-access identification keys for animals and plants; 2) a field book for observations; 3) species descriptions; 4) a sound recognition tool for bird vocalizations; 5) an image recognition tool for plants; and 6) a map that highlights urban nature sites in Berlin and the diversity of species to be found (Figure 1 ).

The app development was based on the approach of an agile user-centred design following Chamberlain, Sharp and Maiden [ 2006 ] and Sy [ 2007 ]. It was conducted by a multidisciplinary team of specialists in biology, informatics, and social sciences. The initial phase of the app development was based on a market analysis. Additionally, a better understanding of the user needs was established by creating personas, scenarios and use cases [Cooper, 2004 ]. In order to involve as many people as possible, Naturblick does not require registration and thus does not collect personal data. So far, Naturblick is limited to the German language and was promoted with Berlin as the geographic target. Despite these limitations, the use of the app is constantly increasing.

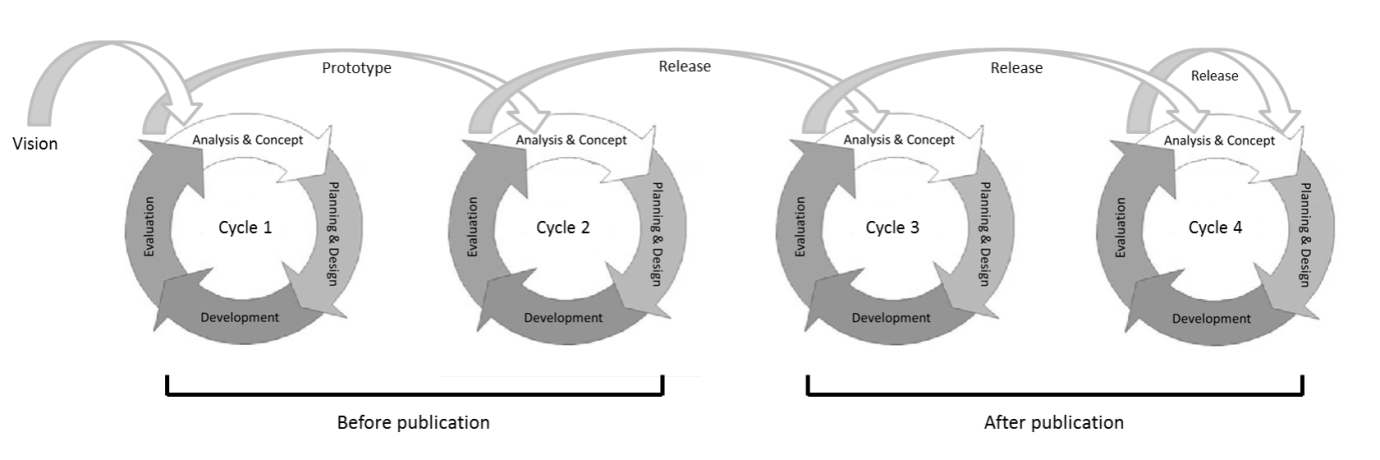

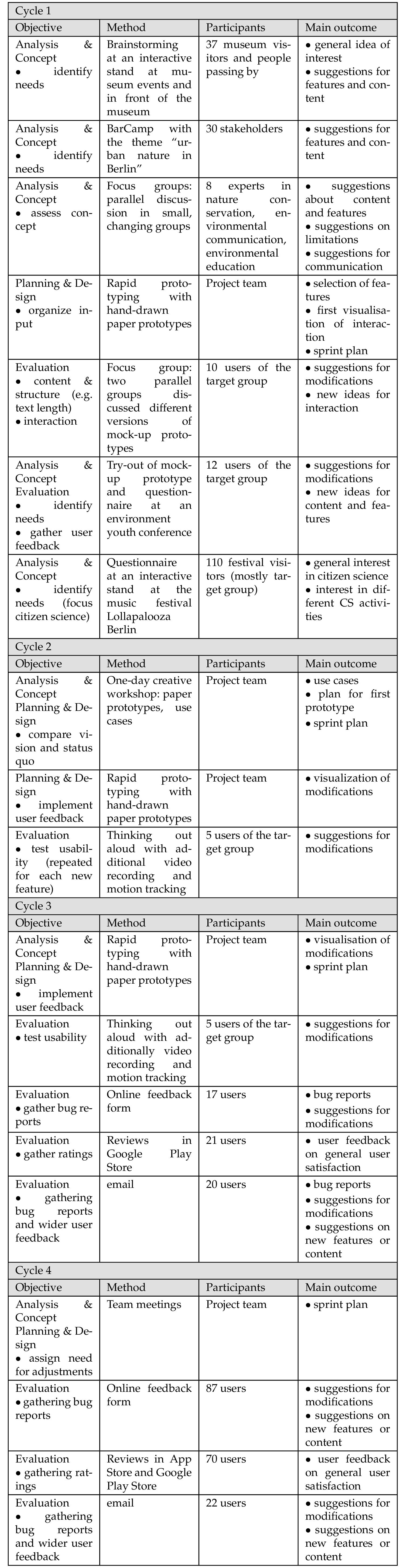

The agile user-centred process was divided into four iterative cycles (Figure 2 ). Each cycle was composed of four main stages: 1) analysis and concept; 2) planning and design; 3) development; and 4) evaluation. Each of these cycles had several rapid iterations, which allowed short-term planning and fast adjustments to new insights [Chamberlain, Sharp and Maiden, 2006 ; Sy, 2007 ]. Based on the prototypes and test results, the development was moved into the next cycle, and methods were adapted (Table 1 ). Not all features were implemented at the same time, as some underwent the development process with different time schedules. After prioritising in Cycle 1, the development of two main features was delayed. Different cycles were executed at the same time.

In Cycle 1, the main focus was on the analysis and the establishment of a concept (Table 1). For the early testing stages, mock-up prototypes were discussed by focus groups. Focus groups are discussion groups, compiled on the basis of specific criteria, such as age, gender and educational background, to generate ideas, evaluate concepts, review ideas and gather information on the motivations and backgrounds of target groups [Krueger, 1994; Morgan, 1997]. In Cycle 2, several prototypes were built and iteratively tested by the target group. The participants solved a task with a prototype. During that task, they thought out loud and were monitored by video recording and motion tracking. This method was used to test the usability of the app based on prototypes and to gain insights into the thinking processes of the participants; it also enhanced interaction [Frommann, 2005; Häder, 2015]. In Cycle 3, the app was released for Android without much publicity in order to evaluate it in a real-world context. Overall, 58 feedback comments were collected via an online feedback form, email and the application distribution platforms (Table 1). In Cycle 4, the app was released officially for Android and iOS. Further user feedback was collected to ensure that the app can be continuously improved and maintained to adapt to user expectations as they change over time [Bennett and Rajlich, 2000].

7 Methods

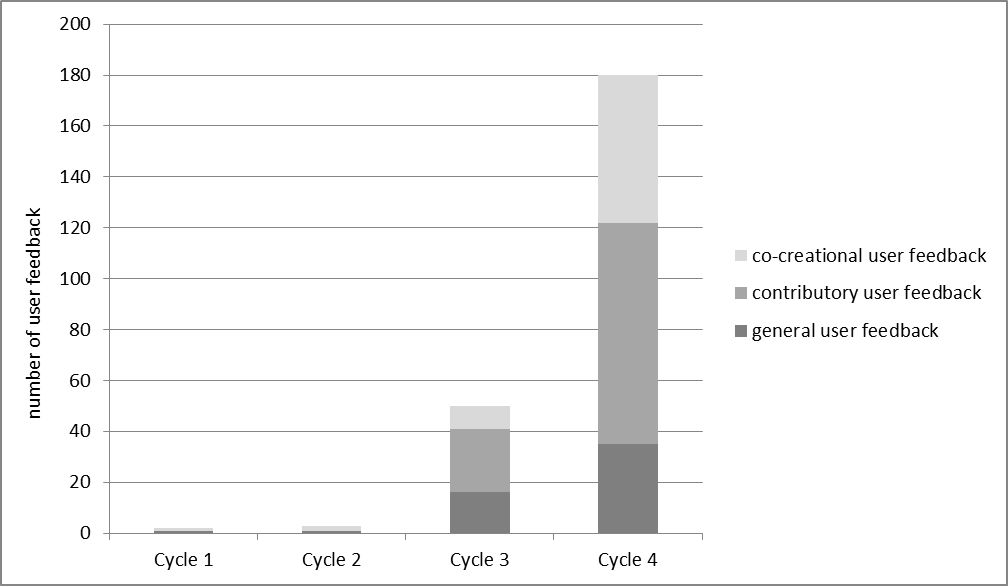

Throughout the whole development process, user feedback was collected from the beginning of April 2015 onwards. Users and the general public were regularly approached via national and local radio interviews, a local television report, national and local newspaper articles, press releases and social media, and were encouraged to give feedback. Furthermore, people at events were asked to send their feedback digitally. Between April 2015 and September 2017, the mobile app was downloaded 47,102 times and 240 initial feedback comments were provided via email, feedback forms and application distribution platforms, whereas only five feedback comments were provided in Cycles 1 and 2.

Users were mainly asked to provide ideas and feedback via a feedback form, which is implemented in the mobile app and on the project homepage. The form consists of six items: categories of user feedback (e.g. bug report, question, and suggestion), device type and version, app information, a free text field, and contact information for further inquiry. Additionally, people are asked to send emails to the project’s email address, which can be found in the app and on the project homepage. Moreover, users rate and review the app on the application distribution platforms (Google Play Store and App Store).
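To make the structure of the feedback form more concrete, a single submission could be modelled roughly as follows. This is only an illustrative sketch and not the Naturblick implementation: the class and field names are hypothetical, splitting "device type and version" into two fields is one possible reading of the six items, and treating the contact field as optional is an assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FeedbackCategory(Enum):
    """Example categories named in the text; the real form may offer others."""
    BUG_REPORT = "bug report"
    QUESTION = "question"
    SUGGESTION = "suggestion"


@dataclass
class FeedbackFormEntry:
    """One submitted feedback form (hypothetical field names)."""
    category: FeedbackCategory
    device_type: str          # e.g. phone model
    device_version: str       # e.g. operating system version
    app_info: str             # e.g. app version
    free_text: str            # the actual comment
    contact: Optional[str] = None  # assumption: contact details are optional

    def has_comment(self) -> bool:
        """A submission is only useful for analysis if the free text is not empty."""
        return bool(self.free_text.strip())


# Hypothetical example submission (not taken from the study data).
entry = FeedbackFormEntry(
    category=FeedbackCategory.BUG_REPORT,
    device_type="example phone",
    device_version="Android 7.0",
    app_info="app version 1.0",
    free_text="The app takes a long time to start.",
)
```

A structured record of this kind is easy to sort by category or device, which fits the observation that the form was designed to collect structured bug reports.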

Pursuant to the guidelines for ethical internet research, no individual participants were analysed, and the content was paraphrased, if it was not publicly posted on the application distribution platforms [Ess and Association of Internet Researchers (AoIR), 2002 ]. No personal information of the participants was collected. User feedback gained during events and user tests was not included in the study. User feedback given in response to further questions from the project team was not included.

The user feedback was analysed using qualitative content analysis to classify the user feedback with regard to the level of involvement [Mayring, 2010 ; Zhang and Wildemuth, 2009 ]. The coding categories were based on the level of participation for public participation in scientific research, as defined by Shirk et al. [ 2012 ]. They defined the degree of collaboration by the extent of participants’ involvement in the process of scientific research and distinguished between five models: contractual projects, contributory projects, collaborative projects, co-created projects, and collegial projects. The definition was adapted to UCD in citizen science. Initially, three categories for the level of involvement were established: contributory involvement, in which users primarily provide information about the software; collaborative involvement, in which users contribute information about the software, help to refine the design and improve features; co-creational involvement, in which users are actively involved in the conceptual and design process. Additionally, the level of involvement was linked to the types of user feedback as outlined by Smith, Fitzpatrick and Rogers [ 2004 ] and to the role of the user as outlined by Sanders and Stappers [ 2008 ].

The data was analysed by two researchers. They coded each feedback comment independently and then discussed and adapted the coding categories to achieve a mutual understanding and sufficient coding consistency. The categories were not mutually exclusive, as participants combined different themes and levels of involvement in one comment. Therefore, when different categories were expressed in the same comment, the category with the highest expressed level of involvement was coded. The coding resulted in three user feedback types, which were analysed in terms of frequency for each development cycle. Furthermore, the three communication channels were compared with regard to the user feedback type.
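The coding rule and the frequency analysis described above can be sketched in a few lines of Python. The sketch assumes the three feedback types reported in the Results (general, contributory, co-creational) ordered from lowest to highest involvement; the function names and the toy records are hypothetical and do not reproduce the study data or the tools the authors used.

```python
from collections import Counter
from enum import IntEnum
from typing import Iterable, Optional


class Involvement(IntEnum):
    """Feedback types ordered by level of involvement (lowest to highest)."""
    GENERAL = 1
    CONTRIBUTORY = 2
    CO_CREATIONAL = 3


def code_comment(expressed: Iterable[Involvement]) -> Optional[Involvement]:
    """Code a comment with the highest level it expresses.

    Returns None for comments that express no level at all, for example
    comments that are not linked to the development process.
    """
    levels = list(expressed)
    return max(levels) if levels else None


def count_by(records, key):
    """Count coded feedback types per value of `key` (e.g. cycle or channel)."""
    counts = {}
    for record in records:
        level = code_comment(record["expressed"])
        if level is None:
            continue  # uncoded comments are left out of the frequencies
        counts.setdefault(record[key], Counter())[level.name] += 1
    return counts


# Hypothetical toy records, for illustration only.
records = [
    {"cycle": 3, "channel": "feedback form", "expressed": [Involvement.CONTRIBUTORY]},
    {"cycle": 4, "channel": "app store", "expressed": [Involvement.GENERAL]},
    {"cycle": 4, "channel": "feedback form",
     "expressed": [Involvement.GENERAL, Involvement.CO_CREATIONAL]},
    {"cycle": 4, "channel": "email", "expressed": []},
]

by_cycle = count_by(records, "cycle")
by_channel = count_by(records, "channel")
```

Using an ordered enumeration keeps the "highest level wins" rule explicit and lets the same counting helper serve both the per-cycle and the per-channel comparison.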

8 Results

In their digital feedback, users showed three levels of involvement. The user feedback was categorised as: general user feedback, contributory user feedback and co-creational user feedback. The three types are described in Table 2 . Users who gave general user feedback expressed the lowest level of involvement and gave reactive user feedback. A higher involvement in shaping the development process was expressed by users who provided contributory user feedback. They took part in improving existing features through reactive user feedback, such as reporting bugs. The highest level of involvement was shown by users who gave co-creational user feedback. They also showed an understanding of the context of the use and provided reflective user feedback on their own initiative.

Five feedback comments were not assigned to one of the three types because they were not linked to the development process. These users reported species observations (“I saw black terns.”) or asked for support in identifying species (“….attached a photo. What kind of butterfly is it?”), and one user asked to include humans, which appeared to be a joke: “I was not able to find anything in the application for the following features: […] has 2 legs, […] lives in rocks with various caves …”.

Overall, 0.5% of the people who downloaded the app provided feedback, and the majority of user feedback (98%) was received after the release of the mobile app. As can be seen in Figure 3, user feedback was mostly given in Cycle 4. In the cycles before the release of the app, users mostly expressed co-creational user feedback on the overall concept. One participant even provided a market analysis of other apps and technical concepts: “…currently available bird sound recognition APPs have several disadvantages […]. Since mobile phone recorders are only moderately good, I think it would be great, if one could also upload recordings into your app made with an external voice recorder. This feature should be easily integrated, since you could just access a different destination folder…”.
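The two percentages above can be reproduced from the figures given in the Methods section (47,102 downloads, 240 feedback comments, five of them in Cycles 1 and 2). The following short calculation is a sketch under two assumptions that the text does not state explicitly: that each comment and each download stands for one person, and that the five early comments are included in the 240.

```python
# Figures reported in the Methods section.
downloads = 47_102
comments_total = 240
comments_before_release = 5  # Cycles 1 and 2; assumed to be part of the 240

# Share of downloads that led to a feedback comment.
feedback_rate = comments_total / downloads * 100

# Share of comments received after the release of the app.
share_after_release = (
    (comments_total - comments_before_release) / comments_total * 100
)

print(f"{feedback_rate:.1f}%")        # 0.5%
print(f"{share_after_release:.0f}%")  # 98%
```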

In both cycles, after releasing the app, user feedback was mainly contributory (Cycle 3: 49%, Cycle 4: 48%) and there was no difference in themes between both cycles. Users referred mostly to device compatibility, bug reports, and usability concerns. For example, one user reported: “ The app is great. Unfortunately, it takes 70 seconds to start. After this time, the bird I wanted to identify via sound recognition is often already gone. ”

The amount of co-creational user feedback increased in Cycle 4. Almost a third of the users (32%) made suggestions for improvement, suggested additional content or proposed new features. While a variety of themes were covered, there were also frequently repeated topics, such as “…A geographical display of the field book would be a useful extension…” and “…I hope the app will be extended … with even more pictures of each plant, because one picture is often not enough, depending on the season.”. Other co-creational user feedback pointed to user groups that were not the initial target group but appeared to be frequent users. One example of such a new user group was people who are already engaged in participatory observation networks. These people saw the chance to connect the app to existing citizen science projects: “I miss a function to transfer my recording, so I could insert the recording directly into my observation network….”.

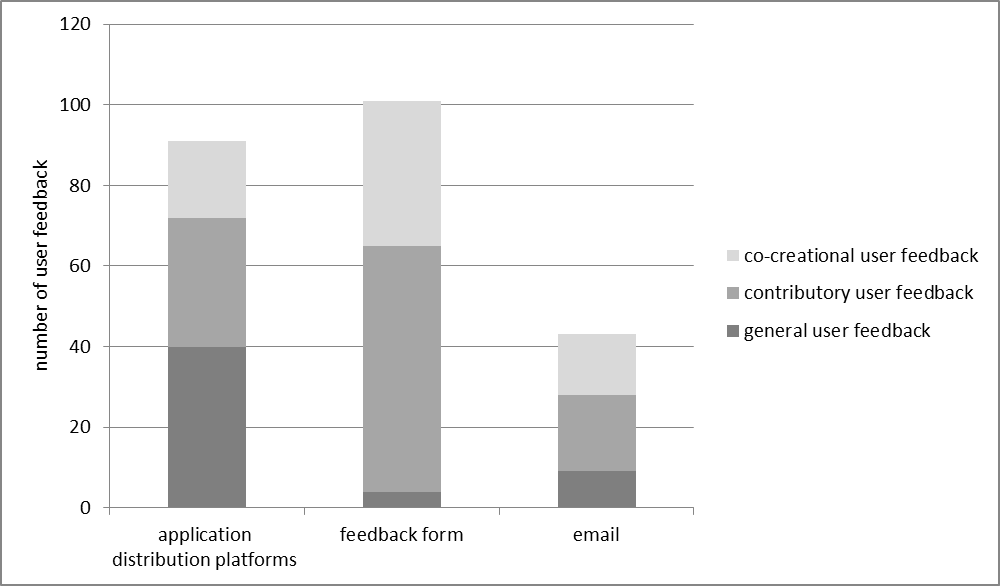

Figure 4 shows that participants most commonly used the feedback form, and that email was used least. Notably, 75% of all general user feedback was provided via the App Store and Google Play Store. The application distribution platforms were also often used to give other users advice for or against downloading the app. Nevertheless, comments were also directed towards the developers to provide contributory and even co-creational user feedback. In contrast, almost no general user feedback was sent via the feedback form (4%), which was used mostly for contributory user feedback (60%). Although co-creational user feedback was given via all communication channels, about half of it was provided via the feedback form (51%).

9 Discussion

In citizen science, user-centred design is recognised for its potential to involve participants in the development of technology, address different types of volunteers, and develop successful tools [e.g. Newman, Zimmerman et al., 2010; Sturm et al., 2018]. But with the user-centred development of Naturblick, we experienced the limitations of involving participants meaningfully in the development process because of the controlled UCD usability testing situations. For instance, the user tests of the audio pattern recognition feature revealed no usability problems, but participants who used the app in a real-world scenario found that the app started too slowly for them to be able to record a bird before it flew away. Also, the conventional focus on usability often ignores features that address a volunteer’s motivation [Wald, Longo and Dobell, 2016]. Thus, we found that a combination of UCD with more participatory design approaches would fit the requirements of technical development for citizen science. These experiences are consistent with reflections on the role of users in UCD [Smith, Fitzpatrick and Rogers, 2004; Sanders and Stappers, 2008]. Along the same lines, a combination of UCD and participatory design seems more suitable to address the complex relation between technology, participants and the intricate tasks and themes of citizen science [Valdes et al., 2012].

The study highlights the potential of digitally provided user feedback to give users more influence and room for initiative in UCD processes in citizen science. The digital user feedback showed three levels of involvement. Interestingly, users showed either a relatively low level of participation or a high level of involvement. On the one hand, users frequently provided general user feedback which implied low involvement. This user feedback is more akin to the concept of the user as a consumer than to the model of collaboration defined by Shirk et al. [ 2012 ]. On the other hand, users provided co-creational user feedback, which revealed very high involvement. This might be explained by the findings of Smith, Fitzpatrick and Rogers [ 2004 ], who emphasise that using software repeatedly motivates participants to engage on a deeper level and enables them to provide reflective user feedback. These findings suggest that individual digital feedback may act as a link between traditional UCD and participatory design. With individual digital feedback, the users can take an active role by evaluating the technology based on their priorities, motivation and time schedule and in real-life contexts. But this change of roles remains limited by the developers’ commitment to engage intensively in the culture of collaboration. For instance, citizen science activities often have predefined goals, and scientists often struggle to accept that the public can make an actual contribution [Golumbic et al., 2017 ].

Additionally, digital user feedback provides the opportunity to address important challenges for human-computer interaction in citizen science, such as supporting different types of volunteers [Preece, 2016]. We found that traditional user tests not only limit the number of participants but often involve only specific groups of people, such as students and people who visit museum events, as in our case. Six times as many users were involved through digital user feedback as through focus groups and thinking out aloud, techniques that are often suggested for involving users in UCD [e.g. by Preece, Rogers and Sharp, 2001]. Robson et al. [2013] also demonstrate that digital two-way communication for social networks has the potential to overcome these restrictions and increase the number of participants.

Types of user feedback were not associated with specific development cycles. Nonetheless, different types of user feedback were considered by the project team as useful depending on the stage of development. At an early stage of development, user involvement in analysis and concept can be co-creational. In later stages, contributory user feedback can support the development by detecting bugs quickly without the high investment of user tests. Furthermore, general user feedback at later stages of development may act as a source to measure UCD effectiveness. Additionally, the ongoing user feedback in Cycle 4 provides information about changing user needs and prevents volunteers from dropping out [Aristeidou, Scanlon and Sharples, 2017 ]. These findings are consistent with previous studies which found that different types of user feedback are perceived as useful depending on the development stage and type of input [Vredenburg et al., 2002 ; Hornbæk and Frøkjær, 2005 ].

We found that the application distribution platforms were the most common channel for general user feedback. Users often direct their feedback towards each other to give general advice, e.g. whether it is worthwhile downloading the app. This interaction, along with the potential of application distribution platforms for user feedback in UCD, was also found by Pagano and Maalej [2013]. In contrast, user feedback via private communication channels was more often contributory and co-creational. The structured feedback form in particular yielded the highest share of contributory user feedback and the most responses overall. This can be explained by the design of the form, which was made to collect structured and easily analysed bug reports.

Our results are only informative. The findings only describe one UCD process in citizen science and cannot be generalised. We were also unable to analyse who is providing user feedback digitally and what motivation they had to do so. Therefore, further investigation about the context and user motivation is needed to better understand user involvement via digital user feedback. However, this study does provide insights into UCD for app development in citizen science. It adds to existing UCD techniques and provides directions to involve numerous participants with relatively low costs.

10 Conclusion

Based on our results, we conclude that a mix of online and offline communication, combining digitally provided user feedback with traditional user tests, helps to increase participation and best suits the needs of citizen science projects. Digital user feedback can link UCD techniques with more participatory design approaches. Therefore, we recommend starting to implement digital user feedback at a very early stage. We also recommend experimenting with communication channels, including social media, and adapting techniques depending on experiences, project cycles, and the desired type of user feedback. We emphasise that the categorisation of user feedback types does not indicate the quality or usefulness of the user feedback.

In future studies, we plan to investigate the motivation and reason for users to provide feedback digitally in citizen science. Furthermore, we would like to study user feedback in combination with analytics data on app use. We hope this will allow us to better understand how to support and effectively use this form of communication in UCD.

Acknowledgments

We would like to thank our participants.

This publication was written as part of the project “Stadtnatur entdecken”, funded by the Federal Ministry of the Environment, Nature Conservation and Nuclear Safety (BMU).

References

-

Ahtinen, A., Mattila, E., Vaatanen, A., Hynninen, L., Salminen, J., Koskinen, E. and Laine, K. (2009). ‘User experiences of mobile wellness applications in health promotion: user study of Wellness Diary, Mobile Coach and SelfRelax’. In: Proceedings of the 3d International ICST Conference on Pervasive Computing Technologies for Healthcare . London, U.K.: IEEE, pp. 1–8. https://doi.org/10.4108/icst.pervasivehealth2009.6007 .

-

Aristeidou, M., Scanlon, E. and Sharples, M. (2017). ‘Design processes of a citizen inquiry community’. In: Citizen inquiry: synthesising science and inquiry learning. Ed. by C. Herodotou, M. Sharples and E. Scanlon. Abingdon, U.K.: Routledge, pp. 210–229. https://doi.org/10.4324/9781315458618-12 .

-

Bagozzi, R. P., Davis, F. D. and Warshaw, P. R. (1992). ‘Development and test of a theory of technological learning and usage’. Human Relations 45 (7), pp. 659–686. https://doi.org/10.1177/001872679204500702 .

-

Bak, J. O., Nguyen, K., Risgaard, P. and Stage, J. (2008). ‘Obstacles to usability evaluation in practice’. In: Proceedings of the fifth Nordic conference on Human-computer interaction building bridges — NordiCHI ’08 (Lund, Sweden). New York, U.S.A.: ACM Press, pp. 23–32. https://doi.org/10.1145/1463160.1463164 .

-

Bennett, K. H. and Rajlich, V. T. (2000). ‘Software maintenance and evolution: a roadmap’. In: Proceedings of the conference on the future of software engineering — ICSE ’00 . New York, U.S.A.: ACM Press, pp. 73–87. https://doi.org/10.1145/336512.336534 .

-

Bonney, R., Cooper, C. B., Dickinson, J., Kelling, S., Phillips, T., Rosenberg, K. V. and Shirk, J. (2009). ‘Citizen Science: a Developing Tool for Expanding Science Knowledge and Scientific Literacy’. BioScience 59 (11), pp. 977–984. https://doi.org/10.1525/bio.2009.59.11.9 .

-

Bonney, R., Shirk, J. L., Phillips, T. B., Wiggins, A., Ballard, H. L., Miller-Rushing, A. J. and Parrish, J. K. (2014). ‘Citizen science. Next steps for citizen science’. Science 343 (6178), pp. 1436–1437. https://doi.org/10.1126/science.1251554 . PMID: 24675940 .

-

Bowser, A. E., Hansen, D. L., Raphael, J., Reid, M., Gamett, R. J., He, Y. R., Rotman, D. and Preece, J. J. (2013). ‘Prototyping in PLACE: a scalable approach to developing location-based apps and games’. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems — CHI ’13 . New York, U.S.A.: ACM Press, pp. 1519–1528. https://doi.org/10.1145/2470654.2466202 .

-

Chamberlain, S., Sharp, H. and Maiden, N. (2006). ‘Towards a framework for integrating agile development and user-centred design’. In: Extreme programming and agile processes in software engineering. Proceedings of 7th International conference on XP and agile process in software engineering (XP ’06). Berlin, Heidelberg, Germany: Springer, pp. 143–153. https://doi.org/10.1007/11774129_15 .

-

Cooper, A. (2004). The inmates are running the asylum: why high-tech products drive us crazy and how to restore the sanity. Indianapolis, IN, U.S.A.: Sams Publishing.

-

Crall, A., Kosmala, M., Cheng, R., Brier, J., Cavalier, D., Henderson, S. and Richardson, A. (2017). ‘Volunteer recruitment and retention in online citizen science projects using marketing strategies: lessons from Season Spotter’. JCOM 16 (01), A01. URL: https://jcom.sissa.it/archive/16/01/JCOM_1601_2017_A01 .

-

Curtis, V. (2015). ‘Motivation to Participate in an Online Citizen Science Game: a Study of Foldit’. Science Communication 37 (6), pp. 723–746. https://doi.org/10.1177/1075547015609322 .

-

Ess, C. and Association of Internet Researchers (AoIR) (2002). Ethical decision-making and Internet research . URL: https://aoir.org/reports/ethics.pdf (visited on 8th February 2018).

-

Eveleigh, A. M. M., Jennett, C., Blandford, A., Brohan, P. and Cox, A. L. (2014). ‘Designing for dabblers and deterring drop-outs in citizen science’. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’14) . New York, NY, U.S.A.: ACM Press, pp. 2985–2994. https://doi.org/10.1145/2556288.2557262 .

-

Fotrousi, F., Fricker, S. A. and Fiedler, M. (2017). ‘The effect of requests for user feedback on Quality of Experience’. Software Quality Journal 26 (2), pp. 385–415. https://doi.org/10.1007/s11219-017-9373-7 .

-

Froehlich, J., Chen, M. Y., Consolvo, S., Harrison, B. and Landay, J. A. (2007). ‘MyExperience: a system for in situ tracing and capturing of user feedback on mobile phones’. In: Proceedings of the 5th international conference on Mobile systems, applications and services — MobiSys ’07 (San Juan, Puerto Rico, 11th–13th June 2007). New York, U.S.A.: ACM Press, pp. 57–70. https://doi.org/10.1145/1247660.1247670 .

-

Frommann, U. (2005). Die Methode “Lautes Denken” . URL: https://www.e-teaching.org/didaktik/qualitaet/usability/Lautes%20Denken_e-teaching_org.pdf (visited on 22nd January 2018).

-

Ginsburg, S. (2010). Designing the iPhone user experience: a user-centered approach to sketching and prototyping iPhone apps. New York, U.S.A.: Addison-Wesley Professional.

-

Golumbic, Y. N., Orr, D., Baram-Tsabari, A. and Fishbain, B. (2017). ‘Between vision and reality: a case study of scientists’ views on citizen science’. Citizen Science: Theory and Practice 2 (1), p. 6. https://doi.org/10.5334/cstp.53 .

-

Gould, J. D. and Lewis, C. (1985). ‘Designing for usability: key principles and what designers think’. Communications of the ACM 28 (3), pp. 300–311. https://doi.org/10.1145/3166.3170 .

-

Grace, K., Maher, M. L., Preece, J., Yeh, T., Stangl, A. and Boston, C. (2015). ‘A process model for crowdsourcing design: a case study in citizen science’. In: Design Computing and Cognition ’14. Ed. by J. Gero and S. Hanna. Cham, Switzerland: Springer International Publishing, pp. 245–262. https://doi.org/10.1007/978-3-319-14956-1_14 .

-

Gulliksen, J., Boivie, I., Persson, J., Hektor, A. and Herulf, L. (2004). ‘Making a difference: a survey of the usability profession in Sweden’. In: Proceedings of the third Nordic conference on Human-computer interaction — NordiCHI ’04 (Tampere, Finland). New York, U.S.A.: ACM Press, pp. 207–215. https://doi.org/10.1145/1028014.1028046 .

-

Gulliksen, J., Göransson, B., Boivie, I., Blomkvist, S., Persson, J. and Cajander, Å. (2003). ‘Key principles for user-centred systems design’. Behaviour & Information Technology 22 (6), pp. 397–409. https://doi.org/10.1080/01449290310001624329 .

-

Häder, M. (2015). Empirische Sozialforschung. Eine Einführung. Wiesbaden, Germany: VS Verlag für Sozialwissenschaften.

-

Haklay, M. M. and Nivala, A.-M. (2010). ‘User-centred design’. In: Interacting with geospatial technologies. Ed. by M. Haklay. Chippenham, U.K.: John Wiley & Sons, Ltd, pp. 89–106. https://doi.org/10.1002/9780470689813.ch5 .

-

Haklay, M. (2013). ‘Citizen Science and Volunteered Geographic Information: Overview and Typology of Participation’. In: Crowdsourcing Geographic Knowledge: Volunteered Geographic Information (VGI) in Theory and Practice. Ed. by D. Sui, S. Elwood and M. Goodchild. Berlin, Germany: Springer, pp. 105–122. https://doi.org/10.1007/978-94-007-4587-2_7 .

-

Hornbæk, K. and Frøkjær, E. (2005). ‘Comparing usability problems and redesign proposals as input to practical systems development’. In: Proceedings of the SIGCHI conference on Human factors in computing systems — CHI ’05 (Portland, OR, U.S.A.). New York, U.S.A.: ACM Press, pp. 391–400. https://doi.org/10.1145/1054972.1055027 .

-

International Organization for Standardization (2010). ISO 9241-210. Ergonomics of human-system interaction — part 210: human-centred design for interactive systems. Geneva, Switzerland: ISO.

-

Jennett, C. and Cox, A. L. (2014). ‘Eight guidelines for designing virtual citizen science projects’. In: HCOMP ’14 (Pittsburgh, PA, U.S.A. 2nd–4th November 2014), pp. 16–17. URL: https://www.aaai.org/ocs/index.php/HCOMP/HCOMP14/paper/view/9261 .

-

Jennett, C., Kloetzer, L., Schneider, D., Iacovides, I., Cox, A., Gold, M., Fuchs, B., Eveleigh, A., Mathieu, K., Ajani, Z. and Talsi, Y. (2016). ‘Motivations, learning and creativity in online citizen science’. JCOM 15 (03), A05. https://doi.org/10.22323/2.15030205 .

-

Karapanos, E. (2013). ‘User experience over time’. In: Modeling users’ experiences with interactive systems. Studies in Computational Intelligence. Vol. 436. Berlin, Heidelberg, Germany: Springer, pp. 57–83. https://doi.org/10.1007/978-3-642-31000-3_4 .

-

Kjeldskov, J., Graham, C., Pedell, S., Vetere, F., Howard, S., Balbo, S. and Davies, J. (2005). ‘Evaluating the usability of a mobile guide: the influence of location, participants and resources’. Behaviour & Information Technology 24 (1), pp. 51–65. https://doi.org/10.1080/01449290512331319030 .

-

Kloetzer, L., Da Costa, J. and Schneider, D. K. (2016). ‘Not so passive: engagement and learning in volunteer computing projects’. Human Computation 3 (1), pp. 25–68. https://doi.org/10.15346/hc.v3i1.4 .

-

Krueger, R. A. (1994). Focus groups: a practical guide for applied research. 2nd ed. Thousand Oaks, CA, U.S.A.: SAGE Publications Inc.

-

Kullenberg, C. and Kasperowski, D. (2016). ‘What is Citizen Science? A Scientometric Meta-Analysis’. PLOS ONE 11 (1), e0147152. https://doi.org/10.1371/journal.pone.0147152 .

-

Land-Zandstra, A. M., Devilee, J. L. A., Snik, F., Buurmeijer, F. and van den Broek, J. M. (2016). ‘Citizen science on a smartphone: Participants’ motivations and learning’. Public Understanding of Science 25 (1), pp. 45–60. https://doi.org/10.1177/0963662515602406 .

-

Mayring, P. (2010). Qualitative Inhaltsanalyse: Grundlagen und Techniken. 11th ed. Weinheim, Germany: Beltz.

-

Morgan, D. L. (1997). Focus groups as qualitative research. 2nd ed. Thousand Oaks, CA, U.S.A.: SAGE Publications Inc.

-

Newman, G., Wiggins, A., Crall, A., Graham, E., Newman, S. and Crowston, K. (2012). ‘The future of citizen science: emerging technologies and shifting paradigms’. Frontiers in Ecology and the Environment 10 (6), pp. 298–304. https://doi.org/10.1890/110294 .

-

Newman, G., Zimmerman, D., Crall, A., Laituri, M., Graham, J. and Stapel, L. (2010). ‘User-friendly web mapping: lessons from a citizen science website’. International Journal of Geographical Information Science 24 (12), pp. 1851–1869. https://doi.org/10.1080/13658816.2010.490532 .

-

Pagano, D. and Maalej, W. (2013). ‘User feedback in the appstore: an empirical study’. In: 2013 21st IEEE International Requirements Engineering Conference (RE) (Rio de Janeiro, Brazil, 17th–19th July 2013). IEEE, pp. 125–134. https://doi.org/10.1109/re.2013.6636712 .

-

Pettibone, L., Vohland, K. and Ziegler, D. (2017). ‘Understanding the (inter)disciplinary and institutional diversity of citizen science: a survey of current practice in Germany and Austria’. PLOS ONE 12 (6), e0178778. https://doi.org/10.1371/journal.pone.0178778 .

-

Preece, J., Rogers, Y. and Sharp, H. (2001). Interaction design: beyond human-computer interaction. New York, U.S.A.: John Wiley & Sons.

-

Preece, J. (2016). ‘Citizen science: new research challenges for human-computer interaction’. International Journal of Human-Computer Interaction 32 (8), pp. 585–612. https://doi.org/10.1080/10447318.2016.1194153 .

-

Preece, J. (2017). ‘How two billion smartphone users can save species!’ Interactions 24 (2), pp. 26–33. https://doi.org/10.1145/3043702 .

-

Preece, J. and Bowser, A. (2014). ‘What HCI can do for citizen science’. In: Proceedings of the extended abstracts of the 32nd annual ACM conference on Human factors in computing systems — CHI EA ’14 . New York, U.S.A.: ACM Press, pp. 1059–1060. https://doi.org/10.1145/2559206.2590805 .

-

Raddick, M. J., Bracey, G., Gay, P. L., Lintott, C. J., Murray, P., Schawinski, K., Szalay, A. S. and Vandenberg, J. (2010). ‘Galaxy Zoo: Exploring the Motivations of Citizen Science Volunteers’. Astronomy Education Review 9 (1), 010103, pp. 1–18. https://doi.org/10.3847/AER2009036 . arXiv: 0909.2925 .

-

Robson, C., Hearst, M., Kau, C. and Pierce, J. (2013). ‘Comparing the use of social networking and traditional media channels for promoting citizen science’. In: Proceedings of the 2013 conference on Computer supported cooperative work (CSCW ’13) (San Antonio, TX, U.S.A.). https://doi.org/10.1145/2441776.2441941 .

-

Sanders, E. B.-N. and Stappers, P. J. (2008). ‘Co-creation and the new landscapes of design’. CoDesign 4 (1), pp. 5–18. https://doi.org/10.1080/15710880701875068 .

-

Scanlon, E., Woods, W. and Clow, D. (2014). ‘Informal participation in science in the U.K.: identification, location and mobility with iSpot’. Journal of Educational Technology & Society 17 (2), pp. 58–71. URL: https://www.j-ets.net/ETS/journals/17_2/6.pdf .

-

Schaffer, E. (2004). Institutionalization of usability: a step-by-step guide. Boston, MA, U.S.A.: Addison-Wesley: Pearson Education.

-

Schuler, D. and Namioka, A. (1993). Participatory design: principles and practices. Hillsdale, NJ, U.S.A.: L. Erlbaum Associates Inc.

-

Sellen, A., Rogers, Y., Harper, R. and Rodden, T. (2009). ‘Reflecting human values in the digital age’. Communications of the ACM 52 (3), pp. 58–66. https://doi.org/10.1145/1467247.1467265 .

-

Seyff, N., Ollmann, G. and Bortenschlager, M. (2014). ‘AppEcho: a user-driven, in situ feedback approach for mobile platforms and applications’. In: Proceedings of the 1st International Conference on Mobile Software Engineering and Systems — MOBILESoft 2014 . New York, U.S.A.: ACM Press, pp. 99–108. https://doi.org/10.1145/2593902.2593927 .

-

Shirk, J. L., Ballard, H. L., Wilderman, C. C., Phillips, T., Wiggins, A., Jordan, R., McCallie, E., Minarchek, M., Lewenstein, B. V., Krasny, M. E. and Bonney, R. (2012). ‘Public Participation in Scientific Research: a Framework for Deliberate Design’. Ecology and Society 17 (2), p. 29. https://doi.org/10.5751/ES-04705-170229 .

-

Shneiderman, B. (1987). Designing the user interface: strategies for effective human-computer interaction. Reading, MA, U.S.A.: Addison-Wesley Publishing Co.

-

Silvertown, J. (2009). ‘A new dawn for citizen science’. Trends in Ecology & Evolution 24 (9), pp. 467–471. https://doi.org/10.1016/j.tree.2009.03.017 .

-

Smith, H., Fitzpatrick, G. and Rogers, Y. (2004). ‘Eliciting reactive and reflective feedback for a social communication tool: a multi session approach’. In: Proceedings of the 2004 conference on Designing interactive systems processes, practices, methods and techniques — DIS ’04 . New York, U.S.A.: ACM Press, pp. 39–48. https://doi.org/10.1145/1013115.1013123 .

-

Sturm, U., Schade, S., Ceccaroni, L., Gold, M., Kyba, C., Claramunt, B., Haklay, M., Kasperowski, D., Albert, A., Piera, J., Brier, J., Kullenberg, C. and Luna, S. (2018). ‘Defining principles for mobile apps and platforms development in citizen science’. Research Ideas and Outcomes 4, e23394. https://doi.org/10.3897/rio.4.e23394 . (Visited on 4th July 2018).

-

Sy, D. (2007). ‘Adapting usability investigations for agile user-centered design’. Journal of Usability Studies 2 (3), pp. 112–132. URL: http://dl.acm.org/citation.cfm?id=2835547.2835549 .

-

Szajna, B. and Scamell, R. W. (1993). ‘The effects of information system user expectations on their performance and perceptions’. MIS Quarterly 17 (4), pp. 493–516. https://doi.org/10.2307/249589 .

-

Traynor, B., Lee, T. and Duke, D. (2017). ‘Case study: building UX design into citizen science applications’. In: Design, User Experience and Usability: Understanding Users and Contexts: 6th International Conference, DUXU 2017, held as part of HCI International 2017 . Lecture Notes in Computer Science (Vancouver, BC, Canada). Cham, Switzerland: Springer International Publishing, pp. 740–752. https://doi.org/10.1007/978-3-319-58640-3_53 .

-

Valdes, C., Ferreirae, M., Feng, T., Wang, H., Tempel, K., Liu, S. and Shaer, O. (2012). ‘A collaborative environment for engaging novices in scientific inquiry’. In: Proceedings of the 2012 ACM international conference on Interactive tabletops and surfaces — ITS ’12 . New York, U.S.A.: ACM Press. https://doi.org/10.1145/2396636.2396654 .

-

Vredenburg, K., Mao, J.-Y., Smith, P. W. and Carey, T. (2002). ‘A survey of user-centered design practice’. In: Proceedings of the SIGCHI conference on Human factors in computing systems Changing our world, changing ourselves — CHI ’02 . New York, U.S.A.: ACM Press. https://doi.org/10.1145/503376.503460 .

-

Wald, D. M., Longo, J. and Dobell, A. R. (2016). ‘Design principles for engaging and retaining virtual citizen scientists’. Conservation Biology 30 (3), pp. 562–570. https://doi.org/10.1111/cobi.12627 .

-

Woods, W., McLeod, K. and Ansine, J. (2015). ‘Supporting mobile learning and citizen science through iSpot’. In: Mobile learning and STEM: case studies in practice. Ed. by H. Crompton and J. Traxler. Abingdon, U.K.: Routledge, pp. 69–86. https://doi.org/10.4324/9781315745831 .

-

Wynn, J. (2017). Citizen science in the digital age. Rhetoric, science and public engagement. Tuscaloosa, AL, U.S.A.: The University of Alabama Press.

-

Yadav, P. and Darlington, J. (2016). ‘Design guidelines for the user-centred collaborative citizen science platforms’. Human Computation 3 (1), pp. 205–211. https://doi.org/10.15346/hc.v3i1.11 .

-

Zhang, Y. and Wildemuth, B. M. (2009). ‘Qualitative analysis of content’. In: Applications of social research methods to questions in information and library science. Ed. by B. M. Wildemuth. Westport, CT, U.S.A.: Libraries Unlimited.

Authors

Ulrike Sturm leads the project “Discover Urban Nature” and manages the development of the app “Naturblick” at the Museum für Naturkunde Berlin (MfN). Before that, she coordinated the EU project “Europeana Creative” at MfN. She holds a Master’s degree in Urban Ecosystem Science from the Technical University of Berlin and a Bachelor’s degree in Education from the University of Bremen. E-mail: Ulrike.Sturm@mfn.berlin .

Martin Tscholl studied Social Science, Psychology, and Visual and Media Anthropology. Since 2015 he has been a research associate at the Museum für Naturkunde Berlin, where he conducts research on the cultural perception of urban nature with digital media. E-mail: Martin.Tscholl@mfn.berlin .