1 Introduction

The media play an extremely important role in disseminating climate change information to the public [e.g., Schäfer, 2007; Storch, 2009; Weingart, 2001]. Few other scientific topics have received a comparable amount of news media attention in the past two decades [Kirilenko and Stepchenkova, 2012; Painter, 2013; Pearce et al., 2014; Schäfer, Ivanova and Schmidt, 2014; Trumbo, 1996; Weingart, Engels and Pansegrau, 2000]. Between 1996 and 2010, the German quality newspaper Süddeutsche Zeitung published an average of 500 articles on climate change per year [Schäfer, Ivanova and Schmidt, 2014].

Climate change is a prime example of a highly complex scientific topic with a high degree of abstraction [Knorr-Cetina, 2002; Sluijs, 2012]. Multiple scientific disciplines contribute to gaining and interpreting scientific results, which can be conflicting. In addition, the degree of certainty varies across climate-related statistical models and projections, such as those covered in the reports of the IPCC (Intergovernmental Panel on Climate Change) [Hulme and Mahony, 2010; Painter, 2013]. Nevertheless, individuals must make everyday decisions based on their knowledge about climate change.

Media messages have the potential to enable audiences to develop and enhance their knowledge, and thus to shift attitudes and behavioral intentions. However, exposure to media coverage of climate change and climate science does not guarantee comprehension. Perceived familiarity with a scientific topic and factual knowledge have been found to be distinct constructs: although they are slightly correlated, media use predicts them differently [Ladwig et al., 2012, for the issue of nanotechnology]. To acquire new knowledge from media coverage of climate science, individuals require scientific literacy. To interpret scientific results on climate change, recipients must understand the basic ideas behind ‘probability’ and ‘uncertainty’.

Although there is an ongoing debate about the role of uncertainty in science communication [Heidmann and Milde, 2013; Keohane, Lane and Oppenheimer, 2014; Maslin, 2013; Painter, 2013; Rauser et al., 2014], its effects on audiences remain rather unclear. ‘Uncertainty’ is a common characteristic of media coverage of climate change [e.g., about the IPCC reports, see Painter, 2013], yet few empirical studies on its effects exist [Retzbach and Maier, 2014; Ryghaug, Sørensen and Næss, 2011]. According to Ryghaug, Sørensen and Næss [2011], a main effect of the uncertainty discourse related to climate change is that audiences become uncertain themselves, for example, about what counts as climate-friendly behavior.

In this paper, we argue that in order to measure media effects, researchers must consider and grasp the uncertainties in individuals’ knowledge about climate change and climate science. Scholars have criticized empirical research on people’s knowledge about scientific issues for weaknesses and inconsistencies in how knowledge is conceptualized and measured [Connor and Siegrist, 2010; Durant et al., 2000; Ladwig et al., 2012; Pardo and Calvo, 2002]. To address this critique, this paper theorizes five dimensions of knowledge about climate change and takes the degree of uncertainty into account.

It is therefore necessary to consider previous research on people’s knowledge of science issues. One popular but often criticized model in science communication is the knowledge deficit model. This model assumes that the general public (in contrast to scientists) is ignorant regarding scientific topics [Royal Society of London, 1985]. The main drivers of increased knowledge are information and education: the more knowledge or scientific literacy one has, the more positive one’s attitudes towards science and scientific issues are [Gustafson and Rice, 2016; Royal Society of London, 1985]. Conversely, this means that “low levels of scientific literacy result from a lack of information” [Kahlor and Rosenthal, 2009, p. 381] and lead to more skeptical opinions about science. Empirical studies have shown inconsistent, mostly weak to non-significant correlations between media use and knowledge as well as between knowledge and attitudes [e.g., Allum et al., 2008; Arlt, Hoppe and Wolling, 2011; Lee and Scheufele, 2006; Lee, Scheufele and Lewenstein, 2005; Nisbet et al., 2002; Taddicken, 2013; Taddicken and Neverla, 2011; Zhao, 2009]. As a consequence, scholars consider the knowledge deficit model ‘too simplistic’ [e.g., Gustafson and Rice, 2016; Sturgis and Allum, 2004]. This paper therefore aims to extend the basic assumption of this model by evaluating how different concepts and measurements of knowledge affect attitudes towards climate change.

Although Sturgis and Allum [2004] found that knowledge is a determinant of attitudes toward science, they stated that their analysis “highlights the complex and interacting nature of the knowledge-attitude interface” [p. 55]. The authors acknowledge:

“The key problem […] is obtaining satisfactory operationalizations of the relevant knowledge domains […] The process is at its most treacherous when, as in the current instance, the concepts in question are ‘fuzzy’, multi-dimensional, and, to a large degree, contested. […] Rather, the question that needs to be addressed is: how can we obtain the best measurements?”

When reviewing the state of research on measuring knowledge about climate change, it becomes clear that knowledge is often hard to distinguish from, or at least strongly intertwined with, perceptions, beliefs and cultural cognitions [e.g., Kahan, 2015; Lombardi, Sinatra and Nussbaum, 2013; McCright and Dunlap, 2011; Zhao et al., 2011]. Kahan [2015, p. 37] tried to disentangle “the question of ‘what do you know?’ from the question ‘who are you; whose side are you on?’.” He argues that there is a very strong relationship between science comprehension and belief in climate change, but that asking whether people believe in human-caused climate change does not measure their knowledge of the scientific evidence. Disentangling knowledge from identity management and cultural cognition becomes especially important for morally controversial and emotionally charged science topics such as climate change [Kahan, 2015; National Academies of Sciences, Engineering and Medicine, 2016].

This paper discusses appropriate concepts and measures of knowledge, not only with regard to survey questions but also with regard to data analysis, using empirical data from a survey among German Internet users. The first step is to discuss what to measure, that is, to differentiate and systematize the diverse dimensions of climate-related knowledge people can have. The second step deals with how to measure climate-related knowledge and which response scales are suitable for detecting what media users know, where they lack information, where they are uncertain and where they hold misperceptions, that is, perceptions that do not correspond with the current scientific consensus. Publications often neglect to describe how responses in different formats are (re-)coded for data analysis; we therefore present and discuss this aspect in detail. As a final step, we use multivariate regression models to test and compare whether these differentiations increase the total explained variance (R²) in attitudes towards climate change. This will shed light on the disentanglement of knowledge and attitudes.

2 What to measure: dimensions of (climate change) knowledge

Previous research in the field of climate sciences has occasionally addressed how to record knowledge across space and time [e.g., Miller, 1998; Pardo and Calvo, 2002]. Although measuring objective knowledge may appear more challenging than measuring subjective knowledge [e.g., Evans, 2011; McKercher, Prideaux and Pang, 2013], most scholars measure objective knowledge [e.g., Connor and Siegrist, 2010; Tobler, Visschers and Siegrist, 2012].

But what level of knowledge can, or even should, scientists expect individuals to know about a particular issue?

In the case of climate change, the IPCC reports frequently serve as knowledge bearer and reference for climate change knowledge [Engesser and Brüggemann, 2016]. When climate change knowledge is measured in questionnaires, the items mainly reflect the current scientific consensus. Most scholars measure knowledge about the causes of climate change [e.g., Bråten and Strømsø, 2010; Nolan, 2010; Sundblad, Biel and Gärling, 2009]; in particular, they frequently measure whether respondents know that human activities are the (main) cause of climate change. Very few reception studies measure different dimensions of (climate change) knowledge.

To reflect on different dimensions of knowledge, we propose applying the general differentiation summarized by Kiel and Rost [2002], because its application-oriented focus stems from didactics and educational sciences. This framework takes both the individual and the social relevance of knowledge into account. Kiel and Rost [2002] distinguish four types of knowledge, each defined by its function.

The first type is orientation knowledge, which enables people to orient themselves in the world without necessarily having to be applied immediately. It is understood as broad knowledge about various matters in the world. With regard to climate change, one can already speak of orientation knowledge when people know that anthropogenic climate change exists. In environmental psychology, this form has been investigated as “environment system knowledge” [“declarative knowledge”, Kaiser and Fuhrer, 2003]. For climate change, it can also be called “origin knowledge”: recipients know that (a) climate change exists, and (b) it is caused by human influence (e.g., the increase in greenhouse gas levels). Here, we refer to this dimension as (1) causal knowledge.

However, knowing about climate change and its anthropogenic origins does not necessarily mean that recipients know the precise meteorological or physical contexts. For this function, Kiel and Rost [2002] suggest the term explanation and interpretation knowledge. It refers to information that goes beyond awareness of the existence of certain phenomena and includes explanations, and it can cover both models and theories [Kiel and Rost, 2002]. Since people who do not work in the climate sciences are highly unlikely to have acquired knowledge about specific climate models, we suggest investigating more selectively what they know about basic climate science facts ((2) basic knowledge) and about the consequences of climate change ((3) effects knowledge). Basic knowledge comprises, above all, knowledge about CO₂, the greenhouse effect and the hole in the ozone layer. Knowledge about the consequences is not just knowledge about the increase in average global temperature. Instead, survey studies should record much more specific knowledge, such as whether an increase in precipitation is to be expected to the same degree in all regions worldwide, or what the effects of melting Arctic ice are.

As a third function of knowledge, Kiel and Rost [2002] identify understanding that relates to human actions, practices, methods and strategies. In relation to the reception of climate change communication, it is particularly interesting whether recipients know which everyday activities generate high CO₂ emissions and which lead to a low ‘CO₂ footprint’. Accordingly, this knowledge is referred to as (4) action-related knowledge [Kaiser and Fuhrer, 2003; see also Tobler, Visschers and Siegrist, 2012].

Finally, the fourth type is source knowledge [Kiel and Rost, 2002], which refers to the origins of knowledge and where it can be found (e.g., in a library or on Wikipedia). Here, it is particularly relevant how recipients perceive the climate sciences, which produce knowledge about climate change and are thus the central ‘knowledge source’. Nisbet et al. [2002] have described this knowledge as “procedural science knowledge”, since it comprises knowledge about the process of gaining knowledge. This dimension also covers recognition of the transient, incomplete, and contradictory nature of results generated by the (climate) sciences and reflects whether recipients know that the (climate) sciences can never offer universally valid answers with zero error probability. As recommended by Bauer, Allum and Miller [2007] and Miller [1983], this dimension completes the measurement of knowledge about scientific issues such as climate change ((5) procedural knowledge).

We will use the aforementioned dimensions to investigate what people in Germany know about climate change:

RQ1: What do Germans know about climate change in relation to different knowledge dimensions?

3 How to measure: concepts and measures of (climate change) knowledge

The literature review on survey methods for knowledge measurement revealed numerous distinct possibilities for operationalization. Early survey studies captured definitions and understanding of scientific topics using “open-ended questions”, such as “Please tell me, in your own words, what is DNA?” [Miller, 1998]. Kahlor and Rosenthal [2009] analyzed answers defining and explaining climate change and coded them by complexity and accuracy. Anik and Khan [2012] categorized respondents by level of knowledge based on their answers. In telephone surveys, by contrast, so-called “multi-part questions” were asked, such as whether “the Earth goes around the Sun, or the Sun goes around the Earth?” [Miller, 1998, p. 208]. Next, we discuss three more widespread concepts in detail: the closed-ended true-false quiz, the threefold distinction between informed, uninformed and misinformed respondents, and a combination of knowledge and confidence.

3.1 Closed-ended true-false quiz: knowledge and deficiencies in knowledge (A1)

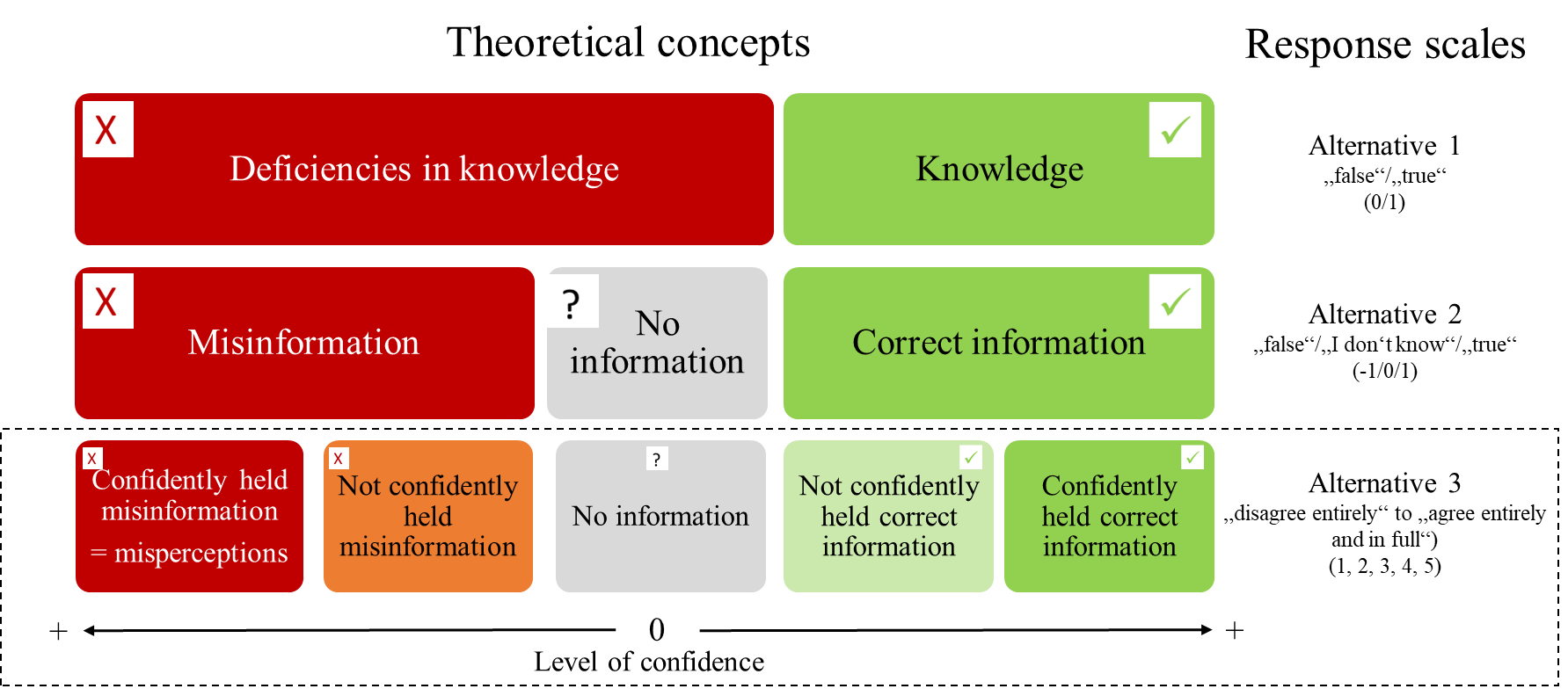

As open-ended questions lack practicability in survey studies [National Academies of Sciences, Engineering and Medicine, 2016], the “closed-ended true-false quiz” 1 soon became the most popular form [Pasek, Sood and Krosnick, 2015], in which survey participants assess true and false statements [e.g., “The center of the Earth is very hot.” (true or false?), National Academies of Sciences, Engineering and Medicine, 2016; see alternative A1 in Figure 1]. A standard method for determining respondents’ knowledge is to count their correct answers [e.g., Bråten and Strømsø, 2010; Dijkstra and Goedhart, 2012; Nolan, 2010; Vignola et al., 2013] or to build a mean index ranging from 0 (no knowledge) to 1 (knowledge on all items) [Tobler, Visschers and Siegrist, 2012]. The more points or the higher the mean, the more knowledge is present. Studies using this dichotomous distinction mainly focus on discovering either existing knowledge or knowledge gaps. However, this option ignores the 50 percent probability of choosing the correct answer by chance. Furthermore, researchers cannot be certain whether respondents picked an incorrect answer because they did not know how to respond or because they are misinformed about the issue in question. We therefore suggest two alternatives for measuring (climate change) knowledge in the following sections.
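A minimal Python sketch of the two A1 scoring rules, for illustration only: the function names and the example answer vector are hypothetical, and answers are assumed to be already coded 1 (correct) and 0 (incorrect). It also makes the chance problem concrete, since a respondent who guesses on every true-false item is expected to answer half of them correctly.

```python
# Hypothetical illustration of A1 (dichotomous) scoring; not the authors' code.
# Each answer is assumed to be coded 1 = correct, 0 = incorrect.


def a1_sum_index(answers: list[int]) -> int:
    """Number of correctly answered items."""
    return sum(answers)


def a1_mean_index(answers: list[int]) -> float:
    """Share of correct answers: 0 = no knowledge, 1 = knowledge on all items."""
    if not answers:
        raise ValueError("at least one item is required")
    return sum(answers) / len(answers)


def expected_chance_sum(n_items: int, p_correct_by_chance: float = 0.5) -> float:
    """Expected number of correct answers if every item is answered by pure guessing."""
    return n_items * p_correct_by_chance


answers = [1, 0, 1, 1, 0, 1]  # invented responses to six true/false items
print(a1_sum_index(answers))  # 4
print(a1_mean_index(answers))  # share of correct answers (4 of 6)
print(expected_chance_sum(len(answers)))  # 3.0
```

Read against the chance baseline, the invented respondent above scores only one item better than a pure guesser would be expected to.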

3.2 Threefold distinction: informed, uninformed and misinformed (A2)

Most notably, Kuklinski et al. [2000] suggest a threefold distinction between being informed, uninformed or misinformed. “To be informed requires, first, that people have factual beliefs and, second, that the beliefs be accurate. If people do not hold factual beliefs at all, they are merely uninformed. […] But if they firmly hold beliefs that happen to be wrong, they are misinformed” [Kuklinski et al., 2000, pp. 792–793]. Misinformation is assumed to lead to more problematic attitudes and behavioral intentions than a lack of information [in the field of political communication: Kuklinski et al., 2000; Pasek, Sood and Krosnick, 2015]. For some questions, it may make no behaviorally significant difference whether citizens do not respond to a knowledge question or give the wrong answer. For questions about climate-friendly activities, however, being misinformed and being uninformed may result in different attitudes and behaviors. For example, someone who does not know whether taking the train or driving a car causes more CO₂ emissions may alternate between car and train, whereas a misinformed person may always drive the car.

This threefold concept is frequently applied in survey studies by including a third response option such as “I don’t know” [e.g., Dijkstra and Goedhart, 2012; Tobler, Visschers and Siegrist, 2012]. For data analysis, however, most scholars include only the correct answers in sum or mean indices, just as in the dichotomous distinction [e.g., Connor and Siegrist, 2010; Tobler, Visschers and Siegrist, 2012]. An alternative is to count misinformation (-1) as negative knowledge in a mean index in addition to information (+1; see A2 in Figure 1).
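The following Python sketch contrasts the conventional correct-only index with the A2 index described above, in which “don’t know” counts as 0 and misinformation as -1. The response labels and the two invented respondents are hypothetical; they show that both respondents receive the same correct-only score although one is uninformed and the other misinformed.

```python
# Hypothetical comparison of two scorings for a true/false/"don't know" battery.
A2_CODES = {"correct": 1, "dont_know": 0, "incorrect": -1}


def correct_only_mean(responses: list[str]) -> float:
    """Conventional index: only correct answers count (range 0 to 1)."""
    return sum(r == "correct" for r in responses) / len(responses)


def a2_mean_index(responses: list[str]) -> float:
    """A2 index: information +1, no information 0, misinformation -1 (range -1 to +1)."""
    return sum(A2_CODES[r] for r in responses) / len(responses)


uninformed = ["correct", "correct", "dont_know", "dont_know"]
misinformed = ["correct", "correct", "incorrect", "incorrect"]

for name, resp in [("uninformed", uninformed), ("misinformed", misinformed)]:
    print(name, correct_only_mean(resp), a2_mean_index(resp))
# uninformed 0.5 0.5
# misinformed 0.5 0.0
```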

3.3 Combination of knowledge and confidence (A3)

What precisely is behind the correct or false responses to the knowledge questions?

Sociological research has highlighted the importance of examining further facets of knowledge and ignorance [Ravetz, 1993; Stocking and Holstein, 1993]. Referring to expert knowledge, Janich, Rhein and Simmerling [2010] show, based on Ravetz [1993], that there is a ‘knowledge about one’s own knowledge’. Some scholars suggest that certainty about one’s own knowledge (confidence) can supplement the dichotomous or threefold concepts with further differentiations [e.g., Flynn, Nyhan and Reifler, 2017; Kuklinski et al., 2000; Pasek, Sood and Krosnick, 2015]. As a result, four combinations emerge in addition to the category “no information” (see Figure 1). Confidently held correct information denotes certain knowledge. People who are not sure whether their correct answer is accurate hold correct information, but not confidently. Unlike the first group, the second reports uncertainty; in the case of climate change, this might lead to less climate-friendly attitudes or behaviors, even though both groups are equally informed. Differentiating misinformation by confidence is emphasized as well: “It is one thing not to know and be aware of one’s ignorance. It is quite another to be dead certain about factual beliefs that are far off the mark” [Kuklinski et al., 2000, p. 809]. Hence, misinformation consists of two categories: not-confidently held misinformation and misperception. The latter refers to information that people are confident is true despite its incongruence with the scientific consensus. Concerning climate change, and action-related knowledge in particular, misperceptions might be problematically linked to everyday activities that lead to a high ‘CO₂ footprint’ although people are convinced that they act in a climate-friendly way. 2 “Misperceptions are prevalent in a number of ongoing debates in politics, health, and science” [Flynn, Nyhan and Reifler, 2017, p. 129] and may spread as conspiracy theories or ‘alternative facts’, for instance via online media. To develop effective communication strategies, survey studies need to identify ‘misperceivers’. Misperceptions can have a negative impact on attitudes and behaviors, and because of their high confidence and firm belief that they are right, misperceivers are also the group least likely to be corrected [Flynn, Nyhan and Reifler, 2017; Kuklinski et al., 2000].

Most scholars who have considered confidence [Kuklinski et al., 2000; Shephard et al., 2014] or certainty [e.g., Alvarez and Franklin, 1994; Krosnick et al., 2006; Pasek, Sood and Krosnick, 2015; Sundblad, Biel and Gärling, 2009] in their survey knowledge measures have included one additional question per knowledge item. Knowledge and certainty are then combined in a second step to study possible linkages between inaccuracy and confidence [Kuklinski et al., 2000]. However, this procedure doubles the number of items. For this reason, we suggest a five-point scale from 1 “disagree entirely” to 5 “agree entirely and in full”, similar to Lombardi, Sinatra and Nussbaum [2013] and Reynolds et al. [2010]. This Likert scale saves considerable space and still covers all five knowledge categories presented (see Figure 1).
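A minimal Python sketch of how a single five-point response could be assigned to the five categories. Because Figure 1 is not reproduced here, the mapping is an assumption: for a correct statement, 5 and 4 are read as confidently and not-confidently held correct information, 3 as no information, and 2 and 1 as not-confidently held misinformation and misperception; for an incorrect statement, the scale is mirrored. The class and function names are hypothetical.

```python
from enum import Enum


class KnowledgeType(Enum):
    CERTAIN_KNOWLEDGE = "confidently held correct information"
    UNCERTAIN_KNOWLEDGE = "not-confidently held correct information"
    NO_INFORMATION = "no information"
    UNCERTAIN_MISINFORMATION = "not-confidently held misinformation"
    MISPERCEPTION = "misperception"


# Assumed mapping once the scale is oriented so that 5 = full agreement with the correct answer.
_BY_SCALE_POINT = {
    5: KnowledgeType.CERTAIN_KNOWLEDGE,
    4: KnowledgeType.UNCERTAIN_KNOWLEDGE,
    3: KnowledgeType.NO_INFORMATION,
    2: KnowledgeType.UNCERTAIN_MISINFORMATION,
    1: KnowledgeType.MISPERCEPTION,
}


def classify_a3(response: int, statement_is_true: bool) -> KnowledgeType:
    """Map a 1-5 agreement response (1 = "disagree entirely", 5 = "agree entirely
    and in full") to one of five knowledge categories."""
    if response not in _BY_SCALE_POINT:
        raise ValueError("response must be an integer from 1 to 5")
    # Incorrect statements should be rejected, so the scale is mirrored (1 <-> 5, 2 <-> 4).
    oriented = response if statement_is_true else 6 - response
    return _BY_SCALE_POINT[oriented]


print(classify_a3(5, statement_is_true=True).value)   # confidently held correct information
print(classify_a3(1, statement_is_true=False).value)  # confidently held correct information
print(classify_a3(5, statement_is_true=False).value)  # misperception
```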

The introduction of these three concepts of measurement warrants an additional research question:

RQ2: To what extent do results on climate change knowledge vary across different response formats?

4 The knowledge-attitude interface

Previous research on the interface of knowledge and attitudes toward science and scientific topics has mainly detected small or no effects, or conflicting results [e.g., Arlt, Hoppe and Wolling, 2011; Lee, Scheufele and Lewenstein, 2005; Lee and Scheufele, 2006; Nisbet et al., 2002; Taddicken, 2013; Taddicken and Neverla, 2011; Zhao, 2009], often with far-reaching consequences such as the rejection of the knowledge deficit model. However, although attributing disbelief in human-made climate change to low levels of knowledge and science literacy is not the only explanation for public conflict over this issue [Kahan, 2015], it is still reasonable to assume some kind of relationship. As argued above, methodological shortcomings might account for the lack of empirical evidence. From a recipient’s perspective, the different dimensions of knowledge, and the types of knowledge that result from combining accuracy with certainty or confidence, might affect people’s attitudes or their perceived need to act in different ways.

From a communicator’s perspective, people with different types of knowledge need to be addressed through different communicative actions. As noted by Ko [2016, p. 432], “it is not the lack of knowledge that communicators must address, but rather the tackling or ‘negotiating with’ […] misinformation, myths and incorrect beliefs that is the problem”. Both perspectives therefore highlight the importance of measuring and analyzing knowledge in a differentiated way, as well as of the correlations between knowledge and attitudes.

Thus, this paper answers a third research question:

RQ3: To what extent do results of regression analyses on attitudes toward climate change (problem awareness, willingness to assume responsibility, attribution of responsibility to public agents) differ based on different dimensions and the operationalization of climate change knowledge (A1, A2, A3)?

5 Method

5.1 Questionnaire and sample

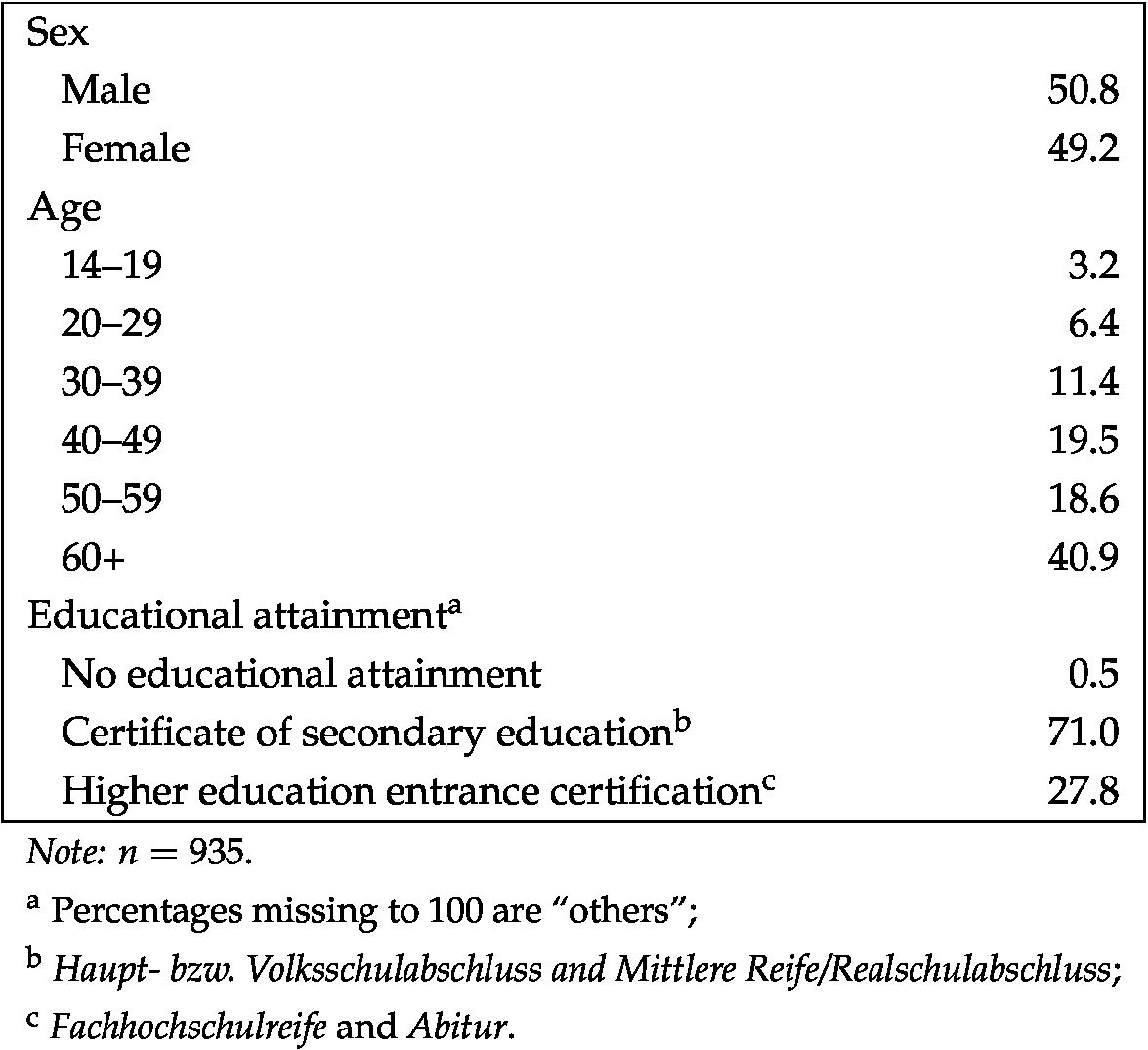

The research questions were addressed using an online access panel survey. German respondents were surveyed in a longitudinal study with three waves from 2013 to 2014 regarding their knowledge, attitudes, and media use on the scientific topic of climate change. In the first wave, quota sampling by age, sex, and federal state was used to achieve representativeness for Germany. The survey data used for this paper stem from the third wave, collected in October 2014, ten months after the second wave. On average, participants needed approximately twenty minutes to complete the online questionnaire. Cases with too many missing values on the variables of interest were excluded, which resulted in the final sample size reported in Table 1.

5.2 Measures and re-coding of knowledge

In their study, Tobler, Visschers and Siegrist [2012] established four scales for climate change knowledge and tested them in a mail survey among Swiss respondents. These scales cover the first four dimensions introduced above and were applied here. Causal knowledge was measured with Tobler et al.’s scale “knowledge concerning climate change and causes” (see Table 3). Basic knowledge was measured with the scale “physical knowledge about CO₂ and the greenhouse effect”, and effects knowledge with the scale “knowledge concerning expected consequences of climate change” (see Tables 4 and 5). For action-related knowledge, the corresponding scale by Tobler, Visschers and Siegrist [2012] was applied (see Table 6). In addition, items were developed to cover the last dimension of scientific knowledge, following the considerations of Kiel and Rost [2002]. The procedural knowledge scale captures recipients’ understanding of how scientific findings are developed in climate research [Bauer, Allum and Miller, 2007; Miller, 1983; Nisbet et al., 2002; Taddicken and Reif, 2016] (see Table 7).

The levels of difficulty vary among the items in each dimension. While some items can be considered simple general knowledge, others require expertise. The scales include statements that are correct according to the current state of research and should therefore be agreed with, as well as incorrect statements that should be rejected.

In contrast to the response format recommended by Tobler, Visschers and Siegrist [2012; “true”, “false”, “don’t know”], a five-point response scale from 1 “disagree entirely” to 5 “agree entirely and in full” 3 is employed here. Note, however, that this scale does not reflect a linear gradation of knowledge: respondents who opted for 1 “disagree entirely” do not necessarily know less about climate change than those who selected the middle of the scale, which indicates that they are uninformed. For an incorrect statement, for instance, disagreeing entirely is the confidently correct answer. To compare all three concepts and methods presented in Figure 1, this response scale was recoded twice: into the threefold and into the dichotomous distinction.
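The two recodings can be sketched as follows in Python. This is an illustration, not the authors’ syntax: it assumes that, after mirroring incorrect statements, scale points 4 and 5 count as correct answers, 3 as no information, and 1 and 2 as misinformation; the function names and the example data are hypothetical. The A2 code keeps the middle category as “no information” (0), whereas the A1 code, like a correct-only count, merges it with misinformation.

```python
# Hypothetical sketch: recoding a 1-5 agreement response into A2 and A1 codes.


def mirror(response: int, statement_is_true: bool) -> int:
    """Reverse-code incorrect statements so that 5 always means 'confidently correct'."""
    if not 1 <= response <= 5:
        raise ValueError("response must lie between 1 and 5")
    return response if statement_is_true else 6 - response


def to_threefold(response: int, statement_is_true: bool) -> int:
    """A2: +1 = informed, 0 = uninformed (middle category), -1 = misinformed."""
    r = mirror(response, statement_is_true)
    if r >= 4:
        return 1
    if r == 3:
        return 0
    return -1


def to_dichotomous(response: int, statement_is_true: bool) -> int:
    """A1: 1 = correct, 0 = not correct (uninformed and misinformed combined)."""
    return 1 if to_threefold(response, statement_is_true) == 1 else 0


# Invented example: truth values of four items and one respondent's answers.
key = [True, True, False, False]
responses = [5, 3, 2, 4]
print([to_threefold(r, k) for r, k in zip(responses, key)])    # [1, 0, 1, -1]
print([to_dichotomous(r, k) for r, k in zip(responses, key)])  # [1, 0, 1, 0]
```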

Attitudes are measured by three indices on the same five-point Likert scale (see Taddicken and Reif [2016] for a list of items). Problem awareness, following Taddicken [2013] and Taddicken and Neverla [2011], denotes the degree to which respondents rate climate change as a problematic issue. Following Wippermann, Calmbach and Kleinhückelkotten [2008], we measured the degree to which respondents think that individual citizens can take climate-protective action (willingness to assume responsibility) and the degree to which they consider public agents responsible (attribution of responsibility to public agents).

5.3 Data analyses

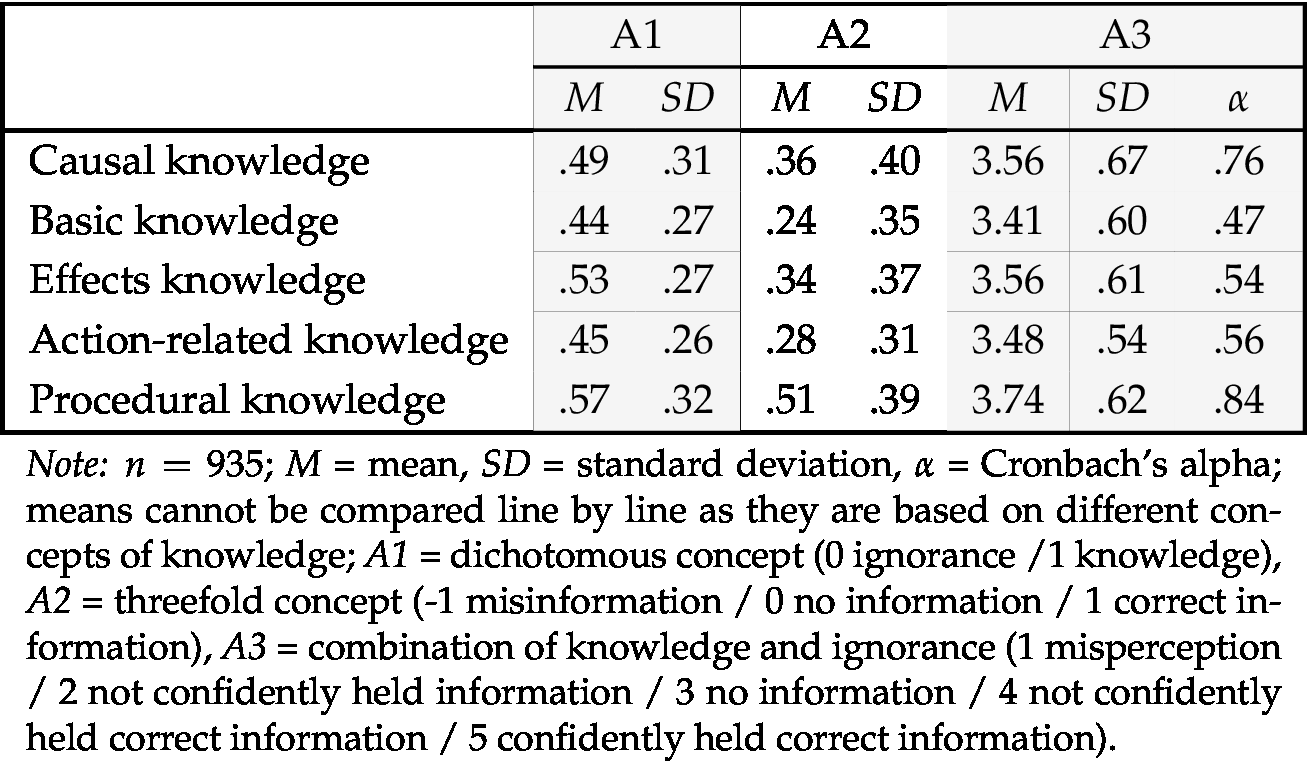

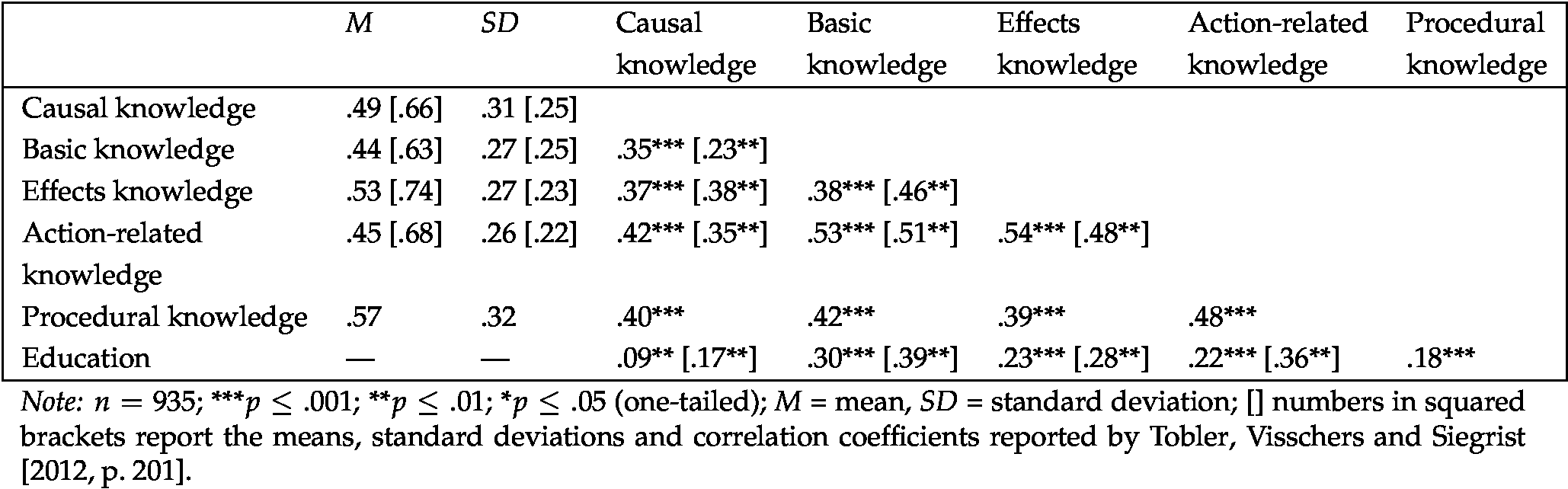

Mean indices were created for each concept and knowledge dimension (see Table 2). The additionally developed scale with nine knowledge items about processes in the climate sciences has a higher reliability than all of the Tobler, Visschers and Siegrist [2012] scales tested here. Factor analyses for all Tobler, Visschers and Siegrist [2012] scales showed that the incorrect statements tend to load on a second factor. The low reliability values may thus be explained by the measures themselves and indicate that participants generally tend to agree with the statements presented in the survey. However, to keep the results comparable with the existing scales, we decided not to delete any items.
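For readers who want to recompute the reliability of such indices, the standard formula for Cronbach’s alpha, alpha = k/(k-1) * (1 - sum of the item variances / variance of the total score), can be sketched in Python with the standard library. Which coding (A1, A2 or A3) the reliabilities discussed here refer to is not assumed; the function accepts any matrix of item scores, and the toy data are invented.

```python
from statistics import variance


def cronbach_alpha(item_scores: list[list[float]]) -> float:
    """Cronbach's alpha for k items.

    item_scores[i][j] is the score of respondent j on item i
    (e.g., 0/1 codes for A1 or -1/0/+1 codes for A2).
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Total score per respondent: sum over items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    sum_item_var = sum(variance(item) for item in item_scores)
    return k / (k - 1) * (1 - sum_item_var / total_var)


# Invented toy data: two items answered by four respondents.
print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))  # about 0.75
```

Because both the item variances and the total variance use the same (sample) estimator, the choice between sample and population variance does not change alpha.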

First, descriptive frequencies are presented at the single-item and mean-index levels (RQ1), which allows comparison with the Swiss study by Tobler, Visschers and Siegrist [2012]. Second, results are compared across the different response formats (RQ2). Finally, linear regression analyses are conducted to contrast the predictive power of the different knowledge dimensions for attitudes across the three concepts (RQ3).
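To make the R² comparison for RQ3 concrete, the following dependency-free Python sketch fits an ordinary least squares regression with an intercept via the normal equations and returns its explained variance. It is a sketch, not the authors’ analysis procedure, and the function names and toy data are hypothetical; the idea is to call it once per knowledge concept (A1, A2, A3) with the same attitude index as outcome and compare the resulting R² values.

```python
def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular design matrix (collinear predictors?)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols_r_squared(X: list[list[float]], y: list[float]) -> float:
    """R² of an OLS regression of y on the columns of X plus an intercept."""
    rows = [[1.0, *map(float, row)] for row in X]
    p = len(rows[0])
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    if ss_tot == 0:
        raise ValueError("outcome has zero variance")
    return 1 - ss_res / ss_tot


# Invented toy data: two knowledge indices per respondent and one attitude score.
X = [[0.5, 0.2], [0.8, 0.6], [0.3, -0.1], [0.9, 0.7], [0.6, 0.1]]
y = [3.0, 4.2, 2.5, 4.8, 3.1]
print(ols_r_squared(X, y))
```

For real survey data, an established statistics package would normally be used; the sketch only shows what the compared R² values measure.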

6 Results

In the following section, the results according to the three research questions are presented.

6.1 Knowledge about climate change (RQ1)

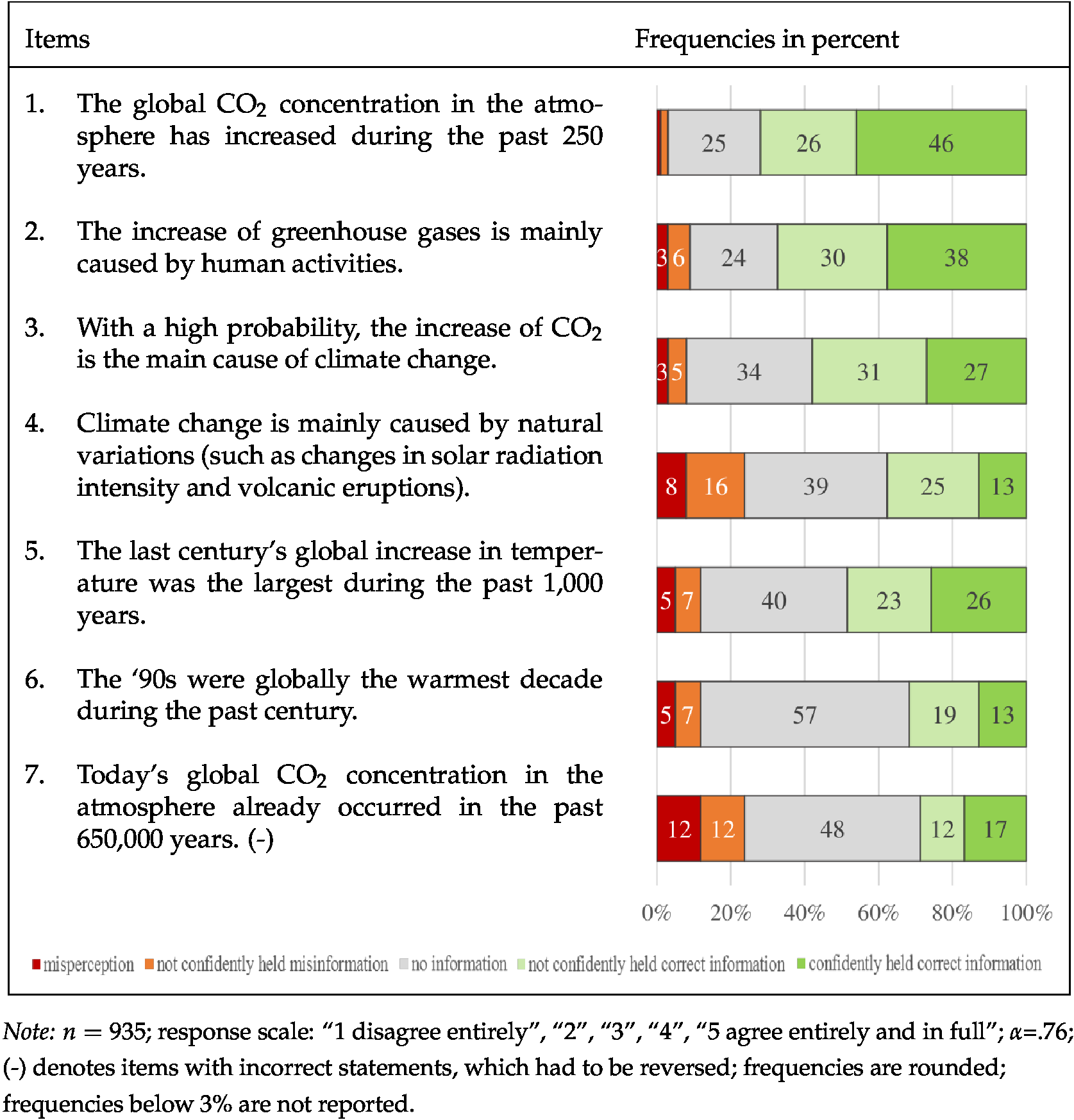

Based on the frequencies for each item, the proportions of respondents with different types of knowledge are discussed for each dimension. Overall, our data show lower proportions of correctly informed respondents and higher proportions of uninformed and often misinformed respondents than the Swiss sample. However, Tobler, Visschers and Siegrist [2012] were unable to differentiate between misinformation and misperceptions. Our data show that for most items about climate change, the smallest group of respondents is confident that their misinformation is correct and thus holds misperceptions, as assumed by other scholars [e.g., Kuklinski et al., 2000; Pasek, Sood and Krosnick, 2015]. Often, more respondents hold correct information confidently than uncertainly.
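The item-level frequencies discussed in this section can be produced with a short tabulation. The Python sketch below assumes, for illustration, that responses on the 1 to 5 scale are mirrored for incorrect statements and then mapped to the five categories of Figure 1 (5 = certain knowledge through 1 = misperception); this mapping, the category labels and the example responses are hypothetical.

```python
from collections import Counter

# Assumed mapping after mirroring: 5 = confidently correct ... 1 = confidently wrong.
CATEGORIES = {
    5: "certain knowledge",
    4: "uncertain knowledge",
    3: "no information",
    2: "uncertain misinformation",
    1: "misperception",
}


def category_shares(responses: list[int], statement_is_true: bool) -> dict[str, float]:
    """Percentage of respondents in each knowledge category for one item."""
    if not responses:
        raise ValueError("no responses given")
    mirrored = [r if statement_is_true else 6 - r for r in responses]
    counts = Counter(CATEGORIES[r] for r in mirrored)
    n = len(responses)
    # Counter returns 0 for categories nobody fell into.
    return {label: 100 * counts[label] / n for label in CATEGORIES.values()}


# Invented answers of eight respondents to an incorrect statement.
shares = category_shares([1, 1, 2, 3, 3, 4, 5, 1], statement_is_true=False)
for label, pct in shares.items():
    print(f"{label:<26}{pct:5.1f}%")
```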

6.1.1 Causal knowledge

Similar to the Swiss sample, most German respondents are well informed about the increase in CO₂ and greenhouse gases in general, which is mainly caused by human activity (items 1–3, see Table 3). Respondents are particularly uninformed about the fact that the 1990s were globally the warmest decade of the past century.

A higher level of difficulty (or a tendency to agree with all statements) is reflected in the higher number of misinformed respondents for both incorrect statements.

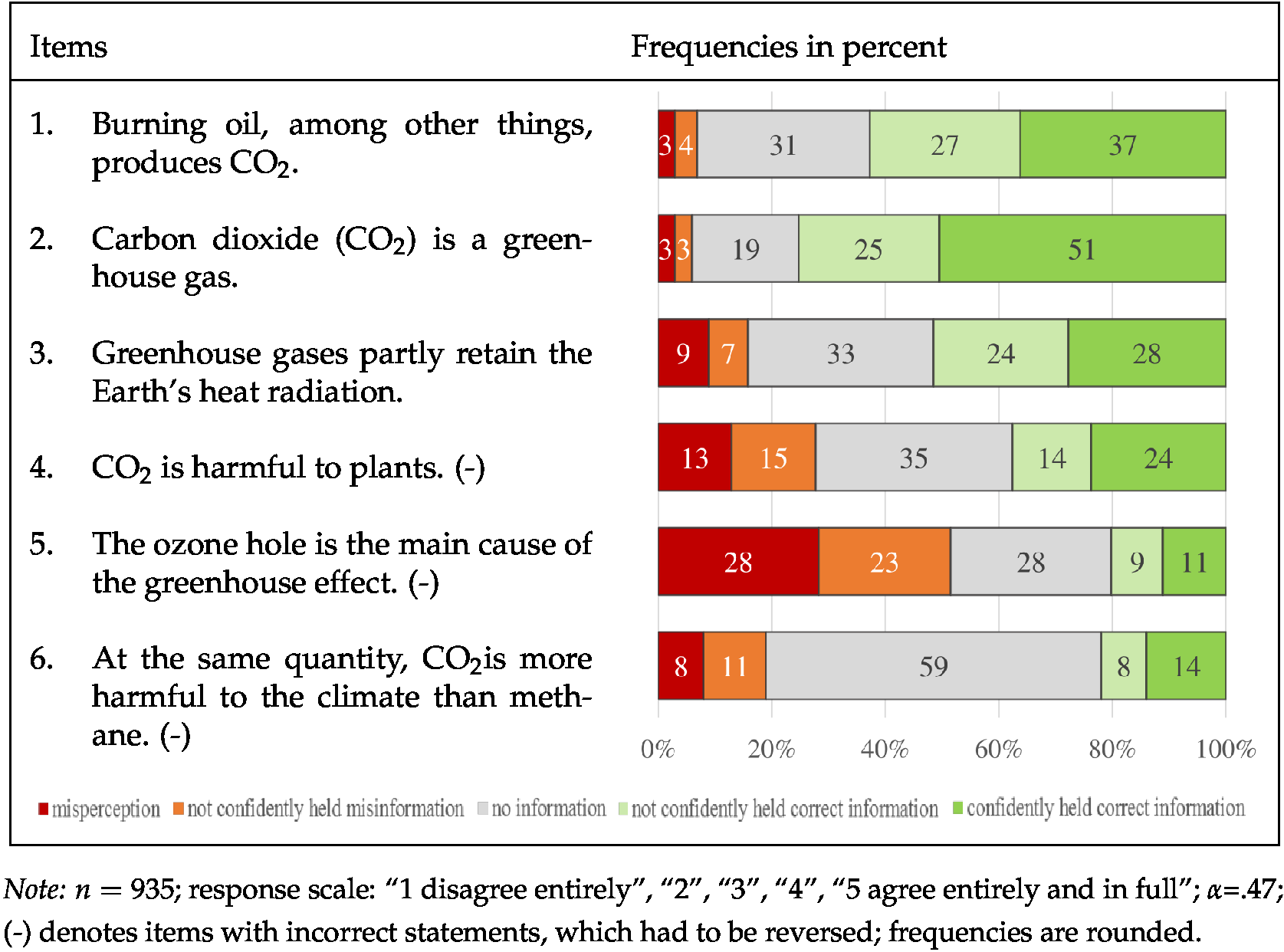

6.1.2 Basic knowledge

In this sample, most respondents are knowledgeable about the first three items on the basics of greenhouse gases (see Table 4). Incorrectly formulated statements seem to be more difficult to answer correctly. Particularly high proportions of uninformed respondents are found for the statements on the harmfulness of CO₂ and methane (items 4 and 6). A majority of participants (54%) are misinformed about the link between the ozone hole and the greenhouse effect, and 30 percent are even confident that the ozone hole is the main cause of the greenhouse effect. The low Cronbach’s alpha value of .47, however, must be noted critically.

6.1.3 Effects knowledge

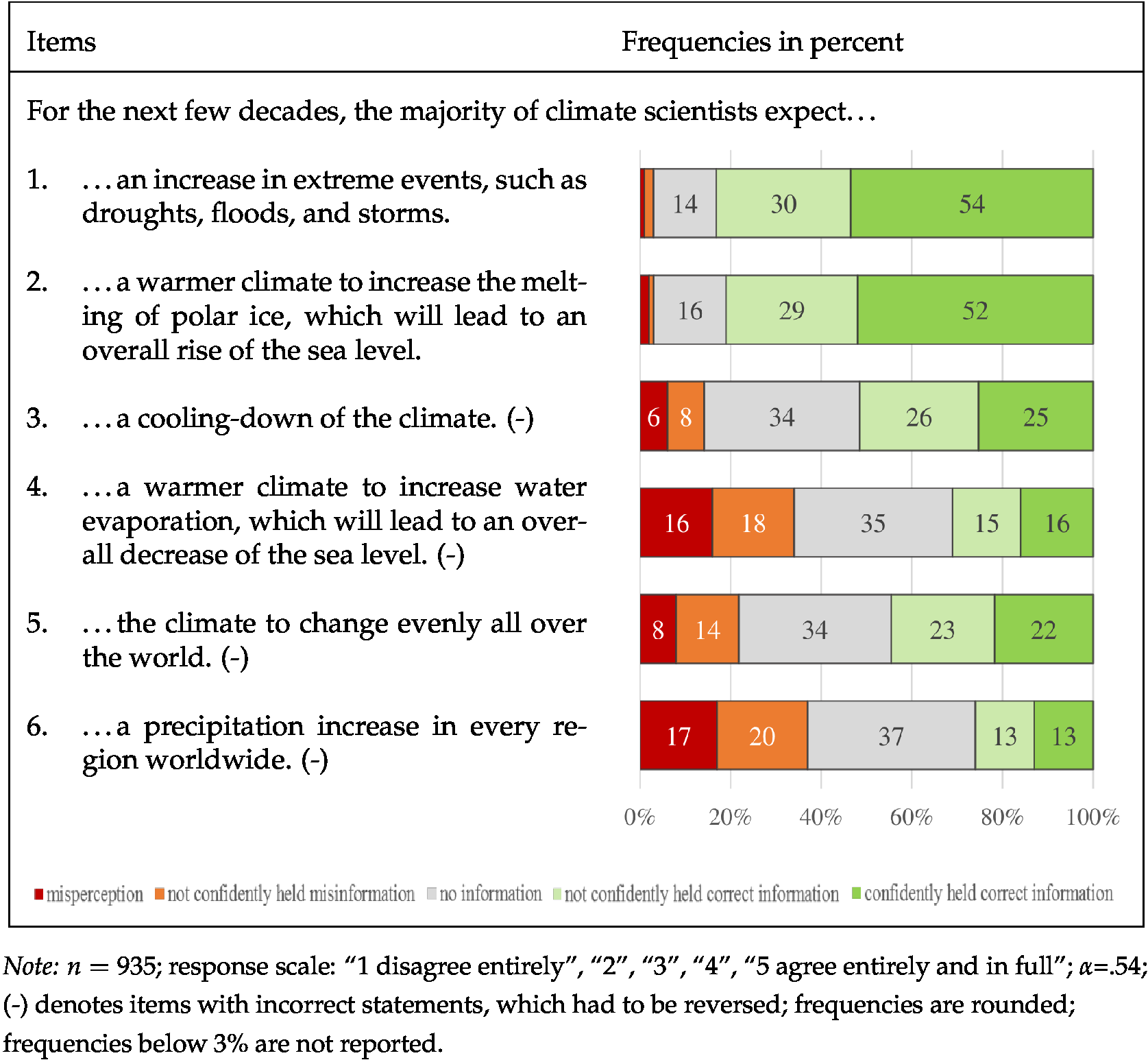

Most German respondents agree that an increase in extreme weather events and the melting of polar ice are expected in the coming decades (see items 1 and 2 in Table 5). Few respondents are uninformed or misinformed about these items, and more than half of the sample is confident about their correct information. A possible reason is that future scenarios are often presented in relation to climate change in the German mass media.

Most incorrect statements seem to be more complex for the respondents. Here, lack of information, misinformation and misperceptions are more prevalent. About 16 percent of respondents confidently and incorrectly believe in an overall rise of the sea level (item 4), and 17 percent believe that an increase in precipitation is expected in every region worldwide (item 6).

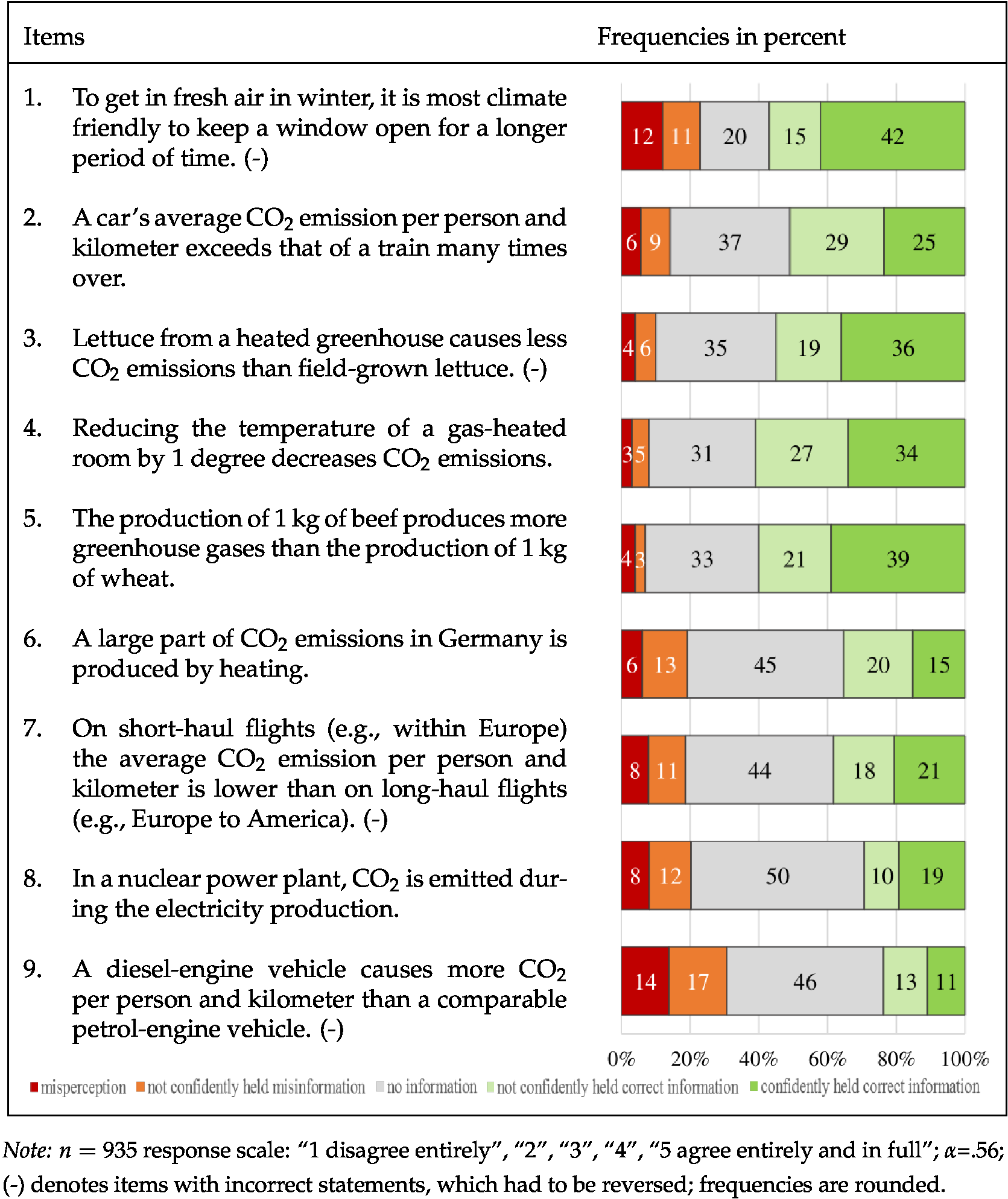

6.1.4 Action-related knowledge

Although the order of items by frequency of informed respondents differs from the results presented by Tobler, Visschers and Siegrist [2012], most respondents in our sample are well informed about the first five action-related items. The highest proportion of misinformed and the lowest proportion of correctly informed respondents are found for the statement about the emission rates of diesel compared to petrol engines (item 9, see Table 6).

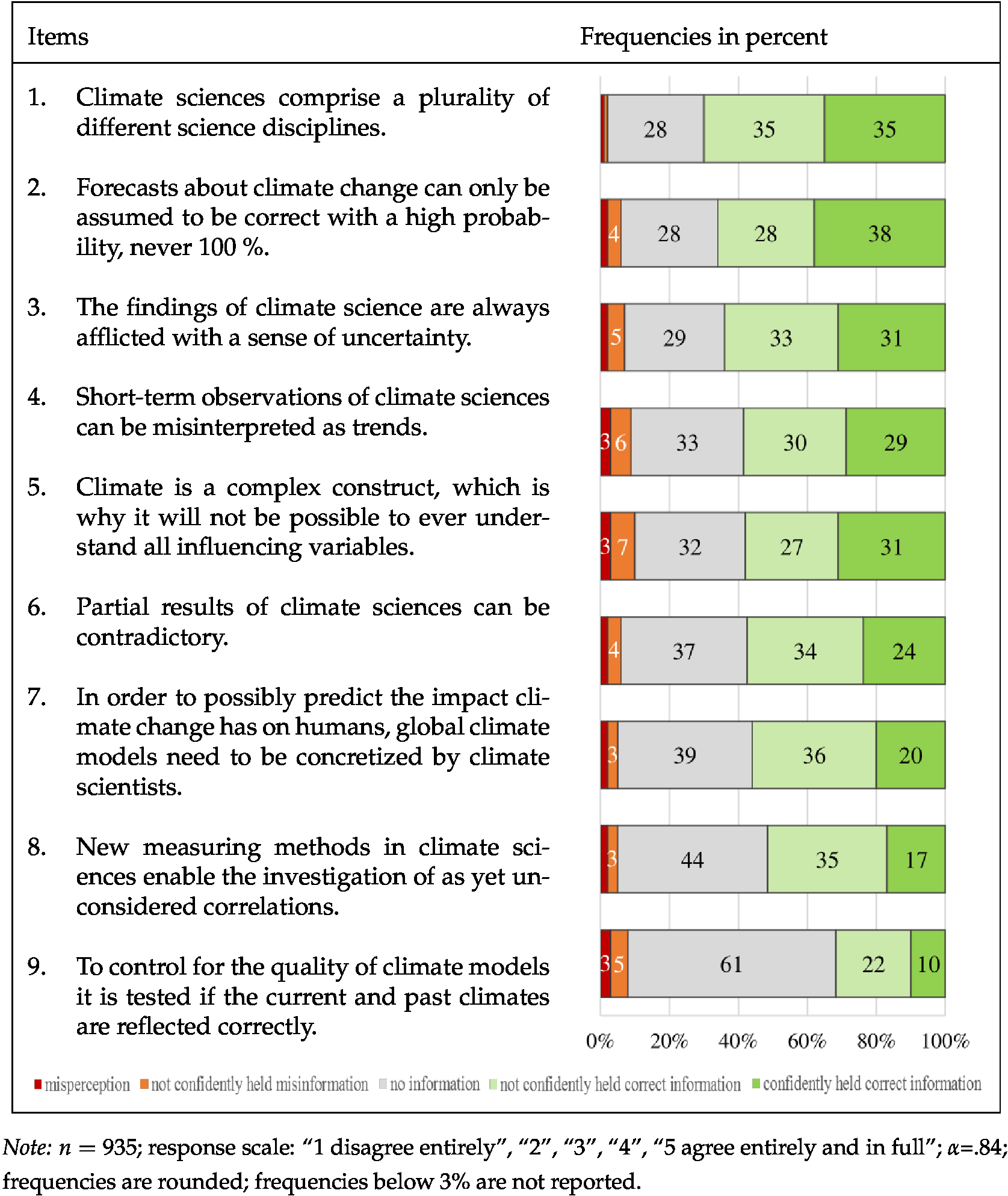

6.1.5 Procedural knowledge

All but one item on the uncertainty and complexity of scientific results were answered correctly by a large proportion of respondents (see Table 7). Overall, very few participants (10% or less) were misinformed, and only one to three percent reported misperceptions. Interestingly, the percentage of correctly informed but uncertain respondents is often higher than that of respondents who are confident about their correct information. This might reflect a higher level of uncertainty about how the climate sciences really work. Nevertheless, this conceptualization only captures whether survey participants are correctly informed about results and procedures in the climate sciences, not the degree to which they trust scientists.

In summary, the means of the first four knowledge dimensions are very similar when the first measurement option (A1) is used and only correct answers are counted. On average, respondents answered about half of the items per dimension correctly (see Table 8). Tobler, Visschers and Siegrist [2012] reported higher means using the same indices. The respondents in our study knew slightly more about the effects and causes of climate change than about the physical basics of greenhouse gases or about climate-friendly actions. As already seen at the single-item level, the highest proportion of correct answers is found for the newly introduced procedural knowledge scale. Although the mean differences between the dimensions are rather small, they indicate that measuring each dimension separately is worthwhile.

The reproduced correlation table for all knowledge dimensions and education shows mainly medium-sized correlations ( ) between the different knowledge dimensions, similar to the results of Tobler, Visschers and Siegrist [2012]. Procedural knowledge is also positively correlated with all other dimensions, with medium-sized coefficients. Correlations between the knowledge indices and education are weaker but significantly positive ( ).

6.2 Comparison of results according to A1, A2, A3 (RQ2)

What exactly are the differences in the descriptive results depending on the response scale?

At the single-item level, some differences from the Swiss study were detected in the frequencies of informed, uninformed and misinformed survey participants. These findings may indicate that German respondents are, in fact, less knowledgeable and more often uninformed or misinformed than Swiss respondents. However, since neither sample was fully representative and since Internet users were studied here, unlike in the Swiss study, a sampling effect is more likely. Furthermore, at the single-item level, the five-point Likert scale used in this study (A3) may have led to a more scattered distribution than the threefold option (A2) used by Tobler, Visschers and Siegrist [2012]. Nevertheless, the Likert scale clearly gives scholars more information about the answers, namely how confident respondents were when they answered a knowledge statement correctly or incorrectly.

The general pattern in the means of all five knowledge dimensions is similar across all three alternative response scales (see Table 2). German Internet users appear to know how scientific knowledge is developed and are aware of the uncertainty of results. However, they know less about basic facts of climate change, such as meteorological and physical relationships.

Compared with the first methodological alternative (A1), counting misinformation as negative values in addition to information (A2) resulted in lower means overall and in higher standard deviations, since scores can now also take negative values. In contrast to the first four dimensions, the mean for procedural knowledge reflects a very low level of misinformation. The means under the third alternative (A3) lie slightly above the midpoint of the scale. This indicates that most respondents either did not know the answer or were uncertain about their (in fact correct) response. In the second response format, correct and incorrect responses can offset each other: a score of zero denotes either that no information is present on any item or that information and misinformation are equally balanced. Because this offsetting only occurs when items are aggregated, method two (A2), as well as method three (A3), which adds further differentiation through confidence, is particularly suitable for evaluating individual items.
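The offsetting described above can be seen in a small hypothetical example: two respondents with very different answer patterns receive the same A2 mean of zero, while A1 separates them. Scoring A1 as 1 for a correct answer and 0 otherwise, and A2 as +1/0/-1, follows the description in the text; treating the midpoint 3 and a missing answer as "no information", the item set, and the function names are assumptions for illustration.

```python
def is_correct(response, statement_is_true):
    """True/False for a substantive answer; None for the midpoint (3) or no response."""
    if response is None or response == 3:
        return None
    return (response > 3) == statement_is_true


def a1_mean(responses, truth):
    """A1: only correct answers count (1); everything else scores 0."""
    scores = [1 if is_correct(r, t) else 0 for r, t in zip(responses, truth)]
    return sum(scores) / len(scores)


def a2_mean(responses, truth):
    """A2: correct = +1, no information = 0, misinformation = -1."""
    scores = []
    for r, t in zip(responses, truth):
        c = is_correct(r, t)
        scores.append(0 if c is None else (1 if c else -1))
    return sum(scores) / len(scores)


# Hypothetical four-item dimension with two true and two false statements.
truth = [True, True, False, False]
no_information = [3, 3, 3, 3]       # midpoint on every item
half_misinformed = [5, 4, 5, 4]     # agrees with everything: right on true items, wrong on false ones

print(a2_mean(no_information, truth), a2_mean(half_misinformed, truth))  # 0.0 0.0
print(a1_mean(no_information, truth), a1_mean(half_misinformed, truth))  # 0.0 0.5
```

At the level of a single item, by contrast, an A2 score of 0 can only mean that no information was given, which is why the ambiguity does not arise there.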

To conclude, the results across the presented operationalizations underline the importance of thoroughly planned and designed questionnaires.

6.3 The knowledge-attitude interface (RQ3)

Different dimensions of climate change knowledge, as well as the types of knowledge (being informed, uninformed or misinformed) and the confidence with which information or misinformation is reported, could be linked to various attitudes concerning climate-friendly action. Data collected with broader response formats are therefore expected to have a higher chance of detecting the relations hypothesized by the knowledge deficit model.

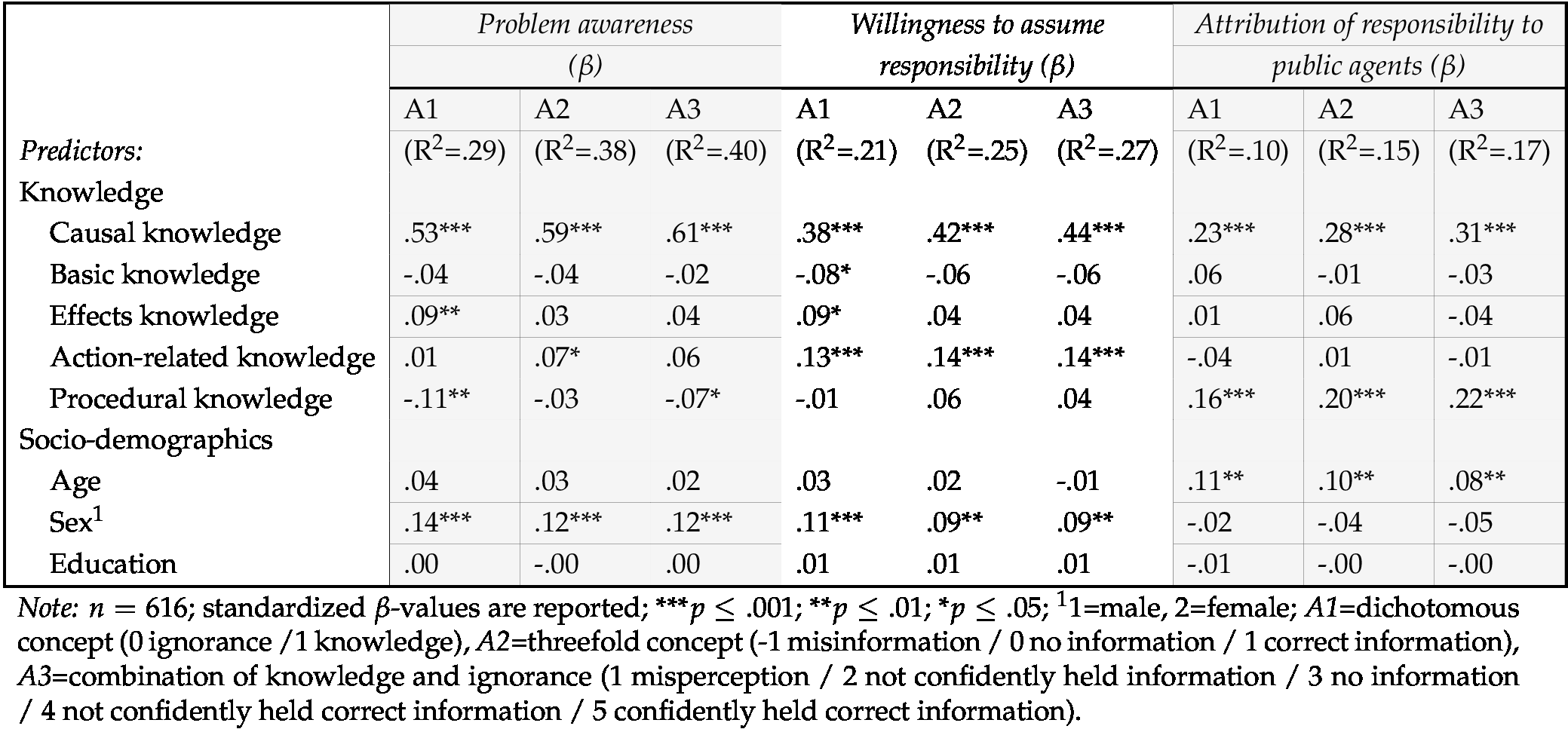

The linear regressions show that the results differ depending on the response format (see Table 9).

In our data, some significant positive beta coefficients link knowledge and socio-demographic variables to attitudes toward climate change. The predictors explain a substantial amount of variance in problem awareness (R² – ) and willingness to assume responsibility (R² – ). The model explains less variance in the attribution of responsibility to public agents (R² – ). Knowledge about climate change and its causes is the strongest predictor of all attitudinal variables, with medium to strong effects. Thus, the more people know about what causes climate change, the higher their problem awareness; they are also more convinced that individual citizens can counteract climate change and that public agents such as political or economic actors have to take action. Consistent with previous studies [e.g., Arlt, Hoppe and Wolling, 2011; Taddicken, 2013; Zhao, 2009], all other effects are small. Greater knowledge about climate-(un)friendly everyday activities is linked to a stronger belief that citizens should assume responsibility. The more respondents know about the uncertainty of scientific findings and the limitations of the climate sciences, the more they attribute responsibility to public agents. While climate-friendly attitudes are independent of respondents' education, significant beta coefficients were found for age and sex: the attribution of responsibility to public agents increases with respondents' age, and female respondents are more likely to be concerned about climate change and show a stronger willingness to assume responsibility.

For each attitudinal variable, R² increases with a more differentiated response scale, and thus with more information. Unlike the medium-sized or large effects (causal knowledge), individual small beta coefficients are less consistent and vary more strongly from one response format to another. While some beta coefficients increase with greater differentiation, some significant effects present under the first option disappear under the second and reappear under the third. Interestingly, the positive effect of effects knowledge on problem awareness and on willingness to assume responsibility vanishes once confidence is integrated into the response format. In contrast, a positive link between action-related knowledge and problem awareness is significant only for the second response format.
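Table 9 reports standardized beta coefficients and R². As a reminder of what these two quantities are, the following pure-Python sketch fits an ordinary least squares model on z-scored variables (so no intercept is needed) and returns the betas and R². It omits significance tests and is not the authors' analysis; the function names are hypothetical. Fitting the same attitude variable once with knowledge indices scored under A1, once under A2 and once under A3 would yield the kind of comparison discussed above.

```python
from statistics import mean, pstdev


def standardize(xs):
    """z-scores using the population standard deviation (column must not be constant)."""
    m, s = mean(xs), pstdev(xs)
    return [(x - m) / s for x in xs]


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def standardized_ols(predictors, outcome):
    """Return (betas, r_squared) for OLS on z-scored predictors and outcome.

    predictors: list of columns (one list per predictor); outcome: list.
    """
    zx = [standardize(col) for col in predictors]
    zy = standardize(outcome)
    k = len(zx)
    # Normal equations: (X'X) beta = X'y
    xtx = [[sum(p * q for p, q in zip(zx[i], zx[j])) for j in range(k)] for i in range(k)]
    xty = [sum(p * q for p, q in zip(zx[i], zy)) for i in range(k)]
    betas = solve(xtx, xty)
    fitted = [sum(betas[i] * zx[i][n] for i in range(k)) for n in range(len(zy))]
    ss_res = sum((y - f) ** 2 for y, f in zip(zy, fitted))
    ss_tot = sum(y ** 2 for y in zy)  # the mean of zy is 0
    return betas, 1 - ss_res / ss_tot


# Toy data (not survey data): the outcome is an exact linear function of the predictors.
x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 5]
y = [2 * a + b for a, b in zip(x1, x2)]
betas, r2 = standardized_ols([x1, x2], y)
print(betas, r2)
```

Because the toy outcome is exactly linear, R² is 1; with real survey data, the betas and R² would of course depend on how the knowledge indices were scored.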

Thus, as single small effects come and go depending on the operationalization, it is not surprising that some studies find small effects and others do not. These results provide empirical evidence that small effects found by linear regression in particular have to be interpreted with caution. They also highlight the need to carefully consider and choose an appropriate response format for data collection. What stands out in Table 9 is that measuring different dimensions of climate change knowledge is very beneficial: whereas most beta coefficients are small, causal knowledge is strongly related to positive attitudes toward climate change. Hence, it is crucial to identify relevant knowledge dimensions and to measure them separately.

7 Discussion

This paper highlights the need to consider theoretical concepts of knowledge in conjunction with its measurement in survey studies, whether correlations between media use, knowledge and attitudes are studied or communication strategies are developed to counteract a lack of information, misinformation or uncertainty among individuals. Yet most scholars use knowledge quizzes with a dichotomous "true"/"false" response format [e.g., Bråten and Strømsø, 2010], referred to in this paper as the first alternative (A1). Some studies distinguish between informed, misinformed and uninformed respondents by including a third response option (A2) such as "I don't know" [e.g., Kuklinski et al., 2000]. Very few scholars include confidence or certainty as a second dimension in addition to knowledge [e.g., Pasek, Sood and Krosnick, 2015]. The present study combined knowledge and confidence in a five-point Likert scale ranging from 1 "disagree entirely" to 5 "agree entirely and in full" (A3) to survey climate change knowledge among German Internet users ( ). These data were recoded twice to obtain A2 and A1. Four scales by Tobler, Visschers and Siegrist [2012] with items on different aspects of knowledge about climate change (causal knowledge, basic knowledge, effects knowledge and action-related knowledge) were used, and a fifth scale (procedural knowledge), capturing knowledge about the processes of the climate sciences, was introduced.
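The two recoding steps (A3 to A2, then A2 to A1) can be sketched as follows. The sketch assumes that answers to incorrect statements are reverse-coded so that, for every item, 5 means "confidently correct" and 1 means "confidently incorrect", and that "no response" is treated like the midpoint 3; both are assumptions for illustration, since the exact coding is not described in this section, and the function names are hypothetical.

```python
def recode_a3(response, statement_is_true):
    """A3: orient the 1-5 answer so that 5 = confidently correct, 1 = confidently wrong.

    Answers to false statements are reverse-coded; None ("no response")
    is mapped to the midpoint 3 (an assumption for this sketch).
    """
    if response is None:
        return 3
    return response if statement_is_true else 6 - response


def a3_to_a2(a3):
    """First recoding: drop confidence. +1 correct, 0 uninformed, -1 misinformed."""
    if a3 > 3:
        return 1
    if a3 < 3:
        return -1
    return 0


def a2_to_a1(a2):
    """Second recoding: dichotomize. 1 only for a correct answer, 0 otherwise."""
    return 1 if a2 == 1 else 0


# Hypothetical answers to a true, a false and a true statement.
answers = [5, 2, None]
truth = [True, False, True]
a3 = [recode_a3(r, t) for r, t in zip(answers, truth)]  # [5, 4, 3]
a2 = [a3_to_a2(x) for x in a3]                          # [1, 1, 0]
a1 = [a2_to_a1(x) for x in a2]                          # [1, 1, 0]
print(a3, a2, a1)
```

Each step discards information: A2 no longer distinguishes confident from uncertain answers, and A1 additionally merges "uninformed" with "misinformed".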

The aim of this paper was to show what German Internet users know about climate change and about which aspects they are merely uncertain, uninformed or even misinformed. Furthermore, we discussed how strongly results (descriptive statistics and linear regressions with attitudes) can differ depending on the response format and knowledge concept (A1, A2, A3).

Firstly, it was shown that it is essential to identify and measure relevant knowledge dimensions. A high proportion of respondents in the sample were knowledgeable about different aspects of climate change, especially about the processes of the climate sciences and the effects of climate change. The frequencies further showed rather small proportions of misinformed respondents and misperceptions. The newly introduced procedural knowledge scale proved to be highly reliable. On this dimension, we found the lowest proportions of misinformed respondents. One probable reason is the lack of incorrect statements in this scale. Therefore, we suggest that the total number of items, as well as the balance of correct and incorrect statements per scale, be considered whenever a new test is constructed. Although the results indicated high proportions of correctly informed respondents, most respondents reported a high level of uncertainty about how the climate sciences work and how scientific results are produced. Thus, they may have guessed the correct answers. One possible explanation is that media reporting has so far focused on the causes and effects of climate change and has paid little attention to scientific methods. Compared to the Swiss sample from Tobler, Visschers and Siegrist [2012], this sample contained many more uninformed and fewer correctly informed respondents. As neither sample was fully representative, we do not assume that knowledge actually differs between the Swiss and German populations. The results may instead be due to a sampling effect (e.g., mailed vs. online questionnaires) as well as to differences in the response formats and thus in the response options.

Secondly, the analysis revealed the importance of considering different options for conceptualizing and measuring knowledge in survey studies. At first glance this may appear trivial, but knowledge is a complex construct that is challenging to survey. It was shown that, compared to a dichotomous or threefold concept, additionally integrating confidence leads to a considerable gain in information and is thus recommended at the single-item level. Doubling the number of items is not necessary, since knowledge and confidence are captured in a single answer. At the aggregate level, however, the differences in means across the three alternatives are rather minor.

Thirdly, most of the beta coefficients between knowledge and attitudes found here are in line with previous research, most of which detected only minor or no links between knowledge about scientific topics and attitudes [e.g., Lee, Scheufele and Lewenstein, 2005; Taddicken, 2013; Zhao, 2009]. For this reason, the knowledge deficit model, which hypothesizes significant linear correlations between knowledge and attitudes, is often criticized. Interestingly, however, understanding of the causes of climate change was found here to have a medium to large effect on attitudes. Thus, it can be argued that, rather than dismissing the knowledge deficit model entirely, researchers should study effects based on different knowledge dimensions. Furthermore, the regression analyses presented here showed empirically that small significant effects can indeed appear or disappear depending on the response format, and that the total explained variance increases with more differentiated response options.

Although this study serves to stimulate the discussion on concepts and measurements of knowledge, it obviously cannot resolve the issue as a whole. Hence, some limitations of this study need to be addressed.

As the data for this paper stem from the third wave of a panel survey, potential panel effects must be critically noted. Learning effects on the knowledge items are plausible, and an above-average share of people highly interested in the issue of climate change may have remained in the sample until the last wave.

Another debatable aspect is the chosen response format, ranging from 1 "disagree entirely" to 5 "agree entirely and in full". It can be argued that the data collected reflect not only knowledge but also attitudes. Alternatively, a scale from "definitely true" to "definitely false" could be used. Moreover, the question of whether the middle option and the additional "no response" option have identical meanings remains unanswered. A possible solution might be to label the middle option "neither true nor false" or to use a four-point Likert scale with an additional "I don't know" option.

Further, the wording of the questions must be critically addressed. While the effects and procedural knowledge items are worded to measure knowledge of what most scientists accept as scientific evidence and of how scientific results are generated, all other climate change knowledge dimensions could arguably also measure beliefs. The higher means for effects and procedural knowledge might support this idea, introduced by Kahan [2015]. Moreover, the conceptualization of effects and procedural knowledge only reflects whether survey participants are correctly informed about results and procedures in the climate sciences, not the degree to which they trust scientists.

The main limitation of this study is that the different concepts and methods were compared merely by recoding variables after data collection. We hope that our work will stimulate future experimental research.

While the presented gradation is considered highly advantageous for individual items, the usefulness of aggregating both dimensions (knowledge and confidence) into one mean index is debatable, as this can considerably influence the means. Thus, computing two separate indices for each dimension could be considered, as could asking two separate questions for each item.
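The alternative suggested here, computing separate knowledge and confidence indices, can be illustrated with two hypothetical respondents who obtain the same combined A3 mean but have quite different profiles. The definitions below (knowledge as the share of items answered correctly, confidence as the share of substantive answers given at the extreme points 1 or 5), the treatment of "no response" as the midpoint, and the function names are illustrative assumptions, not a procedure proposed in the paper.

```python
def a3_mean(responses, truth):
    """Combined index: answers oriented so 5 = confidently correct; None counted as 3."""
    oriented = [3 if r is None else (r if t else 6 - r) for r, t in zip(responses, truth)]
    return sum(oriented) / len(oriented)


def split_indices(responses, truth):
    """Return (knowledge, confidence) for one respondent on one dimension.

    knowledge:  share of all items answered correctly (0..1)
    confidence: share of substantive answers (not 3/None) at the extreme points 1 or 5;
                None if the respondent gave no substantive answer.
    """
    correct = substantive = extreme = 0
    for r, t in zip(responses, truth):
        if r is None or r == 3:
            continue  # uninformed: contributes to neither index
        substantive += 1
        if r in (1, 5):
            extreme += 1
        if (r > 3) == t:
            correct += 1
    knowledge = correct / len(responses)
    confidence = extreme / substantive if substantive else None
    return knowledge, confidence


# Hypothetical four-item dimension with true statements only.
truth = [True, True, True, True]
uncertain_but_right = [4, 4, 4, 4]
confident_or_uninformed = [5, 5, 3, 3]

print(a3_mean(uncertain_but_right, truth), a3_mean(confident_or_uninformed, truth))  # 4.0 4.0
print(split_indices(uncertain_but_right, truth))      # (1.0, 0.0)
print(split_indices(confident_or_uninformed, truth))  # (0.5, 1.0)
```

Both respondents share a combined mean of 4.0, yet one answered every item correctly without confidence, while the other answered only half of the items, but confidently.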

Further longitudinal and experimental research on media effects is required. It is of practical relevance to study under which conditions misinformation and misperceptions can be corrected [Kuklinski et al., 2000] and which groups of people can be distinguished based on their knowledge. Such results could also help to develop targeted campaigns that precisely address the areas where knowledge gaps or misinformation are widespread, in order to educate audiences about scientific topics such as climate change. Furthermore, the role of public trust in and deference toward science should be investigated. Especially on topics where public ignorance predominates, trust in or deference toward science could act as an even stronger mediator of positive attitudes than knowledge [Lee and Scheufele, 2006]. In particular, when surveying knowledge about the procedures of the climate sciences, or when asking about scientific results on the causes or effects of climate change that are accepted by the majority of climate scientists, trust in science and scientists should also be recorded.

Acknowledgments

The research presented in this paper was conducted as part of the project “Climate change from the audience perspective” under the direction of Prof. Dr. Irene Neverla and Prof. Dr. Monika Taddicken. This project was part of the Special Priority Program 1409 “Science and the public”, which was funded by the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG). The authors would like to thank Ines Lörcher and Prof. Dr. Irene Neverla for their contributions to the project. Further, we are most thankful to all participants who took part in this study. Previous versions of this article were presented at the annual conference of the International Communication Association (ICA), San Diego, May 2017, and the annual conference of the Fachgruppe Wissenschaftskommunikation (division for science communication of the German Communication Association (DGPuK)), Dresden, March 2016. We thank all reviewers, colleagues and the audiences for their helpful comments.

References

Allum, N., Sturgis, P., Tabourazi, D. and Brunton-Smith, I. (2008). ‘Science knowledge and attitudes across cultures: a meta-analysis’. Public Understanding of Science 17 (1), pp. 35–54. https://doi.org/10.1177/0963662506070159 .

Alvarez, R. M. and Franklin, C. H. (1994). ‘Uncertainty and political perceptions’. The Journal of Politics 56 (3), pp. 671–688. https://doi.org/10.2307/2132187 .

Anik, S. I. and Khan, M. A. S. A. (2012). ‘Climate change adaptation through local knowledge in the north eastern region of Bangladesh’. Mitigation and Adaptation Strategies for Global Change 17 (8), pp. 879–896. https://doi.org/10.1007/s11027-011-9350-6 .

Arlt, D., Hoppe, I. and Wolling, J. (2011). ‘Climate change and media usage: effects on problem awareness and behavioural intentions’. International Communication Gazette 73 (1-2), pp. 45–63. https://doi.org/10.1177/1748048510386741 .

Bauer, M. W., Allum, N. and Miller, S. (2007). ‘What can we learn from 25 years of PUS survey research? Liberating and expanding the agenda’. Public Understanding of Science 16 (1), pp. 79–95. https://doi.org/10.1177/0963662506071287 .

Bråten, I. and Strømsø, H. I. (2010). ‘When law students read multiple documents about global warming: examining the role of topic-specific beliefs about the nature of knowledge and knowing’. Instructional Science 38 (6), pp. 635–657. https://doi.org/10.1007/s11251-008-9091-4 .

Connor, M. and Siegrist, M. (2010). ‘Factors influencing people’s acceptance of gene technology: the role of knowledge, health expectations, naturalness and social trust’. Science Communication 32 (4), pp. 514–538. https://doi.org/10.1177/1075547009358919 .

Dijkstra, E. M. and Goedhart, M. J. (2012). ‘Development and validation of the ACSI: measuring students’ science attitudes, pro-environmental behaviour, climate change attitudes and knowledge’. Environmental Education Research 18 (6), pp. 733–749. https://doi.org/10.1080/13504622.2012.662213 .

Durant, J., Gaskell, G., Bauer, M. W., Midden, C., Liakopoulos, M. and Scholten, L. (2000). ‘Two cultures of public understanding of science and technology in Europe’. In: Between understanding and trust: the public, science and technology. Ed. by M. Dierkes and C. Grote. Amsterdam, The Netherlands: Taylor & Francis, pp. 131–156.

Engesser, S. and Brüggemann, M. (2016). ‘Mapping the minds of the mediators: the cognitive frames of climate journalists from five countries’. Public Understanding of Science 25 (7), pp. 825–841. https://doi.org/10.1177/0963662515583621 .

Evans, J. H. (2011). ‘Epistemological and moral conflict between religion and science’. Journal for the Scientific Study of Religion 50 (4), pp. 707–727. https://doi.org/10.1111/j.1468-5906.2011.01603.x .

Flynn, D. J., Nyhan, B. and Reifler, J. (2017). ‘The Nature and Origins of Misperceptions: Understanding False and Unsupported Beliefs About Politics’. Political Psychology 38, pp. 127–150. https://doi.org/10.1111/pops.12394 .

Gustafson, A. and Rice, R. E. (2016). ‘Cumulative advantage in sustainability communication’. Science Communication 38 (6), pp. 800–811. https://doi.org/10.1177/1075547016674320 .

Heidmann, I. and Milde, J. (2013). ‘Communication about scientific uncertainty: how scientists and science journalists deal with uncertainties in nanoparticle research’. Environmental Sciences Europe 25 (1), p. 25. https://doi.org/10.1186/2190-4715-25-25 .

Hulme, M. and Mahony, M. (2010). ‘Climate change: what do we know about the IPCC?’ Progress in Physical Geography 34 (5), pp. 705–718. https://doi.org/10.1177/0309133310373719 .

Janich, N., Rhein, L. and Simmerling, A. (2010). ‘“Do I know what I don’t know?”: the communication of non-knowledge and uncertain knowledge in science’. Fachsprache. International Journal of Specialized Communication 32 (3-4), pp. 86–99. https://doi.org/10.24989/fs.v32i3-4.1392 .

Kahan, D. M. (2015). ‘Climate-science communication and the measurement problem’. Political Psychology 36, pp. 1–43. https://doi.org/10.1111/pops.12244 .

Kahlor, L. and Rosenthal, S. (2009). ‘If we seek, do we learn?’ Science Communication 30 (3), pp. 380–414. https://doi.org/10.1177/1075547008328798 .

Kaiser, F. G. and Fuhrer, U. (2003). ‘Ecological Behavior’s Dependency on Different Forms of Knowledge’. Applied Psychology 52 (4), pp. 598–613. https://doi.org/10.1111/1464-0597.00153 .

Keohane, R. O., Lane, M. and Oppenheimer, M. (2014). ‘The ethics of scientific communication under uncertainty’. Politics, Philosophy & Economics 13 (4), pp. 343–368. https://doi.org/10.1177/1470594x14538570 .

Kiel, E. and Rost, F. (2002). Einführung in die Wissensorganisation: Grundlegende Probleme und Begriffe. Würzburg, Germany: Ergon.

Kirilenko, A. P. and Stepchenkova, S. O. (2012). ‘Climate change discourse in mass media: application of computer-assisted content analysis’. Journal of Environmental Studies and Sciences 2 (2), pp. 178–191. https://doi.org/10.1007/s13412-012-0074-z .

Knorr-Cetina, K. (2002). Wissenskulturen: Ein Vergleich naturwissenschaftlicher Wissensformen. Frankfurt am Main, Germany: Suhrkamp.

Ko, H. (2016). ‘In science communication, why does the idea of a public deficit always return? How do the shifting information flows in healthcare affect the deficit model of science communication?’ Public Understanding of Science 25 (4), pp. 427–432. https://doi.org/10.1177/0963662516629746 .

Krosnick, J. A., Holbrook, A. L., Lowe, L. and Visser, P. S. (2006). ‘The origins and consequences of democratic citizens’ policy agendas: a study of popular concern about global warming’. Climatic Change 77 (1-2), pp. 7–43. https://doi.org/10.1007/s10584-006-9068-8 .

Kuklinski, J. H., Quirk, P. J., Jerit, J., Schwieder, D. and Rich, R. F. (2000). ‘Misinformation and the currency of democratic citizenship’. The Journal of Politics 62 (3), pp. 790–816. https://doi.org/10.1111/0022-3816.00033 .

Ladwig, P., Dalrymple, K. E., Brossard, D., Scheufele, D. A. and Corley, E. A. (2012). ‘Perceived familiarity or factual knowledge? Comparing operationalizations of scientific understanding’. Science and Public Policy 39 (6), pp. 761–774. https://doi.org/10.1093/scipol/scs048 .

Lee, C.-J. and Scheufele, D. A. (2006). ‘The influence of knowledge and deference toward scientific authority: a media effects model for public attitudes toward nanotechnology’. Journalism & Mass Communication Quarterly 83 (4), pp. 819–834. https://doi.org/10.1177/107769900608300406 .

Lee, C.-J., Scheufele, D. A. and Lewenstein, B. V. (2005). ‘Public attitudes toward emerging technologies’. Science Communication 27 (2), pp. 240–267. https://doi.org/10.1177/1075547005281474 .

Lombardi, D., Sinatra, G. M. and Nussbaum, E. M. (2013). ‘Plausibility reappraisals and shifts in middle school students’ climate change conceptions’. Learning and Instruction 27, pp. 50–62. https://doi.org/10.1016/j.learninstruc.2013.03.001 .

Maslin, M. (2013). ‘Cascading uncertainty in climate change models and its implications for policy’. The Geographical Journal 179 (3), pp. 264–271. https://doi.org/10.1111/j.1475-4959.2012.00494.x .

McCright, A. M. and Dunlap, R. E. (2011). ‘Cool dudes: the denial of climate change among conservative white males in the United States’. Global Environmental Change 21 (4), pp. 1163–1172. https://doi.org/10.1016/j.gloenvcha.2011.06.003 .

McKercher, B., Prideaux, B. and Pang, S. F. H. (2013). ‘Attitudes of tourism students to the environment and climate change’. Asia Pacific Journal of Tourism Research 18 (1-2), pp. 108–143. https://doi.org/10.1080/10941665.2012.688514 .

Miller, J. D. (1983). ‘Scientific literacy: a conceptual and empirical review’. Daedalus 112 (2), pp. 29–48. URL: http://www.jstor.org/stable/20024852 .

— (1998). ‘The measurement of civic scientific literacy’. Public Understanding of Science 7 (3), pp. 203–223. https://doi.org/10.1088/0963-6625/7/3/001 .

National Academies of Sciences, Engineering and Medicine (2016). Science literacy: concepts, contexts and consequences. Washington, DC, U.S.A.: The National Academies Press. https://doi.org/10.17226/23595 .

Nisbet, M. C., Scheufele, D. A., Shanahan, J., Moy, P., Brossard, D. and Lewenstein, B. V. (2002). ‘Knowledge, Reservations, or Promise? A Media Effect Model for Public Perceptions of Science and Technology’. Communication Research 29, pp. 584–608. https://doi.org/10.1177/009365002236196 .

Nolan, J. M. (2010). ‘“An inconvenient truth” increases knowledge, concern and willingness to reduce greenhouse gases’. Environment and Behavior 42 (5), pp. 643–658. https://doi.org/10.1177/0013916509357696 .

Painter, J. (2013). Climate change in the media: Reporting risk and uncertainty. London, U.K.: IB Tauris & Co Ltd. and RISJ.

Pardo, R. and Calvo, F. (2002). ‘Attitudes toward science among the European public: a methodological analysis’. Public Understanding of Science 11 (2), pp. 155–195. https://doi.org/10.1088/0963-6625/11/2/305 .

Pasek, J., Sood, G. and Krosnick, J. A. (2015). ‘Misinformed about the affordable care act? Leveraging certainty to assess the prevalence of misperceptions’. Journal of Communication 65 (4), pp. 660–673. https://doi.org/10.1111/jcom.12165 .

Pearce, W., Holmberg, K., Hellsten, I. and Nerlich, B. (2014). ‘Climate Change on Twitter: Topics, Communities and Conversations about the 2013 IPCC Working Group 1 Report’. PLOS ONE 9 (4), e94785. https://doi.org/10.1371/journal.pone.0094785 .

Rauser, F., Schmidt, A., Sonntag, S. and Süsser, D. (2014). ‘ICYESS2013: uncertainty as an example of interdisciplinary language problems’. Bulletin of the American Meteorological Society 95 (6), ES106–ES108. https://doi.org/10.1175/bams-d-13-00271.1 .

Ravetz, J. R. (1993). ‘The sin of science: ignorance of ignorance’. Science Communication 15 (2), pp. 157–165. https://doi.org/10.1177/107554709301500203 .

Retzbach, A. and Maier, M. (2014). ‘Communicating scientific uncertainty: media effects on public engagement with science’. Communication Research 42 (3), pp. 429–456. https://doi.org/10.1177/0093650214534967 .

Reynolds, T. W., Bostrom, A., Read, D. and Morgan, M. G. (2010). ‘Now what do people know about global climate change? Survey studies of educated laypeople’. Risk Analysis 30 (10), pp. 1520–1538. https://doi.org/10.1111/j.1539-6924.2010.01448.x .

Royal Society of London (1985). The public understanding of science. London, U.K.: The Royal Society of London. URL: https://royalsociety.org/topics-policy/publications/1985/public-understanding-science/ .

Ryghaug, M., Sørensen, K. H. and Næss, R. (2011). ‘Making sense of global warming: Norwegians appropriating knowledge of anthropogenic climate change’. Public Understanding of Science 20 (6), pp. 778–795. https://doi.org/10.1177/0963662510362657 .

Schäfer, M. S. (2007). Wissenschaft in den Medien: Die Mediatisierung naturwissenschaftlicher Themen. Wiesbaden, Germany: VS Verlag für Sozialwissenschaften.

Schäfer, M. S., Ivanova, A. and Schmidt, A. (2014). ‘What drives media attention for climate change? Explaining issue attention in Australian, German and Indian print media from 1996 to 2010’. International Communication Gazette 76 (2), pp. 152–176. https://doi.org/10.1177/1748048513504169 .

Shephard, K., Harraway, J., Lovelock, B., Skeaff, S., Slooten, L., Strack, M., Furnari, M. and Jowett, T. (2014). ‘Is the environmental literacy of university students measurable?’ Environmental Education Research 20 (4), pp. 476–495. https://doi.org/10.1080/13504622.2013.816268 .

Sluijs, J. P. van der (2012). ‘Uncertainty and Dissent in Climate Risk Assessment: A Post-Normal Perspective’. Nature and Culture 7 (2), pp. 174–195. https://doi.org/10.3167/nc.2012.070204 .

Stocking, S. H. and Holstein, L. W. (1993). ‘Constructing and reconstructing scientific ignorance’. Knowledge 15 (2), pp. 186–210. https://doi.org/10.1177/107554709301500205 .

Storch, H. von (2009). ‘Climate research and policy advice: scientific and cultural constructions of knowledge’. Environmental Science & Policy 12 (7), pp. 741–747. https://doi.org/10.1016/j.envsci.2009.04.008 .

Sturgis, P. and Allum, N. (2004). ‘Science in Society: Re-Evaluating the Deficit Model of Public Attitudes’. Public Understanding of Science 13 (1), pp. 55–74. https://doi.org/10.1177/0963662504042690 .

Sundblad, E.-L., Biel, A. and Gärling, T. (2009). ‘Knowledge and confidence in knowledge about climate change among experts, journalists, politicians and laypersons’. Environment and Behavior 41 (2), pp. 281–302. https://doi.org/10.1177/0013916508314998 .

Taddicken, M. (2013). ‘Climate Change From the User’s Perspective: The Impact of Mass Media and Internet Use and Individual and Moderating Variables on Knowledge and Attitudes’. Journal of Media Psychology 25 (1), pp. 39–52. https://doi.org/10.1027/1864-1105/a000080 .

Taddicken, M. and Neverla, I. (2011). ‘Klimawandel aus Sicht der Mediennutzer. Multifaktorielles Wirkungsmodell der Medienerfahrung zur komplexen Wissensdomäne Klimawandel’. Medien & Kommunikationswissenschaft 59 (4), pp. 505–525. https://doi.org/10.5771/1615-634x-2011-4-505 .

Taddicken, M. and Reif, A. (2016). ‘Who participates in the climate change online discourse? A typology of Germans’ online engagement’. Communications 41 (3), pp. 315–337. https://doi.org/10.1515/commun-2016-0012 .

Tobler, C., Visschers, V. H. M. and Siegrist, M. (2012). ‘Consumers’ knowledge about climate change’. Climatic Change 114 (2), pp. 189–209. https://doi.org/10.1007/s10584-011-0393-1 .

Trumbo, C. (1996). ‘Constructing climate change: claims and frames in US news coverage of an environmental issue’. Public Understanding of Science 5 (3), pp. 269–283. https://doi.org/10.1088/0963-6625/5/3/006 .

Vignola, R., Klinsky, S., Tam, J. and McDaniels, T. (2013). ‘Public perception, knowledge and policy support for mitigation and adaption to climate change in Costa Rica: comparisons with North American and European studies’. Mitigation and Adaptation Strategies for Global Change 18 (3), pp. 303–323. https://doi.org/10.1007/s11027-012-9364-8 .

Weingart, P. (2001). Die Stunde der Wahrheit?: Zum Verhältnis der Wissenschaft zu Politik, Wirtschaft und Medien in der Wissensgesellschaft. 1st ed. Weilerswist, Germany: Velbrück Wiss.

Weingart, P., Engels, A. and Pansegrau, P. (2000). ‘Risks of communication: discourses on climate change in science, politics and the mass media’. Public Understanding of Science 9 (3), pp. 261–283. https://doi.org/10.1088/0963-6625/9/3/304 .

Wippermann, C., Calmbach, M. and Kleinhückelkotten, S. (2008). Umweltbewusstsein in Deutschland 2008. Ergebnisse einer repräsentativen Bevölkerungsumfrage. Forschungsprojekt des Umweltbundesamts. [Environmental awareness in Germany 2008: findings of a representative survey]. URL: https://www.klimanavigator.de/imperia/md/content/csc/klimanavigator/bmu_umfrage_umwetlbewusstsein_deutschland_2008.pdf .

Zhao, X. (2009). ‘Media Use and Global Warming Perceptions: A Snapshot of the Reinforcing Spirals’. Communication Research 36 (5), pp. 698–723. https://doi.org/10.1177/0093650209338911 .

Zhao, X., Leiserowitz, A. A., Maibach, E. W. and Roser-Renouf, C. (2011). ‘Attention to science/environment news positively predicts and attention to political news negatively predicts global warming risk perceptions and policy support’. Journal of Communication 61 (4), pp. 713–731. https://doi.org/10.1111/j.1460-2466.2011.01563.x .

Authors

Monika Taddicken is a professor of Communication and Media Sciences at the Technische Universität Braunschweig, Germany. She received her Ph.D. in communication research from the University of Hohenheim, Germany. Her current work focuses on the audience's perspective of science communication. She has also published several papers on computer-mediated communication and survey methodology. E-mail: m.taddicken@tu-braunschweig.de .

Anne Reif is a research assistant at the Department of Communication and Media Sciences at the Technische Universität Braunschweig, Germany. She holds an M.A. degree in Media and Communication Science from the Ilmenau University of Technology, Germany. She is currently working on her Ph.D. project, which looks at trust in and knowledge about science from the lay audience’s perspective. E-mail: a.reif@tu-braunschweig.de .

Imke Hoppe is a post-doctoral research assistant at the Institute for Journalism and Communication Science (digital communication and sustainability) at the University of Hamburg, Germany. She received her Ph.D. in communication research from the Ilmenau University of Technology, Germany. She has published several papers on climate change communication and its effects. E-mail: imke.hoppe@uni-hamburg.de .

1 Please note that some instruments, such as the NASEM report (National Academies of Sciences, Engineering, and Medicine), do not apply only one question type but vary question types within the questionnaire.

2 Of course, reasons for climate-unfriendly behavior other than misperceptions exist at the complex, interacting knowledge-attitude interface [Sturgis and Allum, 2004 ].

3 The intermediate response options were labeled "2", "3", and "4". After data collection, a sixth response option, "no statement", was recoded as point 3 on the scale, since both response options are assumed to denote "no information" or "I don't know".