1 Introduction

Over recent decades, there has been a significant increase in the number of science centres [Schiele, 2021]. In contrast with traditional museums, which predominantly conserve and display science-related objects, science centres are characterised by interactive and mainly self-directed learning experiences [Amodio, 2012; Gilbert, 2001]. Many science centres therefore aim to be accessible to all visitors, independent of their background and level of scientific knowledge [Rennie, 2001].

CERN, the European Laboratory for Particle Physics, is a leading institution in the field of high-energy physics and fundamental research. Public communication and education are an inherent part of the organisation’s mission [CERN, 2021], with exhibitions playing a key role from the very beginning [CERN, 1956]. Recently, to expand CERN’s offer to the public, a new education and outreach centre has been built: CERN Science Gateway [CERN, 2023a]. Alongside an education laboratory and a venue for public events, the centre hosts three new permanent exhibitions.

The CERN Science Gateway exhibitions, a large and ambitious project, are the result of a collaborative effort among a variety of actors: the CERN exhibition team, members of the CERN community, CERN visitors (actual and prospective), design and multimedia companies, building companies, and external advisors. In this article, we (a mix of exhibition developers with a background in the natural sciences and social science researchers) reflect on the dynamics within our team and share the challenges we faced and the lessons we learned in combining exhibition development practice with evaluation research.

Over the last 30 years, CERN’s exhibition team has benefited considerably from the rich learning opportunities stemming from evaluation and audience research. To give just a few examples: in 1994, a study of CERN’s ‘Microcosm’ exhibition carried out by Heather Mayfield, on sabbatical from the London Science Museum, showed that only 6% of visitors experienced the exhibition area showcasing the Large Hadron Collider, a project that was not yet fully funded and was a communication priority for CERN at the time. Her findings led to new investment in the exhibitions, a complete redesign of the space and, indeed, the hiring of one of the authors of this article, who is still at CERN today. In 2000, an evaluation carried out by CERN’s exhibition team revealed several critical misconceptions about the structure of matter held by visiting high school students, which informed subsequent exhibition development work. In 2011, Ben Gammon was contracted to make an overall assessment of CERN’s two exhibition spaces at the time [Ben Gammon Consulting, 2012a, 2012b]. A combination of tracking and interview data revealed a wealth of information on how different publics perceived and experienced the exhibitions. This again triggered change, with improvements to maintenance and content.

In short, evaluations have grown in both sophistication and breadth and are now a natural part of the exhibition development process at CERN. Over the years, a mix of approaches has been employed, ranging from working with external consultants to conducting internal studies, and leading ultimately to today’s approach of embedding researchers within the exhibition team. This embedded approach is extremely rewarding and highly impactful, but it also brings its own set of challenges.

It is important to note that in this paper we use the terms ‘research’ and ‘evaluation’ interchangeably. There is no consensus in the field regarding the relationship between these two terms: for example, some see ‘research’ and ‘evaluation’ as separate yet intersecting entities, while others consider ‘evaluation’ to be a subset of ‘research’ [Wanzer, 2020]. We tend to agree with the latter view, understanding ‘evaluation’ as a form of applied social research that explicitly seeks to inform practice: in our case, the practice of developing science exhibitions.

2 Challenge 1: coordinating roles and responsibilities

When it comes to science communication, organisational culture and structure vary considerably across institutions [Schwetje, Hauser, Böschen & Leßmöllmann, 2020]. Some scholars argue that it is crucial to take these structural specificities into account, as they may influence the type and scope of communication carried out by the institution [Davies & Horst, 2016].

Throughout the four years of work on the Science Gateway exhibitions, the CERN exhibition team comprised several practising science communicators, who led exhibition development and project management, and one or two science communication researchers, who were responsible for all evaluation studies accompanying exhibition development. All our studies were conducted in both English and French, the two official languages of CERN.

We do realise that having even one team member working full-time on evaluation can be considered almost ‘a luxury’ by other scientific research laboratories, which may lack the resources or internal institutional support to implement such a structure. On the other hand, as the field transitions towards more evidence-based practice [Pellegrini, 2021], it is becoming an industry standard for many large science centres and museums to have a whole team, a department or external contractors dedicated to research and evaluation [Bequette, Cardiel, Cohn, Kollmann & Lawrenz, 2019]. Given the dual nature of CERN Science Gateway, which is both a full-fledged visitor centre and part of a large particle physics laboratory, our research-practice collaboration can therefore be considered neither exceptionally well resourced nor under-resourced.

Moreover, having researchers work alongside practitioners on a project that is unprecedentedly large and ambitious for the institution is not necessarily the same as having ‘in-house’ evaluators. While the practitioners were hired directly by CERN, the researchers were associate members of personnel from universities with which CERN had set up collaboration agreements. This may seem like a minor detail, but in practice it meant that, when it came to science communication research, CERN had to bring in external expertise. For example, the evaluation of exhibit prototypes adapted for blind and visually impaired visitors was carried out by a PhD student [Chennaz et al., 2023] who specialised in the topic and had established access to potential study participants.

The downside of such high specialisation was an ‘expertise gap’. On the one hand, we had exhibition developers working on the content; on the other, we had social science researchers devising evaluation studies. However, we did not have a person solely responsible for making exhibit prototypes, implementing the ideas of the former and enabling the work of the latter. Ideally, this would have been someone with diverse technical skills, access to a workshop and enthusiasm for making ‘quick and dirty’ (that is, cheap yet functional) prototypes that could then be taken to a group of prospective visitors and evaluated. This was especially problematic for hands-on exhibits: producing ‘evaluatable’ prototypes, even in the simplest form, required more time, creativity and technical expertise than the evaluation researchers had. Multimedia exhibits, at least at an early stage, could be prototyped with just a few pieces of paper, as was successfully demonstrated by another science communication PhD student whose expertise lay in the design of interactive experiences [Molins Pitarch, 2023].

Finally, the structure of our team may also be characterised from a hierarchical perspective. As it was evaluation research that supported the development of a communication product (and not vice versa), the researchers’ role was secondary to that of the practitioners. In particular, exhibition developers defined the priorities for evaluation, and researchers then carried out the corresponding studies. This arrangement differs notably from the type of partnerships described by Peterman et al. [2021] and Allen and Gutwill [2015], in which researchers and practitioners assumed equal roles. Interestingly, despite this ‘power imbalance’, both practitioners and researchers in our team invested considerable effort in adapting to and learning from each other, always acting on the basis of mutual trust and respect.

One aspect that helped to establish common ground in our case was mutual learning about science communication as an academic discipline. Although keeping colleagues up to date with relevant findings might be expected to be mainly the researchers’ responsibility [Ziegler, Hedder & Fischer, 2021], it is a strong asset when such exchanges are initiated by both researchers and practitioners. In our case, for example, much of the content development and the corresponding evaluation research was grounded in, or at least deeply inspired by, several major science communication studies. In particular, the ASPIRES project [Archer et al., 2013; Archer, Moote, MacLeod, Francis & DeWitt, 2020] and the concept of ‘science capital’ [Archer, Dawson, DeWitt, Seakins & Wong, 2015; DeWitt, Archer & Mau, 2016; Moote, Archer, DeWitt & MacLeod, 2020, 2021], as well as work on the role of structural inequalities in science (dis)engagement [Dawson, 2014a, 2014b, 2018, 2019], served as core references throughout our work on the CERN Science Gateway exhibitions.

Another big idea permeating science communication scholarship is co-production [see e.g. Leach, 2022]. Design has evolved from designing for users towards designing with users, reflecting a transition towards more inclusive and participatory practices [Sanders, 2002]. At the very beginning of the project, the practitioners in our team deliberately explored co-design as an option but eventually decided against it. Most importantly, involving visitor groups in properly co-designing the exhibitions would have required a substantial amount of time from all stakeholders. This was not only incompatible with the already ambitious project timeline, but also carried the risk of participants feeling that they had to devote too much time to something they were neither paid nor trained to do. Moreover, by involving various members of our target audiences in a more sporadic and ad hoc manner, we ensured a more diverse and international profile of participants. This is a significant consideration since, unlike many science centres that are deeply rooted in their local communities, CERN is an international organisation and needs to carefully balance its relationships with its Member States [CERN, 2023b].

In general, the rationale and driving force for our research-practice collaboration has been to ensure that the content and approaches of the CERN Science Gateway exhibitions ‘work’ for CERN’s diverse audience groups from a communication perspective. This shared value helped us stay the course throughout the project.

3 Challenge 2: adapting the work methodologies

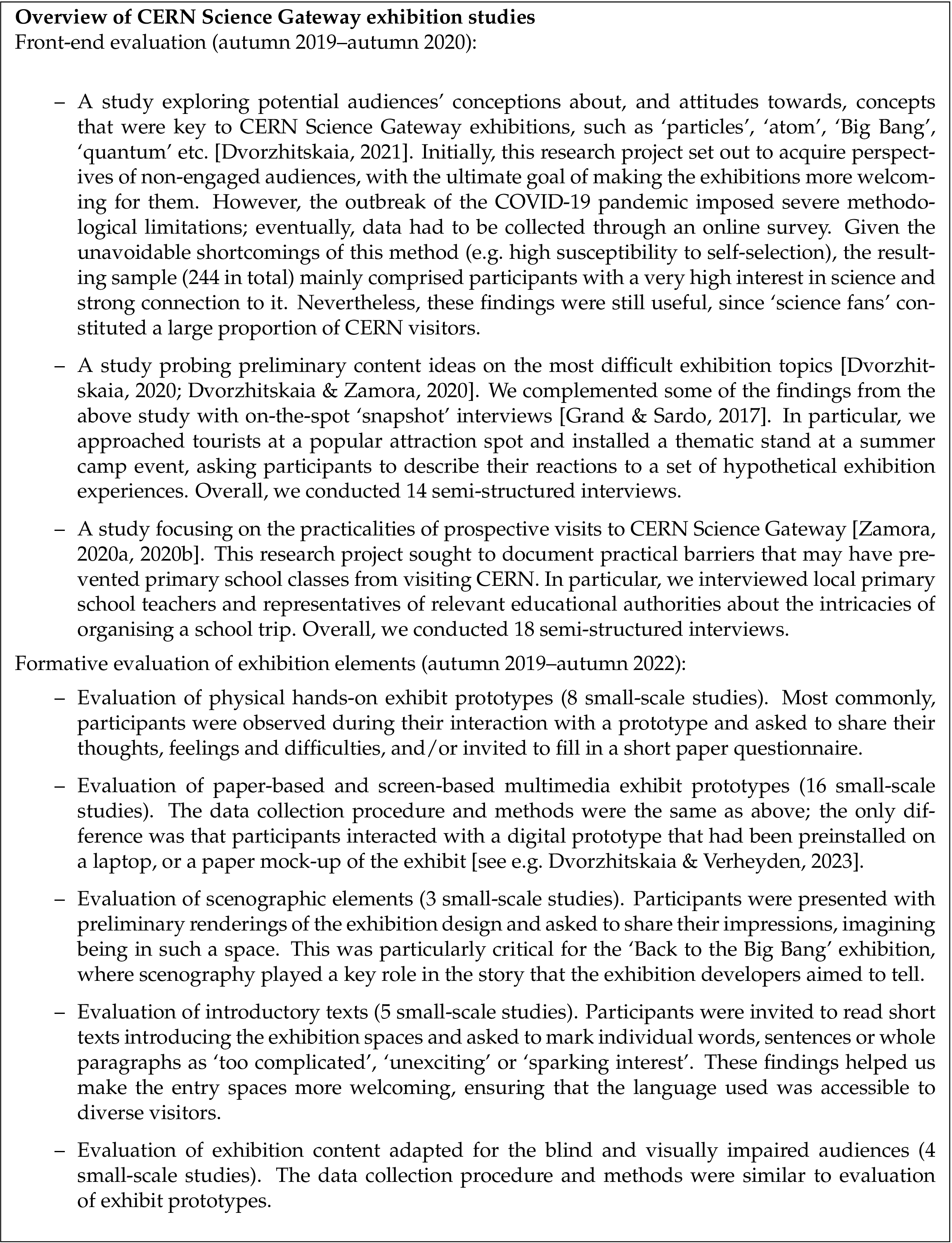

As Table 1 illustrates, the development of the CERN Science Gateway exhibitions was accompanied by a variety of evaluation studies, driven by practitioners’ needs and carried out by social science researchers.

One of the fundamental differences we had to overcome concerned our work processes. For researchers, it was natural to expect to receive all necessary input from practitioners (e.g. specific research interests or priorities) in its final form at the beginning of the project [Pellegrini, 2021]. Only after a literature review, the formulation of research questions, an outline of the methodology, data collection, iterations of data analysis and so on would the findings start to take shape and become translatable into implications for practice. Practitioners, in contrast, needed research input regularly at different stages of the project: when setting up the core messages, when planning the opening times of the exhibitions, when developing exhibits, and so on; essentially, every time a research-informed decision concerning visitors had to be made.

For the collaboration to work, both sides needed to compromise. For example, practitioners had to plan for a longer interval between identifying an evaluation priority and receiving the results. It also helped to build in some time margin in case data analysis took longer than foreseen (which was often the case), while still keeping up with the pace of the project. Researchers, in turn, had to learn to accept the very limited scope of their empirical inquiries. They had the responsibility and the freedom to decide when it was methodologically sufficient to rely on ‘convenience’ samples and when a larger and more diverse group of participants had to be involved for the study to be meaningful. More often than not, it was necessary to settle for the bare minimum in terms of sample size and to adapt data collection procedures to a wide range of circumstances, while still ensuring the quality of the results.

Irrespective of these practical limitations, we almost always applied the principles of qualitative inquiry, which allowed us to keep the focus of a study relatively flexible [Grack Nelson & Cohn, 2015] and to complement observational data and participants’ oral or written responses with the researcher’s tacit impressions [Corbetta, 2003; Patton, 2002; Pellegrini, 2021]. Furthermore, in most of our studies we adopted a sampling strategy that Layder [2013] termed ‘problem sampling’. Similar to purposive sampling, this approach prioritises the relevance of each participant’s perspective to the research problem over the generalisability of the findings.

When reflecting on their experience of research-practice partnership, Peterman et al. [2021] suggest that building an understanding between researchers and practitioners was easier precisely because many of the latter had a background in natural sciences. In our case, however, the robustness of our findings was sometimes questioned by colleagues with a natural science background. This scepticism did not concern science communication research in general, but rather the inevitable methodological limitations of small-scale, qualitative studies. This is hardly surprising, given that qualitative research is often looked down on even by other social scientists [Patton, 2002]. Fortunately, in our case these epistemological disagreements did not significantly affect the project, since the team leader herself had experience in and understanding of qualitative evaluation.

It is also worth noting that, in our experience, the sampling strategy turned out to be more decisive than the exact data collection protocol. As we often relied on data yielded by open questions and unstructured observation, we did not spend time developing and validating a structured data collection tool. In contrast, involving study participants with certain characteristics (such as young age and low interest in science) required extra effort. It was crucial for the team to make a large number of exhibits in CERN Science Gateway accessible and enjoyable for primary school students, an audience that is relatively new to CERN, which has so far mainly attracted high school classes majoring in physics and other science-savvy visitors [Sanders, 2023]. Naturally, it became an evaluation priority to bring in the perspectives of people who had not previously engaged with the subject. In practice, however, it proved quite difficult to explain to a science teacher why we needed to test a physics-themed exhibit with a class of students who had never studied physics, or to convince hiking tourists to answer a few questions about a ‘quantum world’ exhibition. Put simply, deciding in advance exactly how we would collect data was often less critical than ensuring exactly who would take part in a study.

4 Challenge 3: communicating and implementing results

As Peterman et al. [2021] rightfully note, ‘practical knowledge-building also requires practical communication and dissemination strategies’ (p. 10). In line with this thinking, results of the front-end evaluations were presented at CERN either internally for the exhibition team or at meetings with companies that had been selected to design and build CERN Science Gateway exhibitions.

Formative evaluations, on the other hand, were communicated through written reports. These reports (2–15 pages) typically included: a brief description of the data collection procedure; findings related to participants’ interaction with the exhibit prototype; findings related to participants’ understanding of the message that the exhibit aimed to communicate; findings related to participants’ understanding of the connection between the exhibit and CERN’s work (if applicable); and suggestions for improvement. Upon completion, the report was handed over to the practitioners. In the terminology proposed by Allen and Gutwill [2015], this arrangement corresponds to ‘jointly negotiated research with differentiated roles (JNR-D)’, which is characterised by a clear separation of responsibilities, with practitioners and researchers each making decisions only within their own field of expertise.

Reflecting on our collaboration, both practitioners and researchers noted that a written report, however exhaustive, was not enough and that the next step in the process often seemed to be missing. After an evaluation report had been finalised, shared and read, we should have met again to discuss the findings and their practical implications, potentially even co-developing the next version of the exhibit prototype and setting priorities for the next round of evaluation. In other words, we would have preferred a less compartmentalised, more iterative and better integrated collaborative process, thus moving somewhat closer towards ‘jointly negotiated research with integrated roles (JNR-I)’ [Allen & Gutwill, 2015].

In most cases, such a degree of co-involvement was not possible because of the large volume of work: there were dozens of exhibits to be developed and evaluated, which limited our opportunities to participate in each other’s work. The process became somewhat smoother for a short period when the team was joined by a PhD student who made and evaluated paper prototypes: as she worked closely with both practitioners and researchers, she bridged the two worlds and ensured continuity in the workflow for the exhibits she was responsible for.

The fact that researchers did not actively follow up on the implementation of their suggestions could partially explain why, in several cases, evaluation findings were not fully implemented or were diluted amid the abundance of input from different stakeholders. For example, one evaluation study showed a need to change a design solution because study participants clearly did not pick up on the communication message that the design set out to illustrate. In this particular case, however, the graphic coherence of the project outweighed the need to visually convey a specific educational message, and it was therefore decided to keep the design. While the results of our evaluations normally stayed within the domain of science communication and education, there were other determinants shaping the exhibitions, such as aesthetics and design continuity, budgetary and technical limitations, and scientific accuracy. Nevertheless, in cases where researchers strongly advocated against specific wording or gameplay because visitors were very likely not to understand it, their concerns were taken seriously and often redirected the conversation. After all, we all shared the goal of making the exhibitions more accessible and enjoyable for diverse visitor groups.

4.1 The problem of ‘invisible’ research

When it comes to being taken seriously as academics, science communication researchers face the universal pressure to publish in high-ranking journals. Moreover, some scholars in the field call for more publication of evaluation research [Sawyer, Church & Borchelt, 2021].

In our experience, however, this expectation proved difficult to meet. As part of a team working together towards a common goal, researchers were immersed in the continual flow of project deadlines and thus prioritised delivering evaluation reports over writing academic papers. Choosing practice over academia at that moment was also more consistent with the values of research-informed science communication: had the evaluation results come a week later, it could already have been too late to influence decision-making. Furthermore, the scope of most of our formative evaluation studies was unsuitable for standard peer-reviewed publication: the reports were too focused on the specifics of the evaluated exhibition element and too dispersed across topics to be easily combined into one larger study.

To further bridge the research-practice divide, it may be necessary to diversify the types of empirical work that ‘have a right’ to be published. For example, Peterman et al. [2021] suggest that publishers could start accepting research and practice notes alongside standard academic publications. Another solution could be to establish an open evaluation research repository, bringing together the large volume of small-scale studies carried out by individual institutions (e.g. the Exploratorium or the London Science Museum).

5 Concluding remarks

As Ziegler et al. put it, ‘practitioners should not be expected to do the same work as researchers; therefore, meaningful cooperation between research and practice is key’ [2021, p. 4]. We, as a multidisciplinary team of science communication practitioners and researchers, agree with this statement. In this paper, we reflect on our collaboration in the context of developing CERN Science Gateway exhibitions from 2019 to 2023.

Since we had not yet carried out a summative evaluation of the CERN Science Gateway exhibitions at the time of writing, many of the challenges described above may be specific to front-end and formative evaluation studies. These types of research arguably come with greater pressure to deliver results quickly than summative evaluation does, which likely affects their scope and leads to their underrepresentation in the literature [Ziegler et al., 2021].

Nevertheless, some of our key learnings seem to be widely applicable. First of all, it was of the utmost importance to start the collaboration on the basis of mutual respect, progressively building up trust and an understanding of each other’s fields of expertise. Both practitioners and researchers in our team had to remain flexible and open-minded towards the diversity of research methodologies, data collection settings and evaluation results. It was also crucial to discuss and agree in advance on the scope of each evaluation study (and, consequently, on the limitations of its findings) in order to make expectations explicit and manage them.

Taken to the extreme, enthusiasm for research-informed practice can also have a downside. On several occasions, researchers felt that practitioners viewed evaluation as a ‘panacea’ for any disagreement or hesitation. In other words, when there was no obvious solution or when exhibition developers could not reach a consensus, researchers were tasked with finding out what potential visitors would prefer, which would then allow practitioners to decide. While the team agreed that empirical input is generally a good basis for decision-making, there was also a need to recognise that not every aspect of exhibition development is suitable for evaluation with the public. This applies especially to cases where the feature in question would have to be evaluated out of context, or where the object or goal of the evaluation could not be defined clearly enough.

Incorporating research into projects can undoubtedly be daunting for practitioners, as it normally takes a lot of time. Our experience shows, however, that evaluation studies do not have to be large-scale and long-term to provide useful insights. Moreover, we noticed that, perhaps unsurprisingly, the more focused, practice-oriented and ‘down-to-earth’ a study was, the more easily its results could be applied. Such studies nevertheless required the expertise of social scientists to carry out, and practitioners’ years of experience to identify exactly which research problems to address.

Looking at the bigger picture, all our evaluation endeavours so far have admittedly been largely determined by the strategic objectives of the organisation, and thus reactive to the requirements of a given project, the most recent example being CERN Science Gateway. Consequently, our team has never actually taken a step back to ‘evaluate the evaluation’, i.e. to reflect on what kind of evaluation research would be most effective and suitable in the long term. We are grateful for having been prompted to think about this issue while working on this paper, and we hope that such a systemic outlook will be one of our next steps.

References

- Allen, S. & Gutwill, J. P. (2015). Exploring models of research-practice partnership within a single institution: two kinds of jointly negotiated research. In D. M. Sobel & J. L. Jipson (Eds.), Cognitive development in museum settings (pp. 190–208). Routledge.
- Amodio, L. (2012). Science communication at glance. In Science centres and science events (pp. 27–46). doi:10.1007/978-88-470-2556-1_4
- Archer, L., Dawson, E., DeWitt, J., Seakins, A. & Wong, B. (2015). “Science capital”: a conceptual, methodological and empirical argument for extending Bourdieusian notions of capital beyond the arts. Journal of Research in Science Teaching 52(7), 922–948. doi:10.1002/tea.21227
- Archer, L., DeWitt, J., Osborne, J. F., Dillon, J. S., Wong, B. & Willis, B. (2013). ASPIRES report: young people’s science and career aspirations, age 10–14. London, U.K.: King’s College. Retrieved from https://kclpure.kcl.ac.uk/portal/en/publications/aspires-report-young-peoples-science-and-career-aspirations-age-1
- Archer, L., Moote, J., MacLeod, E., Francis, B. & DeWitt, J. (2020). ASPIRES 2: young people’s science and career aspirations, age 10–19. London, U.K.: UCL Institute of Education. Retrieved from https://discovery.ucl.ac.uk/id/eprint/10092041
- Ben Gammon Consulting (2012a). Evaluation of the Globe exhibition at CERN. Geneva, Switzerland: CERN.
- Ben Gammon Consulting (2012b). Evaluation of the Microcosm exhibition at CERN. Geneva, Switzerland: CERN.
- Bequette, M., Cardiel, C. L. B., Cohn, S., Kollmann, E. K. & Lawrenz, F. (2019). Evaluation capacity building for informal STEM education: working for success across the field. New Directions for Evaluation 2019(161), 107–123. doi:10.1002/ev.20351
- CERN (1956). The public relations service. Retrieved from https://cds.cern.ch/record/28583
- CERN (2021). CERN communications strategy 2021–2025. Retrieved from https://international-relations.web.cern.ch/eco/strategy
- CERN (2023a). CERN Science Gateway: The Vision. Retrieved August 31, 2023, from https://sciencegateway.cern/science-gateway
- CERN (2023b). CERN: Our Member States. Retrieved December 5, 2023, from https://home.cern/about/who-we-are/our-governance/member-states
- Chennaz, L., Isler, A., Mascle, C., Hessels, M., Gentaz, E. & Valente, D. (2023). Participatory design to improve comprehension and aesthetic access to artistic and scientific content for people with visual impairment.
- Corbetta, P. (2003). Social research: theory, methods and techniques. doi:10.4135/9781849209922
- Davies, S. R. & Horst, M. (2016). Science communication: culture, identity and citizenship. doi:10.1057/978-1-137-50366-4
- Dawson, E. (2014a). “Not Designed for Us”: how science museums and science centers socially exclude low-income, minority ethnic groups. Science Education 98(6), 981–1008. doi:10.1002/sce.21133
- Dawson, E. (2014b). Reframing social exclusion from science communication: moving away from ‘barriers’ towards a more complex perspective. JCOM 13(02), C02. doi:10.22323/2.13020302
- Dawson, E. (2018). Reimagining publics and (non) participation: exploring exclusion from science communication through the experiences of low-income, minority ethnic groups. Public Understanding of Science 27(7), 772–786. doi:10.1177/0963662517750072
- Dawson, E. (2019). Equity, exclusion and everyday science learning: the experiences of minoritised groups. doi:10.4324/9781315266763
- DeWitt, J., Archer, L. & Mau, A. (2016). Dimensions of science capital: exploring its potential for understanding students’ science participation. International Journal of Science Education 38(16), 2431–2449. doi:10.1080/09500693.2016.1248520
- Dvorzhitskaia, D. (2020). Quantum world: evaluation results (part 2). In Science Gateway exhibitions kick-off meeting, Lot 3. Geneva, Switzerland: CERN.
- Dvorzhitskaia, D. (2021). Exploring the relationship of the public with CERN Science Gateway exhibitions (MRes, Queen Margaret University, Musselburgh, U.K.).
- Dvorzhitskaia, D. & Verheyden, P. (2023). Interactive exhibits: theory and practice. CERN Courier 63(6), 31–32. Retrieved from https://cerncourier.com/a/interactive-exhibits-theory-and-practice/
- Dvorzhitskaia, D. & Zamora, A. (2020). Quantum world: discussion of evaluation results.
- Gilbert, J. K. (2001). Towards a unified model of education and entertainment in science and technology centres. In Science communication in theory and practice (pp. 123–142). doi:10.1007/978-94-010-0620-0_8
- Grack Nelson, A. & Cohn, S. (2015). Data collection methods for evaluating museum programs and exhibitions. Journal of Museum Education 40(1), 27–36. doi:10.1080/10598650.2015.11510830
- Grand, A. & Sardo, A. M. (2017). What works in the field? Evaluating informal science events. Frontiers in Communication 2, 22. doi:10.3389/fcomm.2017.00022
- Layder, D. (2013). Doing excellent small-scale research. doi:10.4135/9781473913936
- Leach, J. (2022). Commentary: rethinking iteratively (from Australia). JCOM 21(04), C02. doi:10.22323/2.21040302
- Molins Pitarch, C. (2023). Carla Molins Pitarch website. Retrieved August 31, 2023, from https://carlamolins.com
- Moote, J., Archer, L., DeWitt, J. & MacLeod, E. (2020). Science capital or STEM capital? Exploring relationships between science capital and technology, engineering, and maths aspirations and attitudes among young people aged 17/18. Journal of Research in Science Teaching 57(8), 1228–1249. doi:10.1002/tea.21628
- Moote, J., Archer, L., DeWitt, J. & MacLeod, E. (2021). Who has high science capital? An exploration of emerging patterns of science capital among students aged 17/18 in England. Research Papers in Education, 1–21. doi:10.1080/02671522.2019.1678062
- Patton, M. Q. (2002). Qualitative research and evaluation methods (3rd ed.). Sage Publications.
- Pellegrini, G. (2021). Evaluating science communication: concepts and tools for realistic assessment. In B. Trench & M. Bucchi (Eds.), Routledge handbook of public communication of science and technology (3rd ed., pp. 305–319). doi:10.4324/9781003039242
- Peterman, K., Garlick, S., Besley, J., Allen, S., Fallon Lambert, K., Nadkarni, N. M., … Wong, J. (2021). Boundary spanners and thinking partners: adapting and expanding the research-practice partnership literature for public engagement with science (PES). JCOM 20(07), N01. doi:10.22323/2.20070801
- Rennie, L. J. (2001). Communicating science through interactive science centres: a research perspective. In Science communication in theory and practice (pp. 107–121). doi:10.1007/978-94-010-0620-0_7
- Sanders, E. B.-N. (2002). From user-centered to participatory design approaches. In J. Frascara (Ed.), Design and the social sciences (pp. 1–8). doi:10.1201/9780203301302-8
- Sanders, E. (2023). Empowering children to aspire to science. CERN Courier 63(6), 49. Retrieved from https://cerncourier.com/a/empowering-children-to-aspire-to-science/
- Sawyer, K., Church, M. & Borchelt, R. (2021). Basic research needs for communicating basic science. doi:10.2172/1836074
- Schiele, B. (2021). Le musée dans la société. Les Dossiers de l’OCIM. Ocim.
- Schwetje, T., Hauser, C., Böschen, S. & Leßmöllmann, A. (2020). Communicating science in higher education and research institutions: an organization communication perspective on science communication. Journal of Communication Management 24(3), 189–205. doi:10.1108/jcom-06-2019-0094
- Wanzer, D. L. (2020). What is evaluation? Perspectives of how evaluation differs (or not) from research. American Journal of Evaluation 42(1), 28–46. doi:10.1177/1098214020920710
- Zamora, A. (2020a). From primary schools to CERN Science Gateway: front-end evaluation of primary schools’ visits. CERN.
- Zamora, A. (2020b). From primary schools to CERN Science Gateway: small-scale research exploring teachers’ experiences in Geneva and Pays de Gex.
- Ziegler, R., Hedder, I. R. & Fischer, L. (2021). Evaluation of science communication: current practices, challenges and future implications. Frontiers in Communication 6, 669744. doi:10.3389/fcomm.2021.669744

Authors

Daria Dvorzhitskaia identifies as a science communication researcher. She has a BSc degree in Science Communication and an MRes in Social Sciences. She is currently doing a PhD at the Institute for Communication and Media Research (IKMZ) at the University of Zürich, exploring public engagement at large scientific institutions. She is deeply curious about CERN’s communication, which she has been researching since 2017.

E-mail: daria.dvorzhitskaia@gmail.com

Annabella Zamora holds a BA and an MA in Sociology from the University of Geneva. She assisted with exhibition research and development in CERN’s exhibitions team (2019–2022). She is currently pursuing a PhD at the Sciences and Technologies Studies Laboratory (STSlab) at the University of Lausanne, delving into STS scholarship and the sociology of innovation, with a focus on case studies of particle accelerators.

E-mail: annabella.zamora@unil.ch

Emma Sanders is a science communicator with over 30 years’ experience in exhibitions, education, editorial work, audience research and events. Emma is currently head of exhibitions at CERN and leads the development of the new exhibitions for Science Gateway. She has also set up the laboratory’s heritage conservation programme and is a passionate advocate for diversity. She is a member of advisory boards for museums in both France and Switzerland.

E-mail: emma.sanders@cern.ch

Patricia Verheyden has a PhD in Sciences (Free University of Brussels). She was involved in setting up the first science centre in Flanders (Belgium), where she was responsible for exhibitions, education and edutainment. In 2019 she joined CERN’s exhibition team.

E-mail: patricia.verheyden@cern.ch

Jimmy Clerc studied physics for a year (University of Geneva), then moved on to sociology, obtaining his master’s degree in 2022 (University of Geneva). Since 2022, he has been involved in the Science Gateway project as a member of CERN’s exhibition team, evaluating the hands-on exhibits, helping to edit the content, and coordinating and installing the exhibition spaces.

E-mail: jimmy.clerc@cern.ch