1 Introduction

As the 2017 National Academies of Sciences, Engineering, and Medicine (NASEM) report on Communicating Science Effectively emphasized, science communication research and practice have been advancing and gaining attention and energy over the past decade, but several large gaps remain that require greater focus. In particular, the NASEM committee highlighted partnerships between researchers and practitioners as a necessary and promising area for furthering science communication. Such partnerships, the report noted, would not only help bring research into on-the-ground science communication settings, such as museums, science festivals, and public forums, but would also benefit science communication research by enabling collaborators to co-develop research agendas that test “realistic and pragmatic hypotheses” for science communication best practices [National Academies of Sciences, Engineering, and Medicine, 2017 ]. A NASEM report on communicating chemistry, in particular, made a similar recommendation, calling for researchers in science communication and informal education to collaborate on projects to improve chemistry communication in informal settings [National Academies of Sciences, Engineering, and Medicine, 2016 ].

To advance work in these areas, the Museum of Science, Boston collaborated with communication researchers and practitioners from science communication departments and organizations across the U.S. in a multi-year project to deepen our understanding of how to communicate about chemistry with the public. The Museum of Science, Boston (MOS), Science Museum of Minnesota (SMM), Arizona State University, Sciencenter, National Informal STEM Education Network (NISE Net), and American Chemical Society (ACS) partnered to seek funding from the National Science Foundation (NSF) to create educational products for chemistry engagement in informal science education settings, particularly museums. We also wanted to understand whether and how our findings from the museum floor would apply in larger communication settings with broader publics. Thus, researchers from the field of informal science education collaborated with researchers in the academic field of science communication from the University of Wisconsin-Madison (UWM) to better understand which strategies can support people’s interest, relevance, and self-efficacy in chemistry across different communication contexts. This paper synthesizes insights we gained from that close collaboration, both between researchers and practitioners and between the museum and university research teams, which occurred as part of the NSF-funded project ChemAttitudes: Using Design-Based Research to Develop and Disseminate Strategies and Materials to Support Chemistry Interest, Relevance, and Self-Efficacy (NSF DRL-1612482). This project broadly distributed materials and public-facing resources under the name Let’s Do Chemistry, which is the name used throughout this article.

As more funders and philanthropic organizations encourage collaborative work and partnerships that explore connections between practice and research, this practice insight is timely for science communication research. Although many characteristics of the Let’s Do Chemistry project may be unique, its broad lessons are applicable to other projects, especially those looking to advance these kinds of partnerships in science communication research and practice. We hope that future research collaborations focused on communication and educational engagement around important scientific and societal topics will benefit from hearing about the challenges, successes, and lessons learned through this project.

2 Advancing research and practice in chemistry communication through complementary strands of work

Chemistry is typically under-represented in informal science education environments in the U.S., such as science museums and centers, despite its relevance to a wide range of research, applications, and societal issues [Zare, 1996 ; Silberman, Trautmann and Merkel, 2004 ]. Chemistry also tends to receive less public focus or interest compared to other broad, related areas of science, such as biology and physics [Zare, 1996 ; National Science Board, 2002 ; Silberman, Trautmann and Merkel, 2004 ; National Research Council, 2011 ; Grunwald Associates and Education Development Center, 2013 ; National Academies of Sciences, Engineering, and Medicine, 2016 ]. To help address these needs, the Let’s Do Chemistry project focused on creating facilitated, hands-on activities that would support publics’ interest in, sense of relevance of, and feelings of self-efficacy towards chemistry. Beyond this goal, however, we also aimed to build knowledge for the science communication and informal science education fields about specific content, format, and facilitation strategies that could advance additional development of hands-on activities and other types of chemistry communication and engagement.

To benefit from the distinct expertise of each partner, the Let’s Do Chemistry project was designed with two strands of data collection: one that took place primarily on the museum floor, led by the museum team, and one that extended the museum-floor findings to larger publics and a wider set of outcomes through online experiments, led by the university team. Because these strands were conducted by different teams, they received separate IRB approvals. The Museum of Science Institutional Review Board (IRB #00005416) approved the museum study under protocol #2016.08. The Education and Social/Behavioral Sciences Institutional Review Board at the University of Wisconsin-Madison (IRB #00000368) approved the university studies under protocol #2017-1032.

The museum team involved educators and researchers from MOS and SMM. Their strand of data collection employed a design-based research (DBR) process [Collins, Joseph and Bielaczyc, 2004 ] to iteratively test and refine facilitated, hands-on activities as well as an accompanying theoretical framework. Prior work and informal education literature were used to generate an initial theoretical framework, which hypothesized the specific content and format strategies that the activities should include to increase museum visitors’ interest in, perceptions of the relevance of, and feelings of self-efficacy towards chemistry. Educators created hands-on activities that included these design strategies, and the museum research team then studied the activities to understand whether they led to improved chemistry attitudes and to look for additional strategies that had not been hypothesized. For this research, observations, video recordings, and interviews were collected from museum groups and their activity facilitators. Analysis related to the theoretical framework focused on the 274 visitor interviews. The interview asked museum visitors, “Compared to when you walked into the museum today:

- How interested are you in chemistry after this activity?

- How relevant do you feel chemistry is to your life?

- How confident are you in:

  - Your understanding of the chemistry concepts in this activity?

  - Talking to others about the chemistry concepts in that activity?

  - Your ability to do a similar activity on your own?”

Visitors responded to these questions on a five-point scale ranging from “much LESS [interested/relevant/confident]” to “a lot MORE [interested/relevant/confident]”, with a neutral option to say they experienced “no change”. Visitors were then asked during the interview, “What about the activity made you feel this way?” Responses to these questions were coded to help museum researchers uncover the design strategies that contributed to improved attitudes about chemistry. These data were also used to refine the hands-on activities. The final activities were shared as part of the Explore Science: Let’s Do Chemistry kit, which was sent to 250 museums and other informal education institutions across the U.S. More information about the activities can be found on the project website: https://www.nisenet.org/chemistry-kit.
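One simple way to summarize such ratings, offered here only as an illustrative sketch, is to code the five options as integers and report the mean change along with the share of visitors reporting any increase. The interview protocol defines only the two endpoints and the neutral “no change” option; the intermediate labels, the -2 to +2 coding, the function names, and the sample responses below are assumptions, not the museum team’s actual coding scheme or data.

```python
# Hypothetical numeric coding for the five-point change scale. The protocol
# specifies only the endpoints and "no change"; the two intermediate labels
# are assumed for illustration.
SCALE = {
    "much less": -2,
    "somewhat less": -1,  # assumed label
    "no change": 0,
    "somewhat more": 1,   # assumed label
    "a lot more": 2,
}


def code_response(label: str) -> int:
    """Map a verbal rating (case-insensitive, e.g. "much LESS") to an integer from -2 to +2."""
    return SCALE[label.strip().lower()]


def summarize(labels: list[str]) -> dict[str, float]:
    """Return the mean coded change and the share of respondents reporting any increase."""
    scores = [code_response(label) for label in labels]
    increases = sum(1 for score in scores if score > 0)
    return {
        "mean_change": sum(scores) / len(scores),
        "share_increase": increases / len(scores),
    }


# Made-up responses to the interest question, for illustration only.
example = ["a lot more", "somewhat more", "no change", "a lot more"]
print(summarize(example))  # {'mean_change': 1.25, 'share_increase': 0.75}
```

A numeric summary like this shows only how much attitudes shifted; the open-ended answers to “What about the activity made you feel this way?” still require qualitative coding to explain why.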

The other data collection strand was led by the university team, made up of researchers from UWM. Using online survey-embedded experiments, the university team collected data beyond the museum floor to build on and try to replicate the museum findings. The experiments tested which features of chemistry content were most successful in increasing interest in, a sense of relevance of, and feelings of self-efficacy related to chemistry among a broader U.S. public. As described in greater detail below, these experiments also focused on understanding how to capture a full picture of what interest, relevance, and self-efficacy in chemistry look like. These efforts were undertaken to complement the museum research and to generate findings about how insights from the DBR could apply to the larger U.S. population and to different facets of interest, relevance, and self-efficacy research.

The university team generated research questions and hypotheses based on the museum team’s findings about which activity content features seemed important in increasing interest, relevance, and self-efficacy. These three content areas highlighted chemistry connections to: everyday life and applications, societal issues, and other science, technology, engineering, and math (STEM) fields. We hypothesized that participants randomly assigned to conditions containing any of these content features would report higher interest, relevance, and self-efficacy than the “control” group, which received information only on a fourth content area that focused solely on conveying a chemistry concept without the other three content features. The rest of the study then explored the overarching research question of how these different content features distinctly affect interest, relevance, and self-efficacy in chemistry when 1) directed at this broader audience beyond museum-goers and 2) conveyed through simple text rather than through the interactive learning settings of the chemistry activities on the museum floor.

The university team ran two experiments to bridge from the museum team’s findings to broader generalizations about the impact of chemistry information. For one of the experiments, the two teams designed the content together, essentially translating some of the museum floor activities into short overview paragraphs about the main topics in those activities, with each description coded for one of the four content areas being tested. For the second experiment, the university team adapted their content findings to both chemistry and broader STEM contexts. This design was chosen to test more systematically the impact of specific types of content in the information that participants received: it kept as much of the wording and material as possible constant across experimental groups and altered only the particular chemistry or STEM content areas of focus. The second experiment also varied whether participants received the information in the context of applications related to food or to health, as a first step toward controlling for differences in the application area or broader topic involved.
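The logic of the second experiment, holding wording constant while varying content area and application context, can be sketched as a randomized factorial assignment. This is a minimal illustration that assumes a full crossing of the four content areas with the two application contexts (food and health) and equal-probability assignment; it omits the chemistry-versus-STEM variation, and the condition labels, the function name `assign_conditions`, and the seed are hypothetical, since the article does not specify the exact cells or randomization procedure.

```python
import itertools
import random

# The four content areas described in the article; "concept only" is the control.
CONTENT_AREAS = [
    "everyday life and applications",
    "societal issues",
    "other STEM fields",
    "concept only (control)",
]
# Application contexts varied in the second experiment.
CONTEXTS = ["food", "health"]

# Assumed full crossing: 4 content areas x 2 contexts = 8 conditions.
CONDITIONS = list(itertools.product(CONTENT_AREAS, CONTEXTS))


def assign_conditions(participant_ids, seed=None):
    """Randomly assign each participant to one condition with equal probability (hypothetical helper)."""
    rng = random.Random(seed)
    return {pid: rng.choice(CONDITIONS) for pid in participant_ids}


assignments = assign_conditions(range(10), seed=42)
for pid, (content, context) in assignments.items():
    print(pid, content, context)
```

Simple random assignment like this can leave cell sizes unequal in small samples; shuffling a list that repeats each condition equally (block randomization) is a common alternative when balanced groups matter.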

3 Situating the two research teams

The two research teams for this project took complementary insider and outsider roles with respect to informal science education and museum work, an arrangement that strengthened the Let’s Do Chemistry project (as recommended to academic researchers in Feinstein and Meshoulam [ 2014 ]). The museum team brought deep knowledge of museum practice, existing relationships with the activity developers creating the hands-on products for the project, and knowledge of the museum research literature. This was particularly helpful because, while literature from this field is available in some peer-reviewed venues, there is also an extensive body of gray literature connected with informal science education and museum spaces, such as that found at https://www.informalscience.org/. As internal museum staff, the museum researchers were also aware of the field’s general expectation for immediately usable information and could serve as a bridge between the university researchers and the informal education practitioners involved in the project.

The university research team, on the other hand, brought new perspectives from the field of science communication, expertise in designing and running surveys of U.S. publics, and a willingness to pursue questions that could be thought-provoking and relevant for the museum world and expanded upon there. The university team, for example, often situated the museum work in a broader communication context. They focused on questions such as who goes to museums compared with the broader public, why communicating chemistry matters at a societal level, and what these answers mean for how we capture concepts like interest, relevance, and self-efficacy as they relate to chemistry. Each team brought familiarity with the data-gathering methods typically used in its setting. For the university team, this meant large-scale online surveys, while for the museum team, it entailed interacting with visitors at their sites and collecting data through in-person interviews, interactions, and videos of people engaging with the tested activities.

4 Recognizing and collaborating across different contexts

The two strands of data collection varied in fundamental ways, largely because of the research teams’ different contexts and approaches. These differences, expanded upon in this section, are instructive and also reflect one of the strengths of this research-practice partnership. By having two strands of research in different settings, the Let’s Do Chemistry project was able to gain a richer understanding of people’s attitudes towards chemistry.

4.1 Differences in data collection

We hoped that even if the two teams could not share measures at the beginning of the project, they might be able to do so later, after the university team had collected some preliminary data. Differences in research contexts, however, meant that finding sets of existing measures applicable in both the museum and online settings was impractical. For instance, the museum data collection needed to gather feedback to improve hands-on chemistry activities for children and adults, while the university data collection focused on understanding how U.S. adults feel about chemistry. Thus, the age-appropriate wording needed to gather information about the project’s three attitudes differed between the museum study and the university studies. Differing timelines for the two pieces of work also became a challenge. The museum team had to move quickly to develop instruments and gather data, because the production team needed the activities finalized within a tight timeframe so that they could create the Let’s Do Chemistry kit and distribute it to partners in time for National Chemistry Week. It was therefore not possible for the museum team to delay its data collection until the university team had completed its study and created survey items and constructs for chemistry interest, relevance, and self-efficacy.

Additionally, differences in participants’ time constraints affected how each research team captured data. For the museum team, the interview instruments could not be longer than a few minutes because museum visitors were being recruited off the floor. Visitors were expected to use the chemistry activity with an educator for as long as they wanted and then participate in the data collection about that experience. Because a typical science museum visit lasts about two hours, the entire interaction ideally needed to take less than 30 minutes of the visit (about 15 minutes for the activity and 15 minutes for the interview). The university team, on the other hand, used an online panel survey in which participants were paid a small amount for their participation. This meant that expectations about the length of the data collection experience differed, and that the university team could ask more questions than could be included in the museum team’s instrument.

Beyond these differences, the distinct purposes of each data collection effort also made it difficult to share measures. The overarching focus of the museum team’s study was to understand visitor perceptions of which facilitation and design aspects (i.e., content, format) of the chemistry activities led to increases in interest, relevance, and self-efficacy. These findings would help refine the kits and inform future activity development. It was therefore most important to collect qualitative data to better understand the specific content, design, and facilitation aspects of the activity experience that led to these outcomes. The university team, on the other hand, needed an in-depth understanding of U.S. adults’ reactions to the topic of chemistry and their feelings of interest, relevance, and self-efficacy in general, rather than in relation to a particular chemistry activity. Ultimately, because of these competing factors, the two data collection efforts used different question sets, and the teams did not co-develop a shared set of measures for both strands.

4.2 Finding common ground and common measures

Although the two teams could not find a set of existing measures or data collection methods usable in both situations, the differences in how each team phrased its items offered a useful comparison. The university team was able to examine chemistry interest, relevance, and self-efficacy in more nuance by creating and testing large batteries of items. Building on the museum team’s interview instrument, the university team included some of the museum’s interview questions, placing one museum item in each of its batteries of six to eight items capturing interest, personal relevance, and self-efficacy. Because the university team could ask a larger set of items, its results were able to indicate which items best captured the project’s three attitudes. The teams have since discussed how the items the university team found especially strong for capturing chemistry interest, relevance, and self-efficacy may be useful to the museum in future projects.

For the university team, it was also important to gather information that was generalizable to other chemistry communication contexts. This focus meant that the university team needed a detailed sense of people’s attitudes towards chemistry, which was best captured through quantitative data. Additionally, the university team ran experiments through online surveys to expose people to different chemistry information and test how the content affected people’s interest, relevance, and self-efficacy in the topic. Because of the online format, this content was conveyed largely through text and visuals rather than through the hands-on activities used on the museum floor. This departure from the museum team’s data collection, however, ended up complementing and reinforcing the findings across the two efforts: results from the online experiments confirmed some of the museum-floor results about which content most effectively increased interest, relevance, and self-efficacy across different participants. Altogether, these differences in data collection methods and research questions were among the greatest benefits of our collaborative approach.

5 Building on the results of each other’s work

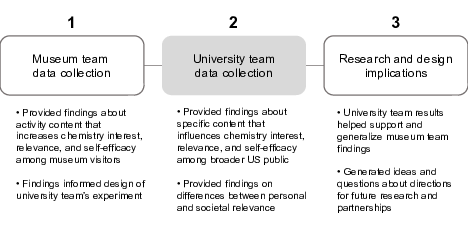

A critical aspect of the collaboration was regularly updating each other about findings, through formal monthly virtual meetings and occasional in-person meetings, along with frequent informal discussions throughout the research design and analyses. Perhaps the best example of exchange between the two teams was when the museum team shared findings about which types of content and format supported interest, relevance, and self-efficacy in chemistry. The university team was able to use these data to craft a study design for its work. The university team’s results in turn informed the museum team’s understanding of the connections among chemistry interest, relevance, and self-efficacy in a larger population. See Figure 1 for a visual depiction of this evolution of understanding based on the two data collection efforts.

The museum team found that the content strategies that appeared effective at increasing museum groups’ interest, relevance, and self-efficacy in chemistry were those that made connections to everyday life and applications, societal issues, or other STEM fields. As described above, using these findings (step 1, as shown in Figure 1), the university team designed vignettes capturing these different content areas, drawing on topics incorporated in some of the hands-on activities, and randomly assigned survey respondents to one of these vignettes.

Based on differences in responses to the batteries of interest, relevance, and self-efficacy items across experimental conditions (step 2, as shown in Figure 1), the university team found that content connecting chemistry to everyday applications seemed to be most effective at increasing interest, relevance, and self-efficacy. This effect of everyday application information appeared both among participants who received information designed to mirror the museum team’s cooking activity, which was then being tested on the museum side, and among participants in the second experiment’s conditions, which extended the content features into new topic areas such as everyday chemistry applications for health or nutrition.
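The comparison behind this finding can be illustrated with a small descriptive sketch: each participant’s battery score is taken as the mean of their item ratings, and each condition’s average score is compared with the control group’s. The article does not describe the statistical models the university team used, so the scoring rule, the 1 to 7 rating range, the condition labels, the function names, and all numbers below are hypothetical and are not study data; a full analysis would typically add inferential tests rather than rely on raw differences.

```python
from statistics import mean

CONTROL = "concept only (control)"  # hypothetical label for the control condition


def battery_score(item_ratings: list[int]) -> float:
    """Score one participant on one battery (e.g., interest) as the mean of its items."""
    return mean(item_ratings)


def differences_from_control(scores_by_condition: dict[str, list[float]]) -> dict[str, float]:
    """Return each condition's mean battery score minus the control group's mean score."""
    control_mean = mean(scores_by_condition[CONTROL])
    return {
        condition: mean(scores) - control_mean
        for condition, scores in scores_by_condition.items()
        if condition != CONTROL
    }


# Made-up 1-7 ratings on a six-item interest battery (two participants per condition).
ratings = {
    "everyday life and applications": [[6, 5, 6, 7, 5, 6], [5, 5, 6, 6, 5, 5]],
    "societal issues": [[5, 4, 5, 5, 4, 5], [4, 5, 5, 4, 4, 5]],
    CONTROL: [[4, 4, 5, 4, 3, 4], [4, 3, 4, 4, 4, 4]],
}
scores = {condition: [battery_score(p) for p in people] for condition, people in ratings.items()}
print(differences_from_control(scores))
```

Because the project measured interest, personal relevance, and self-efficacy with separate batteries, the same comparison would be repeated for each battery.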

Because of product development needs and time constraints, the museum research team could assess the impact of content features only on museum visitors’ general perceptions of chemistry relevance. The university team, however, was able to include more measures on its surveys, allowing it to examine relevance in a more nuanced way. The university team’s findings show that when it came to the content’s impact on the general public’s perceptions of chemistry relevance, it was personal relevance (e.g., chemistry is important to me), rather than societal relevance (e.g., chemistry helps accomplish societal goals), that content features largely affected. Similarly, the data showed that personal relevance corresponded to changes in interest and self-efficacy much more than societal relevance did. Societal relevance, on the other hand, did not seem to be affected by the information people received, and average perceptions of chemistry’s societal relevance were consistently high regardless. The results offered support for the museum team’s findings (step 3, as shown in Figure 1) about which chemistry content appeared to affect interest, relevance, and self-efficacy, and extended those findings by showing that, in a setting with much less interactivity than the museum floor, everyday applications were consistently the most effective type of content. Findings from the university team also gave the museum team insight into the range of positive views of chemistry that people can hold, and what that range might mean for capturing the impact of different communication approaches. For example, a group of people who do not see chemistry as personally relevant may still recognize, rather than dismiss, the broader relevance of the field and its applications.

For the university team, it was helpful to see how the museum team was defining and presenting interest, relevance, and self-efficacy to museum visitors. These approaches informed how the university team operationalized measures and thought about how its results could inform on-the-ground practice in informal chemistry learning. For example, the university team was interested in capturing a broader perspective on interest, relevance, and self-efficacy as they related to both chemistry and other science fields. At the same time, it was able to incorporate insights and needs from the museum team to test whether the museum items aligned with these broader conceptualizations. The university team could then see which items might be most effective for capturing these concepts in different settings and under different instrument space constraints. The university team also benefited from the museum research findings on content and format, which informed the design of its experiment: the team created content aligned with the categories the museum team found effective in increasing interest, relevance, and self-efficacy, such as content focused on connections to everyday life and applications.

Beyond the ways specific data collection efforts and results complemented each other, the diverse perspectives across the project had broader advantages for the success of the collaboration and for building future projects. One such benefit was that the museum team could play a brokering role between the university team and activity developers: supporting access to internal project resources, at times translating differences in jargon, and encouraging immediate use of information learned through the university team’s research. Another benefit was that the university team could provide national context for the findings gathered from museum visitors. Moreover, the work done by both research teams advanced knowledge about chemistry in the informal science education field, where it had previously received little attention, provided some validation of each other’s results, and extended their generalizability to new communication contexts. Overall, each team brought its unique expertise and methodological approaches, and each benefited from thinking about how the two data collection efforts could inform each other.

6 Take-aways for building future research-practice partnerships

The two research teams brought a productive mix of backgrounds, experience, and expertise. This experience led to lessons learned that others trying to collaborate through research-practice partnerships may want to consider.

First, it is important to recognize cultural differences across research professionals, contexts, and settings and to find ways to bridge and build on those differences. For the Let’s Do Chemistry project, a key strength was the inclusion of museum practitioners, university researchers, and museum researchers. In some ways, the museum researchers could act as a bridge between the other two groups because they understood the language and practices of broader research as well as the unique language and practices of museums. This was not explicitly planned, but it ended up being vital for communicating and coordinating research and practice strands throughout the project. Other research-practice partnerships should take time to understand cultural differences and look for cultural brokers, or boundary spanners [Wenger, 1998 ], so that they can have a productive partnership.

Differences in working style also mean that partners need to be flexible and to budget more time than might seem necessary for discussing and going back and forth on research questions and analyses. Originally, the museum and university research teams wanted to work in a more cyclical manner. However, the museum educators’ timeline meant that the museum research team needed to speed up its process. The two teams found that they could still learn from and build on each other’s work, even with fewer cycles than originally planned. The accelerated timeframe also gave the university team, especially the graduate students on the project, helpful experience with the challenges of working faster than is common in academic research, a pace that is often expected in on-the-ground engagement work. Other projects should build flexibility into their studies to allow for changes that arise as teams figure out how to work with one another.

Further, differences in context will likely require different methods, which offers an opportunity to build on and validate each other’s results by extending similar ideas to new approaches and settings. This requires strategic planning during design to ensure enough conceptual and operational overlap to draw connections between results. Originally, the university and museum research teams had hoped to use the same survey items in both strands. However, because the museums were working with people across a wider age range and the university team needed more nuanced understandings of interest, relevance, and self-efficacy, the teams decided to employ different methods. When working in informal education environments, it is important to understand that participants’ ages often span a much broader range than in university studies. Additionally, informal education studies often need quicker protocols because they cannot ask as much time of participants. Researchers may therefore need to use methods that are new to them and collectively identify and develop ways to connect across these different methods.

The project’s results on activity content and format strategies, and on how these may affect attitudes towards chemistry, illustrate how such a partnership can identify and develop science communication practices. Bringing together the diverse foci of both data collection strands yielded science communication research knowledge that may apply across a variety of settings, activity types and formats, topics, and, most importantly, audiences.

7 Conclusion

Other research-practice teams may benefit from similar collaborations whose strands are overlapping and complementary but not directly comparable. Regular communication about the methods and measures being used meant that the museum and university teams were able to share ideas across the project and develop measures that could be useful for future work. Findings shared between the research teams enriched their interpretations and sparked new questions.

Some of these new questions quickly developed into new research projects and collaborations related to understanding and enhancing public engagement with chemistry. One such project was conducted by members of the university team in collaboration with the American Chemical Society (ACS). This project examined the audience makeup of online science videos and factors that could explain variations in viewer engagement with those videos. With the declining influence of legacy media in science communication, there have been growing calls for the scientific community to use digital media to communicate directly with non-expert publics. YouTube is one of the most popular and promising tools for such science outreach. The ACS’s official YouTube channel, “Reactions”, provided a unique and comprehensive data set on which the university team conducted subsequent analyses [Yang, Scheufele and Brossard, 2019 ].

Through the Let’s Do Chemistry project and the offshoots that developed from these collaborations, our partnership provided insights into a needed area of research on how to facilitate chemistry communication and informal learning, as the recent NASEM reports called for. Our partnership allowed us to do so in a way that was grounded both in theory and rigorous methodology and in the realities of on-the-ground communication in museums and other informal learning settings. Because we saw similar patterns of results across our different data collections, we were able to capture a more complete view of what types of information and experiences can increase people’s interest in, perceived relevance of, and feelings of self-efficacy related to chemistry.

More importantly, we were also able to inform each other's broader practices and insights. The university researchers learned ways to connect their work to on-the-ground projects and to address the challenges of translating broad theoretical ideas into adaptable practices. The museum team saw some of the ways that their work connects to broader communication goals and practices, and how they could capture different outcomes related to chemistry engagement. The experience also generated new research questions that shaped each team's research program and led to further collaborations, such as the YouTube project described above. Altogether, the collaboration shaped both the insights we were able to gain in this project and our broader perspectives on how our work and future partnerships can contribute to the understanding of science communication research and practice.

Acknowledgments

Because this project was a collaboration between the National Informal STEM Education Network, the American Chemical Society, and the Museum of Science, Boston, many people contributed to it. The authors would particularly like to thank the project PIs (Larry Bell, Mary Kirchhoff, Rae Ostman, and David Sittenfeld), activity developers (Thor Carlson, Angela Damery, Susan Heilman, Emily Hostetler, K.C. Miller, Jill Neblett, and Becky Smick), and former members of the research team (Nikki Lewis, Sarah Pfeifle, and Kaleen Tison Povis).

This material is based upon work supported by the National Science Foundation under Grant No. DRL-1612482.

References

Collins, A., Joseph, D. and Bielaczyc, K. (2004). ‘Design research: theoretical and methodological issues’. Journal of the Learning Sciences 13 (1), pp. 15–42. https://doi.org/10.1207/s15327809jls1301_2 .

Feinstein, N. W. and Meshoulam, D. (2014). ‘Science for what public? Addressing equity in American science museums and science centers’. Journal of Research in Science Teaching 51 (3), pp. 368–394. https://doi.org/10.1002/tea.21130 .

Grunwald Associates and Education Development Center (2013). Communicating chemistry landscape study. Prepared for the National Research Council Board on Chemical Sciences and Technology (BCST) and Board on Science Education (BOSE). URL: https://www.nap.edu/resource/21790/Chem_Comm_Landscape_Study.pdf .

National Academies of Sciences, Engineering, and Medicine (2016). Effective chemistry communication in informal environments. Washington, DC, U.S.A.: The National Academies Press. https://doi.org/10.17226/21790 .

— (2017). Communicating science effectively: a research agenda. Washington, DC, U.S.A.: The National Academies Press. https://doi.org/10.17226/23674 .

National Research Council (2011). Chemistry in primetime and online: communicating chemistry in informal environments. Workshop summary. Washington, DC, U.S.A.: The National Academies Press. https://doi.org/10.17226/13106 .

National Science Board (2002). Science and engineering indicators 2002. Arlington, VA, U.S.A.: National Science Foundation.

Silberman, R. G., Trautmann, C. and Merkel, S. M. (2004). ‘Chemistry at a science museum’. Journal of Chemical Education 81 (1), pp. 51–53. https://doi.org/10.1021/ed081p51 .

Wenger, E. (1998). Communities of practice: learning, meaning, and identity. New York, NY, U.S.A.: Cambridge University Press. https://doi.org/10.1017/cbo9780511803932 .

Yang, S., Scheufele, D. A. and Brossard, D. (2019). ‘Communicating chemistry on YouTube: science videos as outreach tools’. In: American Association for the Advancement of Science Annual Meeting (Washington, DC, U.S.A. 14th–17th February 2019).

Zare, R. N. (1996). 'Where's the chemistry in science museums?' Journal of Chemical Education 73 (9), pp. A198–A199. https://doi.org/10.1021/ed073pA198 .

Authors

Elizabeth Kunz Kollmann (she/her/hers) is the Director of Research and Evaluation at the Museum of Science, Boston. She has worked in the museum field for over fifteen years, focusing her research on science communication, public engagement with science, and learning in informal science environments. Ms. Kollmann was a co-PI of the ChemAttitudes grant, leading the museum research team as it studied how design and educator facilitation lead to increases in participant interest, relevance, and self-efficacy towards chemistry. E-mail: ekollmann@mos.org .

Marta Beyer (she/her/hers) is a Research & Evaluation Associate at the Museum of Science, Boston. She has worked in the museum field for over ten years, contributing to internal and cross-organizational projects. Her evaluation and research work has looked at informal learning opportunities and professional development offered by museums. Beyer has been integrally involved in the professional impacts evaluation studies for the NISE Network and was a key part of the research team for the ChemAttitudes project. E-mail: mbeyer@mos.org .

Emily Howell, Ph.D., is a postdoctoral fellow in the Department of Life Sciences Communication at the University of Wisconsin-Madison. Her work examines how to communicate controversial and policy-relevant science and technology issues. In particular, she focuses on how to increase communication and engagement across stakeholders with diverse experiences and opinions on important issues in science and society. E-mail: eleahyhowell@gmail.com .

Allison Anderson (she/her/hers) is a Senior Research and Evaluation Assistant at the Museum of Science, Boston. Her work at the Museum over the last five years has included a wide range of experiences, including multi-site projects, program development, and exhibition evaluation. She has been a supporting member of the research and evaluation team for multiple NISE Network projects, including the ChemAttitudes project. E-mail: aanderson@mos.org .

Owen Weitzman is a Research and Evaluation Assistant at the Museum of Science, Boston. He has worked at the Museum for the past three years, during which time he has supported the evaluation of a range of informal science education efforts, both at the MOS and in conjunction with the NISE Network. E-mail: oweitzman@mos.org .

Marjorie Bequette is Director of Evaluation and Research in Learning at the Science Museum of Minnesota. Her research interests include learning in museum settings and the development of equity practices with public audiences and museum staff members. E-mail: mbequette@smm.org .

Gretchen Haupt (she/her/hers) is an Evaluation and Research Associate at the Science Museum of Minnesota in St. Paul. She has over a decade of experience in audience research in informal learning environments, with a particular focus on exhibits and programs at science centers and museums. Haupt supported multiple NSF-funded grants through the NISE Network project. E-mail: ghaupt@smm.org .

Hever Velázquez (he/him) is an Evaluation and Research Associate at the Science Museum of Minnesota. Velázquez has six years of combined experience in research and evaluation for informal science education (ISE) programs and bilingual (English and Spanish) exhibit projects at science museums, across a wide range of topics. Velázquez's work advocates for the consideration and use of culturally responsive practices to serve all audiences, with a focus on underrepresented communities. E-mail: hvelazquez@smm.org .

Shiyu Yang (M.A., Marquette University) is a doctoral student in the Department of Life Sciences Communication at the University of Wisconsin-Madison. Her research primarily involves examining public discourse and opinion surrounding potentially controversial science and risk topics, and how digital media technologies affect these dynamics. E-mail: syang364@wisc.edu .

Dietram A. Scheufele is the Taylor-Bascom Chair in Science Communication and Vilas Distinguished Achievement Professor at the University of Wisconsin-Madison and in the Morgridge Institute for Research. His work examines the social effects of emerging science and technology. He is an elected member of the German National Academy of Science and Engineering, a lifetime associate of the National Research Council, and a fellow of the American Association for the Advancement of Science. E-mail: scheufele@gmail.com .