1 Introduction: breaking barriers to a dialogic encounter

Modern cosmology and astrophysics tell us that dark matter makes up 25% of the universe. While dark matter is five times more abundant than normal matter, it is almost impossible to detect, and its fundamental nature remains unknown. Through the cross-disciplinary collaboration between physicists and human-computer interaction experts described in this paper, we aim to create a multisensory, immersive experience that enables the public to feel dark matter with all their senses.

The dialogue model of public engagement demands a more participatory involvement of the public in all matters pertaining to scientific research [Bucchi and Trench, 2008]. However, there are structural barriers to the implementation of a truly dialogic approach to public engagement. Much of our scientific knowledge is transmitted via intellectual means, based on abstract concepts and gained through reading and other mostly visual means. Often, acquiring an understanding of a scientific subject requires high-level mathematical skills, thus creating an additional hurdle to engagement and interaction with the public at large.

This is especially true of astrophysics, whose objects of study are so far removed from the human scale that they are often hard to imagine. Yet the public at large is fascinated by the cosmos, perhaps a lingering reflection of our atavistic connection with the universe. In our experience, interest in astronomy and astrophysics is often cited as one of the main drivers for many prospective physics students applying to Imperial College London, one of the top ten research universities in the world.

In order to overcome such obstacles to a dialogic encounter with the public, we posited that grounding facts and abstract ideas in bodily experience might be a helpful way of creating meaning and widening participation, especially amongst non-expert and under-served audiences. Our aim is to design novel public engagement modalities that bypass traditional knowledge barriers between experts and non-experts, thus creating a forum for a participatory encounter between the scientists and the public [Trotta, 2018 ].

A well-established body of work already exists in making science, and in particular astronomy, more accessible by using touch and sound, mostly aimed at improving inclusivity for people with visual impairment (for a recent review, see [K. K. Arcand et al., 2017]; for a list of activities and resources, see [International Astronomical Union, 2019]). For example, the “Tactile Universe” project created 3D models of galaxies, used to engage visually impaired children in astronomy [Bonne et al., 2018]. Similarly, the “Tactile Collider” aims to engage visually impaired children with the field of particle physics, and demonstrates particle colliders through the use of 3D sound and large-scale tactile models [Dattaro, 2018]. Other work in this area includes, for example, astronomical activities specifically intended for people with special needs [Ortiz-Gil et al., 2011], and 3D tactile representations of Hubble space telescope images [Grice et al., 2015], of data from the Chandra X-ray Observatory [K. Arcand et al., 2019], of the Subaru telescope structure [National Astronomical Observatory of Japan, 2018], of the Eta Carinae nebula [Madura et al., 2015], and of cosmic microwave background radiation anisotropies [Clements, Sato and Fonseca, 2017], the last of which was later used for the tactile stimulation part of the g-ASTRONOMY pilot (see below). Sound has perhaps received less attention to date, but some sonification prototypes have been explored [Casado et al., 2017; Lynch, 2017]. Many of the educational projects funded by the International Astronomical Union’s Office of Astronomy for Development have a multisensory component [Office of Astronomy for Development, 2019].

2 An integrated multisensorial experience

While this project represents the first joint activity of the authors, each of them has experimented before with multisensory and innovative communication methods.

One of the authors (RT) explored some of the opportunities afforded by a multisensory approach with the project “g-ASTRONOMY”. g-ASTRONOMY aimed to break the assumption that astronomy and astrophysics can only be understood in terms of visual representation. In collaboration with a molecular gastronomy chef, RT created novel, elegant and edible metaphors for some of the universe’s most complex ideas. Evaluation demonstrated that this approach allows people to engage with some of the most important theories in astrophysics and astronomy in a new and accessible way. After acclaimed events [Trotta, 2016b] at Imperial Festival and Cheltenham Science Festival in 2016, g-ASTRONOMY was re-designed in collaboration with the Royal National Institute of Blind People, exclusively for people with sight loss. The workshop ran in 2017 and provided an immersive and interactive experience without the need for visual cues. Visitors simultaneously felt (thanks to 3D printed models) and tasted the evolution of our universe from the big bang to the formation of galaxies, and experienced the multiverse theory through how different universes might taste, rather than how they look. Participants described the experience as “life-changing” [Trotta, 2016a].

Another one of us (EJCM) initiated “What Matter’s”, an effort aimed at bringing together ten experimental and provocative design studios and ten innovative researchers from diverse backgrounds, ranging from historical preservation and artificial spider silk to nanotechnology and solar panels. The teams were given funding and six months to join forces and produce something. The guidelines were deliberately kept very broad. The results were combined into an exhibition that premiered at Dutch Design Week 2018. The outputs ranged from images of everyday objects made using processes for the manufacture of graphene to a passive room-temperature control system based on the physics of nanotubes [FormDesignCenter, 2019]. The project was a collaboration between Art & Science Initiative, Form Design Center and S-P-O-K.

In another, separate exploration, the team from the Sussex Computer Human Interaction (SCHI) Laboratory was involved in the creation of Tate Sensorium, a multisensory art display in London’s Tate Britain [Ablart et al., 2017], which went on to win the 2015 Tate Britain IK Prize award. The aim of this project was to design an art experience that involved all five traditional human senses. To achieve this goal, a cross-disciplinary collaboration between industry, sensory designers, and researchers was formed. Flying Object, a creative studio in London, led the project. The SCHI team advised on the design of the multisensory experiences, including new tactile sensations through a novel mid-air haptic technology [Carter et al., 2013] (which also formed part of this project), and on the evaluation of the visitors’ experiences [Obrist, Seah and Subramanian, 2013]. Tate Sensorium was open to the public between August 25 and October 4, 2015. Within this timeframe, 4,000 visitors experienced the selected art pieces in a new and innovative way. The authors collected feedback from 2,500 visitors through questionnaires and conducted 50 interviews to capture the subjective experiences of gallery visitors. Around 87 percent of visitors rated the experience as very interesting (at least 4 on a 5-point Likert scale), and around 85 percent expressed an interest in returning to the art gallery for such multisensory experiences [Vi et al., 2017].

Inspired by the ground-breaking possibilities of this approach, the SCHI Lab at the University of Sussex and a team of theoretical physicists and astrophysicists from Imperial College London joined forces to build a new platform for multisensory science communication. Astrophysics and cosmology offer the opportunity of exploring topics that are normally difficult to communicate to a non-expert audience. Multisensory representations allow physical concepts to be showcased without the need for technical and mathematical details, by exploiting instead the full potential of human sensory capabilities. Abstract concepts that, due to the constraints of traditional media, might be over-simplified in traditional public engagement approaches such as lectures, can find natural and powerful representations made possible by haptic technology, immersive audio, olfactory stimuli and even taste. This “embodiment of ideas” opens the door to a deeper emotional and intuitive response [Hamza-Lup and Stanescu, 2010; Gibbs, 2014; Obrist, Tuch and Hornbaek, 2014], rather than a purely intellectual one. Multi-channel sensory stimulation, including olfactory effects, has been reported to improve engagement, enjoyment and knowledge acquisition [Covaci et al., 2018; Olofsson et al., 2017; Brulé and Bailly, 2018].

A further potential benefit is the lowering of accessibility barriers, so as to reach audiences that might normally be overlooked by more traditional approaches, such as neurodiverse young adults or visually impaired people. It also has the potential to increase the “communication bandwidth”, with the combination of the five senses being larger than their sum. This would benefit not only people with sensorial or learning differences, but the public at large, as educational research shows [Metatla et al., 2019 ] that a more inclusive approach is beneficial to everybody.

This project goes one step further than previous work in this area, with the aim of seamlessly integrating all five senses to produce an even stronger sense of embodiment in the participants. We wished to explore and evaluate the following working hypotheses: firstly, that a multisensory experience generates a stronger and longer-lasting sense of engagement with the concept; and secondly, that a metaphorical approach to expressing cosmological ideas leads to a higher perception of relevance and increased curiosity about the phenomenon. We do not necessarily wish to transmit a large amount of information to the public; rather, we aim to stimulate engagement, curiosity and long-lasting impressions, hopefully leading participants to a positive attitude towards the underlying science, and to their further exploration of the topic via other channels.

3 A multisensory dark matter experience

To kick-start our collaboration and explore the possibilities it offers, we embarked on the design and pilot implementation of a multisensory experience showcasing the physics of dark matter. We chose dark matter as the subject of our pilot because it is an exciting concept at the frontiers of contemporary research, and intrinsically invisible in the conventional sense, thus symbolically tying in with the aims of our project. Dark matter is often discussed in the general media, so we wanted to propose a fresher, novel approach to an idea that the public at large might already have been exposed to, albeit in a more traditional manner.

Prompted by the concept of “a journey around the galaxy”, we set out to build a prototype experience that was engaging and exciting, but also scientifically accurate and meaningful. Our target audience was a general public of young adults participating in science-based social events. We carried out two events. Our pilot exhibit took place at the after-hours “Lates” event at London’s Science Museum on October 31, 2018 (henceforth, “the 2018 event”), open to an adult-only audience in the evening. A second exhibit, which built on and incorporated insights and public feedback from the pilot, took place at the same venue but as part of the daytime Great Exhibition Road Festival on June 29–30, 2019 (“the 2019 event”), which targeted mainly families. Given the venues, both located in the central London “Albertopolis” area with a high concentration of iconic museums and cultural institutions, we could expect a public of science-savvy, typically well-educated adults and families of middle-to-high socio-economic background.

3.1 An intimate journey for two

Early in our design process, we identified the need to shelter participants from the general hubbub of the exhibition space. This was necessary in order to maximise intimacy and focus on the multisensory aspects. We thus decided that the dark matter experience would take place inside an inflatable planetarium. This presented the added benefit of a black, fully enclosed and mysterious-looking structure that would hide the experience from participants waiting to enter, thus ensuring maximum surprise. The planetarium is designed for up to 10 participants, but we restricted the experience to two participants at a time. This was because of space restrictions due to the equipment needed inside the planetarium, and also to create what we hoped would feel like an intimate, personal experience inside the enclosure.

We did not brief participants on what to expect inside as they were waiting in the queue (for up to 45 minutes!), except to ask them to read and sign a health and safety waiver describing relevant aspects of the experience in sufficiently general terms.

The presented narrative of the multisensory dark matter experience was that the participants were to embark on a metaphorically and scientifically accurate (but physically impossible) journey through our galaxy after being transformed into dark matter detectors by a mysterious pill (vaguely inspired by the 1999 science-fiction movie “The Matrix”, starring Keanu Reeves) taken at the beginning of the experience.

3.2 Coordinated sensorial stimuli

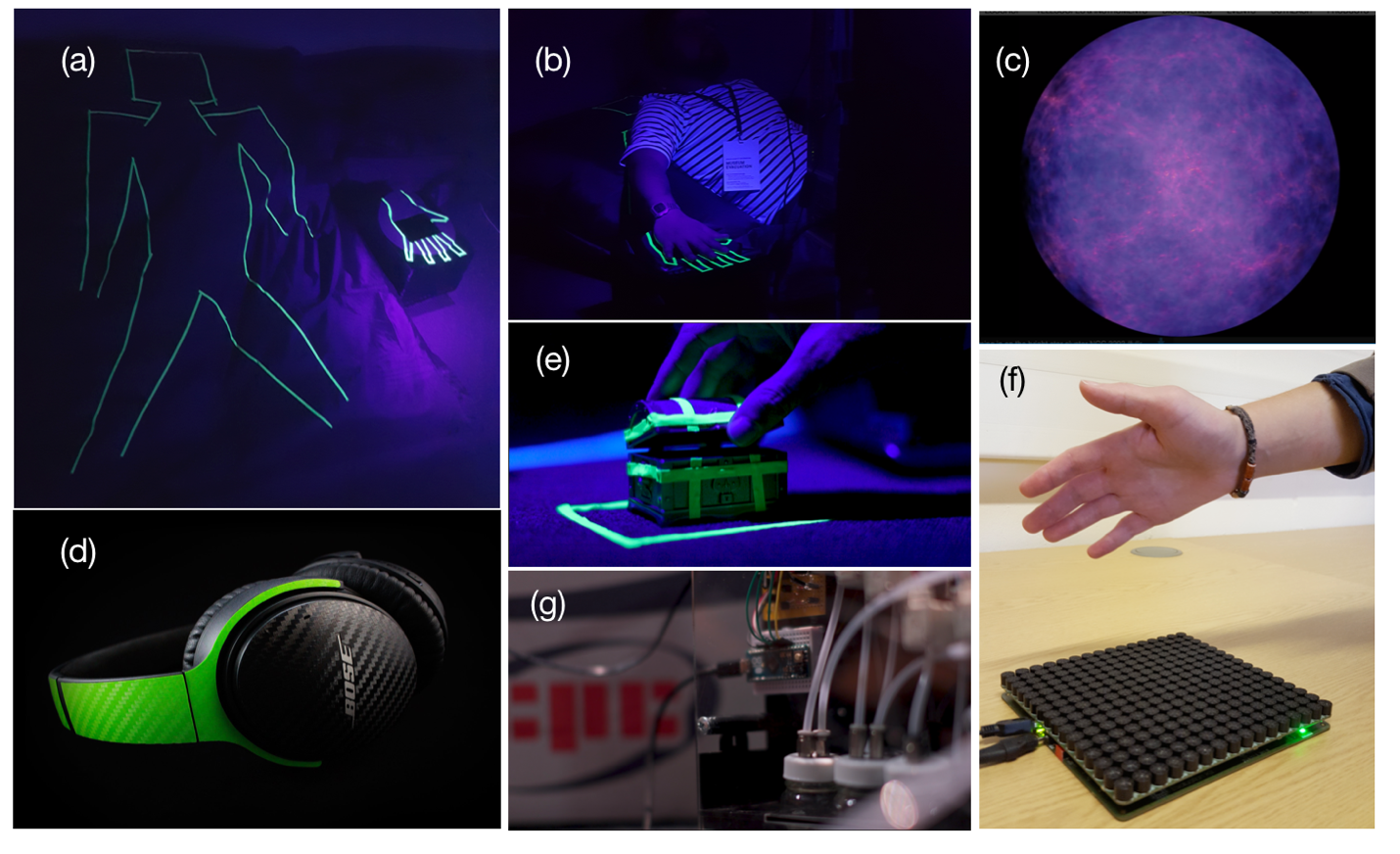

The journey was presented through timed visual, auditory, tactile, olfactory and gustatory stimuli within the planetarium. Upon reaching the head of the queue, each of the two participants received noise-cancelling headphones (Bose QC35) and was led into the planetarium. There, they were directed to recline on large, black bean-bags lying on the floor, where a fluorescent outline of a human body indicated the position they needed to assume. Next to each bean-bag were placed a small metal container with fluorescent borders and a black box with a fluorescent outline of a hand. Figure 1 shows an overview of the elements of the setup.

Once the participants were lying comfortably on the bean-bags, the auditory track started in the headphones. A deep, friendly voice described the narrative and instructed visitors to open the small metal container, where they would find an “experimental powder designed to increase the acuity of [their] senses beyond the physically possible”. After being given the option to take the pill, they were instructed to place one of their hands on top of the black box, within the hand outline. While participants settled in and received the introductory instructions through the headphones, a projection of the visual representation of scientific simulations of dark matter was presented in the planetarium. This was turned off to create darkness when other sensory stimulation kicked in, in order to re-direct the participants’ attention.

The voice then accompanied the participants along their metaphorical journey through our galaxy, while coordinated auditory, tactile and olfactory stimuli represented the dark matter wind and dark matter density along the journey. The trip started on Earth, then took the travelers to the outskirts of our galaxy and back, to finish by falling into the supermassive black hole at its center.

The coordinated stimuli were based, at different levels of sophistication, on scientific results and data. Below we provide a short description of each stimulus.

- Audio: a dark matter sound was artificially engineered for the experience, generating a storm-like but unfamiliar auditory sensation. It varied in intensity, pitch and texture to represent the concepts of dark matter wind during an earth-year and its density profile in our galaxy, adapted from mathematical models used in research.

A voice-over described the practical aspects for the participants to get set up, illustrating the concept behind the journey and giving instructions. It then guided the participants through the journey, describing physical concepts and phenomena in complete coordination with all other stimuli. The voice-over was recorded by BBC presenter and Science Communication lecturer Gareth Mitchell, in studio quality to maximise the listening experience. The language used was free of jargon. The tone and content were authoritative but slightly tongue-in-cheek. For example, towards the end of the journey, the voice said: “You are now travelling at faster-than-light speed towards the central black hole of our galaxy, where the dark matter density is greatest. This is a journey that can only end when you fall into oblivion in the singularity of the central black hole. [Pause; dark matter stimuli increase in intensity; then, total silence and all sensory stimuli stop] You have been annihilated, but have no fear: the atoms of your body will be recycled in the form of pure energy, in a few billions of years from today”. The audio track with the narrated voice-over is available online [Trotta et al., 2019].

- Video: participants were welcomed inside the dome by a dark matter simulation [European Southern Observatory, 2015 ] that set the tone for a suitably atmospheric experience. During the 2018 event, the video was switched off once the experience began to encourage focus on the other senses. During the 2019 event (which ran over two days), we switched the video off during Day 1, but kept it running during Day 2, in order to evaluate the relative weight of the visual stimulus over the others.

- Touch: an ultrasonic mid-air haptic device was placed inside the black box with the hand outline. Mid-air haptics describes the technological solution for generating tactile sensations on a user’s skin, utilizing acoustic radiation pressure, displayed in mid-air without any attachment on the user’s body [Carter et al., 2013 ]. We used a UHEV1 device manufactured by Ultraleap Limited (www.ultraleap.com). The device was able to produce the tactile sensations according to the change in dark matter wind during an earth-year, and its density profile in our galaxy. This was synchronized with the audio.

- Smell: smell is strongly connected with emotions and memories. Adding smell to the dark matter experience was intended to engage the participants’ emotions and thus boost their memory of the experience itself. A black-pepper essential oil was selected because of its cross-modal properties: freshness, coldness and pungency. It was delivered using a scent-delivery device developed at the SCHI Lab [Dmitrenko, Maggioni and Obrist, 2017]. The scent was synchronised with the other stimuli twice along the journey, with different intensities reflecting local spikes of dark matter density.

- Taste: the capsule offered to participants, made out of vegetable ingredients, contained unflavoured popping candy that dissolved into a sweet taste inside the mouth. Once consumed, it created a surprisingly strong crackling effect inside the mouth and skull, amplified by the subject wearing noise-cancelling headphones.
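The text does not specify which research models drove the audio and haptic intensities. As a rough illustration only, the sketch below assumes two standard textbook choices: a sinusoidal annual modulation of the Earth's speed through the dark matter halo, and a Navarro-Frenk-White (NFW) density profile. All parameter values are commonly quoted approximations, and the function names and the `to_intensity` mapping are hypothetical, not the authors' implementation.

```python
import math

# Assumed, approximate values (not taken from the paper).
V_SUN_KM_S = 232.0      # Sun's speed through the halo
V_EARTH_KM_S = 29.8     # Earth's orbital speed
COS_ORBIT_TILT = 0.5    # cos(60 deg): tilt of Earth's orbit w.r.t. the Sun's motion
PEAK_DAY = 152          # day of year of maximum wind speed (about 2 June)
DAYS_PER_YEAR = 365.25


def wind_speed_km_s(day_of_year: float) -> float:
    """Earth's speed relative to the halo, modulated over one year."""
    phase = 2.0 * math.pi * (day_of_year - PEAK_DAY) / DAYS_PER_YEAR
    return V_SUN_KM_S + V_EARTH_KM_S * COS_ORBIT_TILT * math.cos(phase)


def nfw_relative_density(r_kpc: float, scale_radius_kpc: float = 20.0) -> float:
    """NFW density in units of its characteristic density.

    Diverges as r_kpc approaches 0, so callers should keep r_kpc > 0.
    """
    x = r_kpc / scale_radius_kpc
    return 1.0 / (x * (1.0 + x) ** 2)


def to_intensity(value: float, lowest: float, highest: float) -> float:
    """Hypothetical linear mapping of a physical value to a 0..1 stimulus level."""
    fraction = (value - lowest) / (highest - lowest)
    return min(1.0, max(0.0, fraction))
```

Under these assumptions the wind speed varies by only a few percent over the year, while the density rises steeply towards the galactic centre, so any mapping onto sound or touch would need to exaggerate the former and compress the latter.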

At the end of the journey, the voice invited participants to leave feedback upon exiting, and concluded with “May the dark matter be with you”. The whole experience lasted 3 minutes.

The 2018 event was filmed and the footage was edited into a short (less than 4 minutes) video, whose purpose was both to document the evening and to help disseminate the project further. The video is available on YouTube (see: https://www.youtube.com/watch?v=zKRsjGqz5Ls, accessed: April 2, 2020).

4 Feedback and evaluation

Once outside the planetarium after the experience, participants were asked to fill out a feedback questionnaire. For the 2018 event, a short self-report questionnaire aimed to evaluate the overall liking of the experience and the feeling of immersion, but no demographic data were collected. The liking of each single sensory modality (i.e., audio track, scent, touch) was captured on a 9-point Likert scale. Visitors were also asked how likely they were to learn more about dark matter, how confident they felt explaining dark matter to a friend, and how strongly they would recommend the experience to others. At the end of the questionnaire, we asked the participants to use three words to describe the experience.

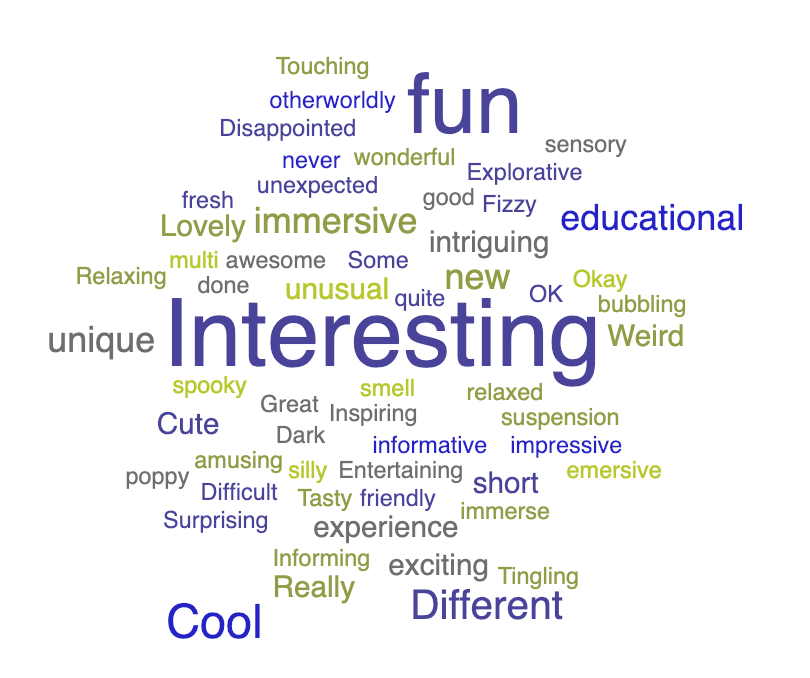

The 2018 installation was extremely popular, exceeding our expectations, with people waiting up to 45 minutes to participate. An estimated 60–70 participants attended the planetarium during the 3 hours of the event, of whom 46 answered the self-report questionnaire. Visitors gave high ratings for overall liking, immersion, liking of each single sensory modality and the likelihood of recommending the experience to a friend (mean ratings of 7.0 on a 9-point Likert scale, standard deviation of 1.2). Their self-reported confidence in explaining the concept of dark matter to a friend was relatively low (mean rating of 5.0 on a 9-point Likert scale, standard deviation of 2.0). Figure 2 presents a word-cloud of the descriptors used by the participants, including for instance various references to ‘interesting’, ‘fun’, ‘cool’, and ‘educational’.

For the 2019 event, we revised the questionnaire and introduced new evaluation methods suggested in the literature [Grand and Sardo, 2017], as well as methods arising from our internal evaluation of the pilot. According to the health and safety forms (which all participants had to fill out before taking part in the experience), we had 108 visitors on Day 1 and 114 on Day 2, for a total of 222 visitors. Nominally, this translates to 18.5 visitors/hr, with an average turnaround time per pair of visitors of 6.5 minutes. This, however, includes latency due to technical difficulties. Improving on the 2018 event, for this second run we collected demographic data for 159 visitors (return rate 72%). When we asked “What mainly attracted you to the Dark Matter event?”, “Curiosity on multi-sensory aspects” was the second most common reason for participating in the event (44 people), almost as common as “Desire to learn” (48 people). Prior knowledge about dark matter was fairly low (mean=3.4, SD=1.9, mode=2, on a Likert scale 0–9), but awareness was high (85% were aware of the concept). While prior interest in science was unsurprisingly high (mean=7.3, SD=1.8, mode=9) given the type of event, the level of education and professional background of our public was fairly varied. We had a good gender balance and a relatively varied mix of ethnicities. The most represented age group was 10–14 years old, with visitors distributed across all ages (0 to 85+). We had 12 visitors who self-identified as having a disability.
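The attendance figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers stated in the text; the variable names are ours, and the total opening time is not reported by the authors but merely implied by the quoted visitor rate.

```python
# Figures quoted in the text for the 2019 event.
VISITORS_DAY_1 = 108
VISITORS_DAY_2 = 114
DEMOGRAPHIC_RESPONSES = 159
VISITORS_PER_HOUR = 18.5   # nominal rate quoted in the text
GROUP_SIZE = 2             # participants entered in pairs

total_visitors = VISITORS_DAY_1 + VISITORS_DAY_2
return_rate_percent = 100 * DEMOGRAPHIC_RESPONSES / total_visitors
minutes_per_pair = 60 * GROUP_SIZE / VISITORS_PER_HOUR
# Derived quantity, not stated in the text: hours implied by the quoted rate.
implied_open_hours = total_visitors / VISITORS_PER_HOUR

print(f"total visitors:      {total_visitors}")
print(f"return rate:         {return_rate_percent:.0f}%")
print(f"turnaround per pair: {minutes_per_pair:.1f} min")
print(f"implied open hours:  {implied_open_hours:.1f}")
```

The computed return rate and turnaround time round to the 72% and 6.5 minutes reported above.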

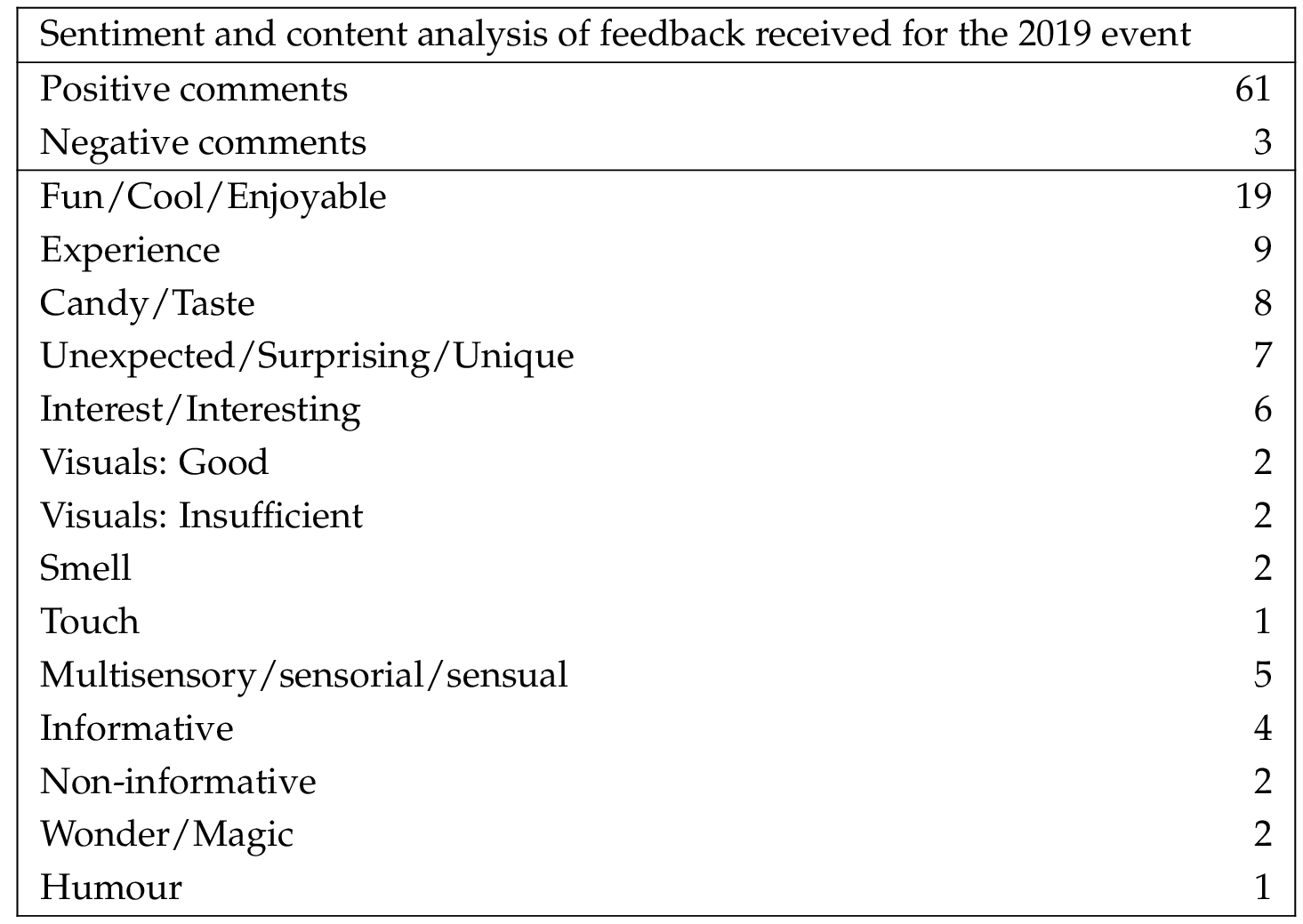

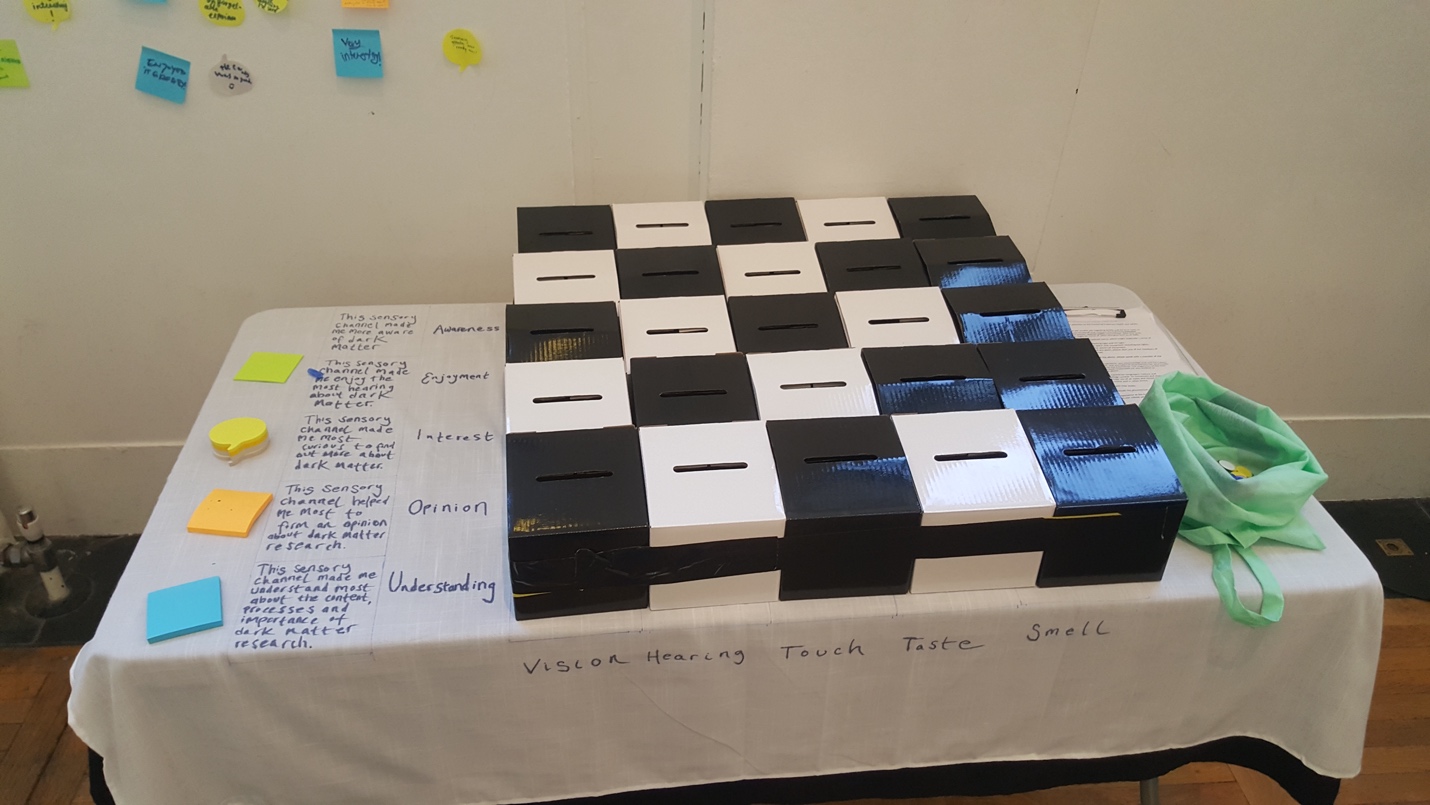

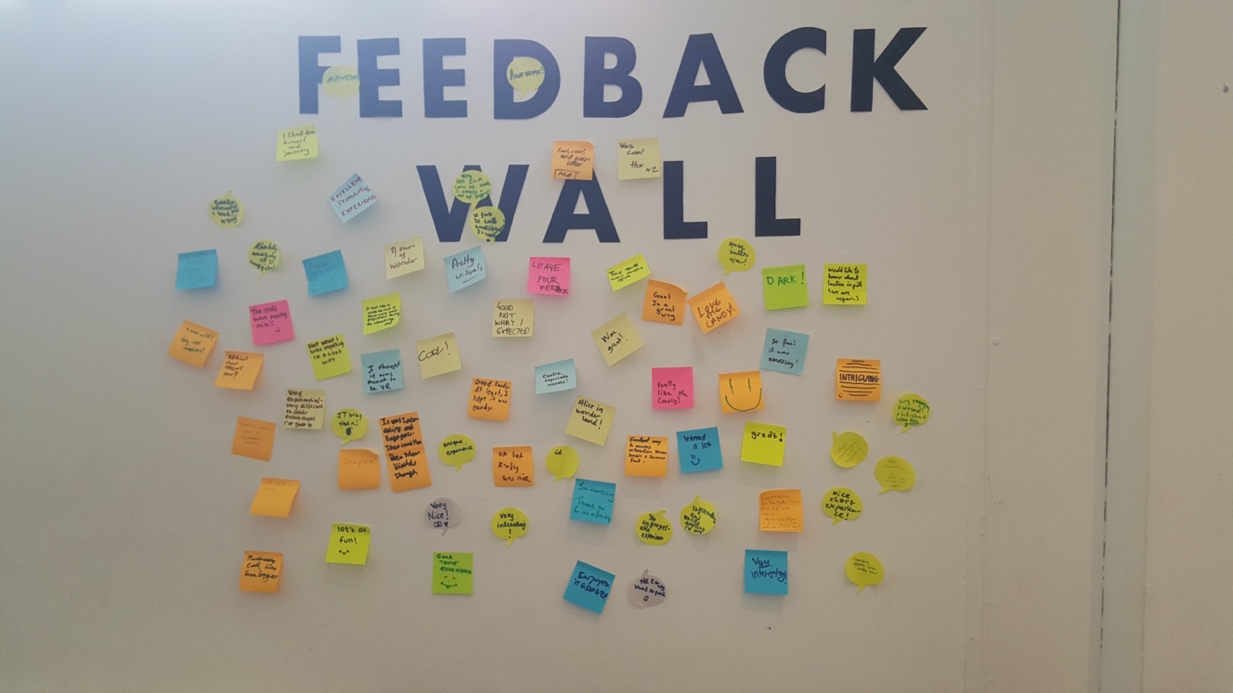

We evaluated the public’s experience using two mechanisms: a multi-dimensional feedback matrix of sensory channel vs. personal response, and the opportunity for the public to leave comments on post-its on a feedback wall right after the experience. A third evaluation method, a planned social media campaign, did not materialise because we did not devote enough attention to launching it during the event. We categorized the post-it replies and grouped them according to themes and sentiment. Visitors left a total of 67 post-its on the wall (a return rate of 30%): 61 were positive, 3 neutral and 3 negative. A summary is shown in Table 1 and a photo of the actual wall in Figure 5.
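As a quick check of the post-it figures, the sketch below recomputes the return rate and the share of each sentiment from the counts quoted in the text (67 post-its from 222 visitors). The helper name `percentage_shares` is ours and purely illustrative.

```python
# Counts quoted in the text for the 2019 feedback wall.
POST_ITS = {"positive": 61, "neutral": 3, "negative": 3}
TOTAL_VISITORS = 222  # Day 1 + Day 2, from the health and safety forms


def percentage_shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each category's share of the total, in percent."""
    total = sum(counts.values())
    return {label: 100 * n / total for label, n in counts.items()}


total_post_its = sum(POST_ITS.values())
return_rate_percent = 100 * total_post_its / TOTAL_VISITORS
shares = percentage_shares(POST_ITS)

print(f"post-its: {total_post_its}, return rate: {return_rate_percent:.0f}%")
for label, share in shares.items():
    print(f"{label}: {share:.0f}%")
```

On these counts, roughly nine in ten post-its were positive.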

5 Lessons learnt

Our motivation for developing a proof-of-concept experience was both to explore the cross-disciplinary opportunities afforded by pooling our respective expert knowledge, and to identify the shortcomings inherent in breaking new ground.

A significant source of improvements lies in the logistics of the experience itself. In the 2018 pilot, we were surprised and outpaced by the level of interest and sheer number of participants, with many leaving the queue, frustrated, after a long wait. Naturally, our main focus was on the experience that the participants would have upon entering the planetarium. However, during the event we realized the one-on-one engagement potential of having a large number of people queuing with anticipation. While we did engage queuing people in meaningful conversations (Figure 3), this was not something for which we had a strategy as a complement to the multisensory experience itself. Engaging with the crowd can be used both to increase their awareness of the underlying science and technology, and to provide them with instructions and information that can streamline the logistics once it is their turn to participate. In the 2019 event, we engaged the public before the experience via a purpose-built quiz of five multiple-choice questions on dark matter. The quiz was a significant improvement: it engaged the public effectively and, once the correct answers were revealed with a UV pen, it also generated a great deal of discussion.

While the actual experience inside the planetarium was designed to last 3 minutes (thus allowing for a theoretical throughput of 40 people per hour), we found that considerable time was spent in changing over participants, thus reducing the number of people we could accommodate and dramatically increasing queuing time. One positive, sociological aspect of the long queue that formed outside the planetarium was that visitors to the museum (where many different stands and stalls were competing for the public’s attention) felt naturally drawn to what appeared to be an extremely popular attraction (the “herd effect”).
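The gap between theoretical and actual throughput can be made explicit with a short calculation. The sketch below reproduces the theoretical figure of 40 people per hour and estimates the changeover time implied by the 2018 attendance (an estimated 60–70 participants in 3 hours). It assumes every session hosted exactly two participants, which the text does not guarantee; the function names are ours.

```python
def people_per_hour(minutes_per_session: float, people_per_session: int = 2) -> float:
    """Throughput if sessions run back to back with no gaps."""
    return 60.0 / minutes_per_session * people_per_session


def changeover_minutes(
    observed_people_per_hour: float,
    experience_minutes: float = 3.0,
    people_per_session: int = 2,
) -> float:
    """Average time per session not spent on the experience itself."""
    minutes_per_session = 60.0 * people_per_session / observed_people_per_hour
    return minutes_per_session - experience_minutes


theoretical = people_per_hour(3.0)            # 3-minute experience, pairs
slow_case = changeover_minutes(60 / 3)        # 60 participants in 3 hours
fast_case = changeover_minutes(70 / 3)        # 70 participants in 3 hours

print(f"theoretical throughput: {theoretical:.0f} people/hr")
print(f"implied changeover: {fast_case:.1f} to {slow_case:.1f} min per pair")
```

Under these assumptions, changing over participants took about as long as the experience itself, which is why throughput was roughly half the theoretical value.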

In our enthusiasm to deliver the 2018 pilot multisensory experience, we also neglected to put a stronger emphasis on the novel nature of our collaboration. For some participants, the crucial fact that the experience was scientifically accurate and designed by actual dark matter researchers in collaboration with human-computer interaction experts did not come through. Similarly, the fact that cutting-edge haptic technology was being used and customized for this event was not properly communicated. Emphasizing such aspects might help draw more attention to the details and science behind the experience, and give further direction to a perception-heavy experience.

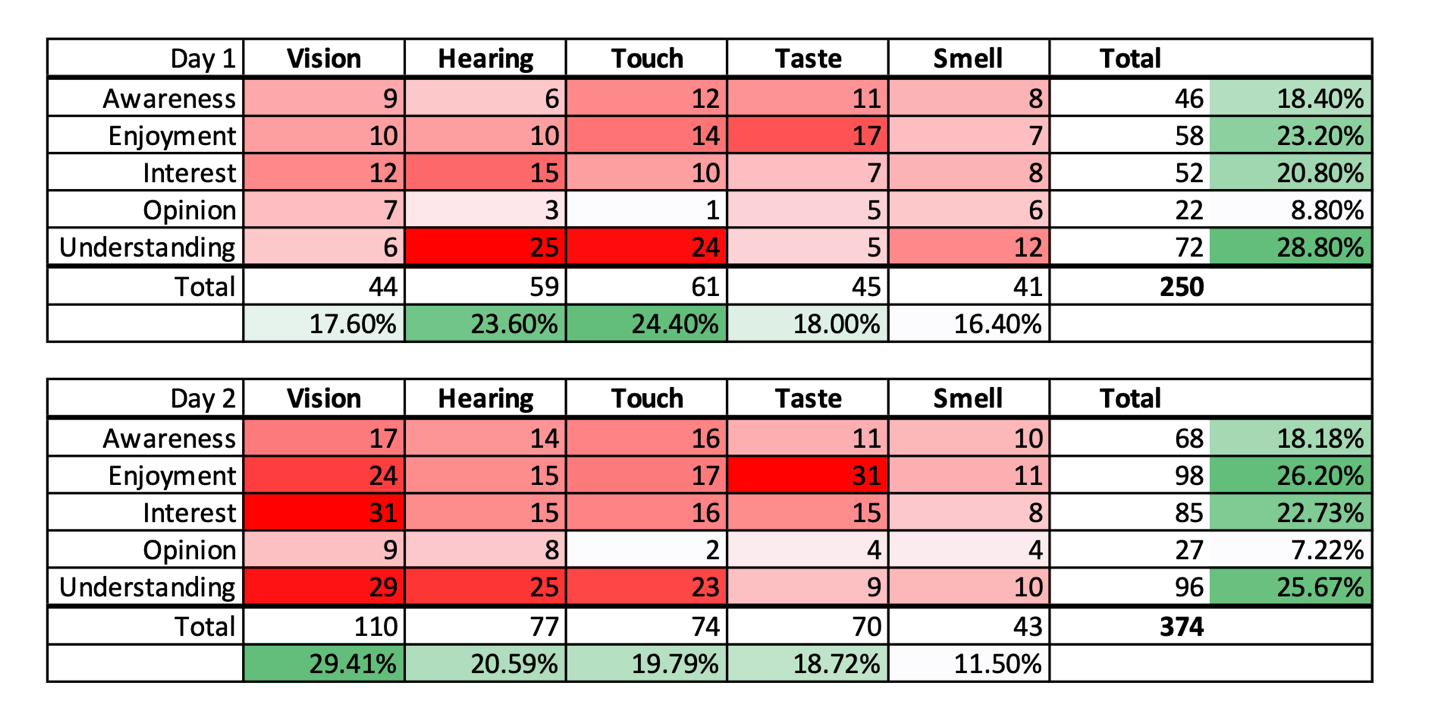

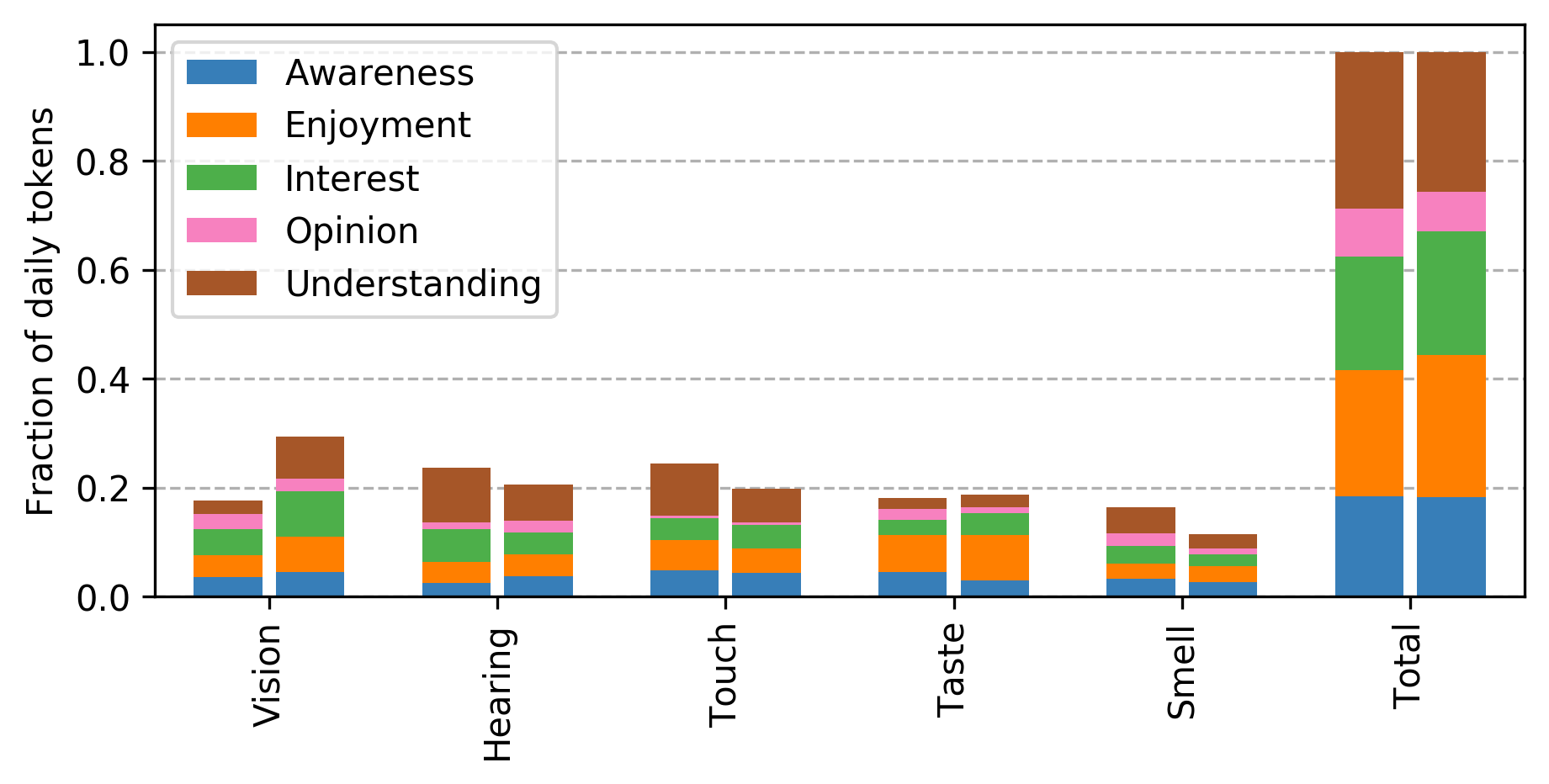

From participants’ qualitative feedback after the 2018 event, we concluded that we needed to understand better the emotional load behind the different stimuli and fine-tune their relative intensity. For example, some participants had a strong reaction to the popping candy, which could be perceived as more distracting than enhancing. Others reported that the surprising effect of the candy set the tone for the whole experience that followed. For this reason, for the 2019 event we designed a mechanism to evaluate the role of the sensory channels in the personal responses evoked. For the multi-sensory matrix (see Figure 4 for setup and results), each participant was given 3 tokens to vote for the three strongest combinations of sensory channel and felt personal response. The results showed that on Day 1 participants thought that Hearing and Touch were the predominant sensory modalities, with Taste topping the Enjoyment category. On Day 2, we changed the way the dark matter visual stimulation was run, keeping it on for the entire stay inside the dome (unlike Day 1, when the visuals were switched off once the visitors had settled down at the beginning of the recording). This change led to Vision becoming the most important sensory mode, topping even Hearing in the Understanding category. These results suggest that taking away the visual modality (sensory deprivation) might enhance the perceived value of the other senses. Taste remained the most popular contributing factor to Enjoyment on Day 2. In terms of Understanding, Smell and Taste both ranked much lower than any of the other senses, while Taste and Touch were comparatively more important for the Awareness dimension.

6 Conclusions and future work

We are continuing the development of the experience, in a rapid-response design approach. Based on the feedback received and critical reflection upon the pilot exhibition in the Science Museum, we are refining the multisensory experience design to better achieve our aims of embodiment and emotional response from the participants.

We are also aware that dissemination of the ideas behind our project is important if we want as many people as possible to experience it, which is one of the motivations for this article. In particular, we would like other science communicators to be able to reproduce the experience locally. An obvious obstacle to this ambition is the cost and technical complexity of some of the kit involved: not every science communicator will have easy access to a portable planetarium, a mid-air haptic display, wireless noise-cancelling headphones and a scent-delivery device! This is why we would like to design a more portable version of the experience, using everyday, easily accessible and cheap technology. We intend to make blueprints and instructions freely available online, including all the elements necessary to reproduce the experience locally. This should further improve accessibility, while providing us with the opportunity to reach a larger public with our experimental science communication. We hope that this pilot project will help establish the value that a multisensory experience design approach can bring to science communication.

Acknowledgments

We would like to thank Gareth Mitchell (Centre for Languages, Culture and Communication, Imperial College London) for providing the voice-over to the experience; Wahidur Rahman and Thomas Binnie for supporting the event; the Outreach Team and the Director of Outreach at Imperial College London, Andrew Tabbutt, for providing the planetarium; Lina Kabbadj and Charlie Jones for filming and editing the video documenting the event; Aran Shaunak (Engagement Manager, London Science Museum) for giving us the opportunity to participate in the Lates event; the Science and Technology Facilities Council (U.K.) and the Imperial College London Societal Engagement Seed Fund for financial support, and the Science Communication Unit at Imperial College London for audiovisual support. We acknowledge the use of a visualization of the Aquarius Simulation by Volker Springel & the VIRGO Collaboration. The contribution by the SCHI Lab team at the University of Sussex has been supported by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program under the Grant No.: 638605.

References

- Ablart, D., Velasco, C., Vi, C. T., Gatti, E. and Obrist, M. (2017). ‘The how and why behind a multisensory art display’. interactions 24 (6), pp. 38–43. https://doi.org/10.1145/3137091.

- Arcand, K. K., Watzke, M., DePasquale, J., Jubett, A., Edmonds, P. and DiVona, K. (2017). ‘Bringing cosmic objects down to earth: an overview of 3D modeling and printing in astronomy and astronomy communication’. Communicating Astronomy with the Public Journal 1 (22), pp. 14–20. URL: https://www.capjournal.org/issues/22/22_14.pdf.

- Arcand, K., Jubett, A., Watzke, M., Price, S., Williamson, K. and Edmonds, P. (2019). ‘Touching the stars: improving NASA 3D printed data sets with blind and visually impaired audiences’. JCOM 18 (04), A01. https://doi.org/10.22323/2.18040201.

- Bonne, N. J., Gupta, J. A., Krawczyk, C. M. and Masters, K. L. (2018). ‘Tactile universe makes outreach feel good’. Astronomy & Geophysics 59 (1), pp. 1.30–1.33. https://doi.org/10.1093/astrogeo/aty028.

- Brulé, E. and Bailly, G. (2018). ‘Taking into account sensory knowledge: the case of geo-technologies for children with visual impairments’. In: Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, CHI ’18. New York, NY, U.S.A.: ACM Press. https://doi.org/10.1145/3173574.3173810.

-

Bucchi, M. and Trench, B., eds. (2008). Handbook of Public Communication of Science and Technology. London, U.K. and New York, U.S.A.: Routledge.

-

Carter, T., Seah, S. A., Long, B., Drinkwater, B. and Subramanian, S. (2013). ‘UltraHaptics: multi point mid-air haptic gesture feedback on touch surfaces’. In: Proceedings of the 26th annual ACM symposium on User interface software and technology — UIST ’13 (St. Andrews, Scotland, U.K.). New York, NY, U.S.A.: ACM Press. https://doi.org/10.1145/2501988.2502018 .

-

Casado, J., Cancio, A., García, B., Diaz-Merced, W. and Jaren, G. (2017). ‘Sonification prototypes review based on human-centred processes’. In: Proceedings of the XXI Congreso Argentino de Bioingeniería — X Jornadas de Ingeniería Clínica (Universidad Nacional de Cordoba, Cordoba, Argentina).

-

Clements, D. L., Sato, S. and Fonseca, A. P. (2017). ‘Cosmic sculpture: a new way to visualise the cosmic microwave background’. European Journal of Physics 38 (1), p. 015601. https://doi.org/10.1088/0143-0807/38/1/015601 .

-

Covaci, A., Ghinea, G., Lin, C.-H., Huang, S.-H. and Shih, J.-L. (2018). ‘Multisensory games-based learning — lessons learnt from olfactory enhancement of a digital board game’. Multimedia Tools and Applications 77 (16), pp. 21245–21263. https://doi.org/10.1007/s11042-017-5459-2 .

-

Dattaro, L. (13th March 2018). ‘LHC exhibit expands beyond the visual’. Symmetry Magazine . URL: https://www.symmetrymagazine.org/article/lhc-exhibit-expands-beyond-the-visual (visited on 5th April 2019).

-

Dmitrenko, D., Maggioni, E. and Obrist, M. (2017). ‘OSpace: towards a systematic exploration of olfactory interaction spaces’. In: Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces — ISS ’17 (Brighton, U.K.). New York, NY, U.S.A.: ACM Press, pp. 171–180. https://doi.org/10.1145/3132272.3134121 .

-

European Southern Observatory (2015). Aquarius Simulation rendering for planetarium by European Southern Observatory . URL: https://www.eso.org/public/videos/aquarius_springel/ (visited on 1st April 2019).

-

FormDesignCenter (2019). what matter_s . URL: https://www.formdesigncenter.com/en/utstallningar/dutch-design-week/ (visited on 1st April 2019).

-

Gibbs, L. (2014). ‘Arts-science collaboration, embodied research methods and the politics of belonging: ‘SiteWorks’ and the Shoalhaven River, Australia’. Cultural Geographies 21 (2), pp. 207–227. https://doi.org/10.1177/1474474013487484 .

-

Grand, A. and Sardo, A. M. (2017). ‘What works in the field? Evaluating informal science events’. Frontiers in Communication 2. https://doi.org/10.3389/fcomm.2017.00022 .

-

Grice, N., Christian, C., Nota, A. and Greenfield, P. (2015). ‘3D printing technology: a unique way of making Hubble Space Telescope images accessible to non-visual learners’. JBIR: Journal of Blindness Innovation and Research 5 (1). URL: https://nfb.org/images/nfb/publications/jbir/jbir15/jbir050101.html .

-

Hamza-Lup, F. G. and Stanescu, I. A. (2010). ‘The haptic paradigm in education: challenges and case studies’. The Internet and Higher Education 13 (1-2), pp. 78–81. https://doi.org/10.1016/j.iheduc.2009.12.004 .

-

International Astronomical Union (2019). Astronomy for equity and inclusion . IAU division C commission C1 WG3. URL: http://sion.frm.utn.edu.ar/iau-inclusion/?page_id=27 (visited on 1st April 2019).

-

Lynch, J. F. (2017). ‘Acoustics and astronomy’. Acoustics Today 13 (4), pp. 27–34. URL: https://acousticstoday.org/acoustics-astronomy-james-f-lynch/acoustics-and-astronomy-james-f-lynch/ .

-

Madura, T. I., Clementel, N., Gull, T. R., Kruip, C. J. H. and Paardekooper, J.-P. (2015). ‘3D printing meets computational astrophysics: deciphering the structure of η Carinae’s inner colliding winds’. Monthly Notices of the Royal Astronomical Society 449 (4), pp. 3780–3794. https://doi.org/10.1093/mnras/stv422 .

-

Metatla, O., Maggioni, E., Cullen, C. and Obrist, M. (2019). ‘“Like popcorn”: investigating crossmodal correspondences between scents, 3D shapes and emotions in children’. In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems — CHI ’19 (Glasgow, Scotland, U.K.). New York, NY, U.S.A.: ACM Press. https://doi.org/10.1145/3290605.3300689 .

-

National Astronomical Observatory of Japan (2018). Making tactile models with a 3D printer: the Subaru Telescope . URL: http://prc.nao.ac.jp/3d/subaru_top_e.html (visited on 1st April 2019).

-

Obrist, M., Seah, S. A. and Subramanian, S. (2013). ‘Talking about tactile experiences’. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems — CHI ’13 (Paris, France). New York, NY, U.S.A.: ACM Press. https://doi.org/10.1145/2470654.2466220 .

-

Obrist, M., Tuch, A. N. and Hornbaek, K. (2014). ‘Opportunities for odor: experiences with smell and implications for technology’. In: Proceedings of the 32nd annual ACM conference on Human factors in computing systems — CHI ’14 (Toronto, ON, Canada). New York, NY, U.S.A.: ACM Press, pp. 2843–2852. https://doi.org/10.1145/2556288.2557008 .

-

Office of Astronomy for Development (2019). Projects funded by the Office of Astronomy for Development . URL: http://www.astro4dev.org/projects-funded/ (visited on 1st April 2019).

-

Olofsson, J. K., Niedenthal, S., Ehrndal, M., Zakrzewska, M., Wartel, A. and Larsson, M. (2017). ‘Beyond Smell-o-Vision: possibilities for smell-based digital media’. Simulation & Gaming 48 (4), pp. 455–479. https://doi.org/10.1177/1046878117702184 .

-

Ortiz-Gil, A., Collado, G. M., Nuñez, S. M., Blay, P., Guirado, J. C., Calvente, A. T. G. and Lanzara, M. (2011). ‘Communicating astronomy to special needs audiences’. CAPjournal 11, pp. 12–15. URL: https://www.capjournal.org/issues/11/11_12.pdf .

-

Trotta, R. (2016a). g-ASTRONOMY: the taste of the cosmos for people with visual impairment . [YouTube video]. URL: https://www.youtube.com/watch?v=_GEtpf1ai4o (visited on 5th April 2019).

-

— (28th June 2016b). Out of this world cuisine . [PhysicsWorld podcast]. URL: https://physicsworld.com/a/out-of-this-world-cuisine/ (visited on 27th March 2019).

-

— (2018). ‘The hands-on universe: making sense of the universe with all your senses’. Communicating Astronomy with the Public Journal 1 (23), pp. 20–25. URL: https://www.capjournal.org/issues/23/23_20.pdf .

-

Trotta, R., Hajas, D., Camargo-Molina, E., Cobden, R., Maggioni, E. and Obrist, M. (2019). Dark matter multisensory experience: voiceover . (narrated by Gareth Mitchell). URL: https://doi.org/10.5281/zenodo.3737785 .

-

Vi, C. T., Ablart, D., Gatti, E., Velasco, C. and Obrist, M. (2017). ‘Not just seeing, but also feeling art: mid-air haptic experiences integrated in a multisensory art exhibition’. International Journal of Human-Computer Studies 108, pp. 1–14. https://doi.org/10.1016/j.ijhcs.2017.06.004 .

Authors

Roberto Trotta. Roberto Trotta is Professor of Astrostatistics and a science communicator at Imperial College London, where he studies dark matter, dark energy and the Big Bang. He is the director of Imperial’s Centre for Languages, Culture and Communication. Roberto is the recipient of numerous awards for his public engagement activities, including the Lord Kelvin Award of the British Association for the Advancement of Science and the Annie Maunder Medal 2020 of the Royal Astronomical Society. His award-winning first book for the public, “The Edge of the Sky”, explains the Universe using only the 1,000 most common words in English. Roberto was named one of the 100 Global Thinkers 2014 by Foreign Policy, for “junking astronomy jargon”. E-mail: r.trotta@imperial.ac.uk .

Daniel Hajas. Daniel obtained an M.Phys in theoretical physics from the University of Sussex and is a second-year PhD student at the SCHI Lab. Daniel works at the intersection of mid-air haptics, science communication and Human-Computer Interaction. His research explores the use of tactile experiences to provoke personal responses, such as interest or enjoyment, that are known to be relevant in science communication. With the help of multisensory technologies, Daniel hopes to contribute to making invisible phenomena of nature more tangible, more ‘real’, and therefore more digestible for the public. E-mail: dh256@sussex.ac.uk .

José Eliel Camargo-Molina. Eliel is a theoretical physicist working at the intersection of particle physics and cosmology at Imperial College London, developing new theories on the origin of dark matter and dark energy, as well as computational tools and methods for testing such ideas with experimental data. Eliel is co-founder and director of Art & Science Initiative (ASI), an organization aiming to facilitate and participate in interdisciplinary collaborations. The latest ASI project, “what matter_s”, was nominated for the 2019 NICE awards by the European centre for creative economy. E-mail: j.camargo-molina@imperial.ac.uk .

Robert Cobden. Robert is a computer scientist working as a research technician and software developer in the SCHI Lab. His focus is on creating a toolkit to standardize the design of olfactory experiences and advance scent-delivery technology through innovative hardware designs. Building on his recent smell-based research, with applications such as notifications and virtual reality, Robert is also co-founder of OWidgets and aims to revolutionize the way we use scent in our everyday lives. E-mail: rc316@sussex.ac.uk .

Emanuela Maggioni. Emanuela is a Royal Academy of Engineering Enterprise Fellow at the University of Sussex and CEO & Co-Founder of OWidgets. From 2016 to 2019, she was a research fellow in Multisensory Experiences at the SCHI Lab, working on smell-based interaction solutions for in-car experiences, desktop notifications, and VR. She received a PhD in Cognitive Psychology on smell, emotions and decision making in the context of consumer behaviour from the University of Milano-Bicocca, with visiting research periods at the University of Oxford and UCL. She is passionate about the opportunities around smell and how smell can be used in multisensory interaction and experience design to affect emotions and behaviours, in both real and virtual environments. Her Enterprise Fellowship started in March 2019 and aims to develop the ecosystem that enables everyone to design with smell. E-mail: E.Maggioni@sussex.ac.uk .

Marianna Obrist. Marianna Obrist is Professor of Multisensory Experiences and head of the Sussex Computer Human Interaction (SCHI ‘sky’) Lab at the School of Engineering and Informatics at the University of Sussex in the U.K. Her research ambition is to establish touch, taste, and smell as interaction modalities in Human-Computer Interaction (HCI). Before joining Sussex, Marianna was a Marie Curie Fellow at Newcastle University and, prior to this, an Assistant Professor at the University of Salzburg, Austria. More recently, Marianna was a visiting Associate Professor at Stanford University in summer 2017. She is an inaugural member of the ACM Future of Computing Academy, leading the co-creation working group with a special focus on the Future of Computing and Food. She was selected as a Young Scientist in 2017 and 2018 to attend the World Economic Forum in the People’s Republic of China. More details on her research can be found at: http://www.multisensory.info . E-mail: M.Obrist@sussex.ac.uk .