1 Introduction

Because the products of science profoundly impact the goods and services we purchase as consumers, the policies of our governments, and the Earth’s ecosystems, there is a growing recognition that scientists have a responsibility to engage with non-scientists. This engagement with broader society can take many forms, including providing scientific counsel on policy issues [Baron, 2010 ; Groffman et al., 2010 ; Grorud-Colvert et al., 2010 ], developing outreach programs for elementary and secondary school students [Friedman, 2008 ], partnering with science museums [Bell, 2008 ], and engaging in independent, online outreach (e.g. blogs, podcasts, and films).

But traditional disciplinary training does not prepare scientists for these types of communication tasks. Effective communication with non-scientists requires training and practice beyond traditional course work and discipline-specific seminars. The need for this training — for both students and established scientists — has been the subject of numerous commentaries by scientific leaders and educators over the last decade [Brownell, Price and Steinman, 2013 ; Bubela et al., 2009 ; Leshner, 2007 ; Warren et al., 2007 ].

Although a small number of programs and courses train students and scientists in these skills, formal assessments of the effectiveness of this training have not been widely reported. We present an assessment of the Engage program, a graduate-student-created and led training program at the University of Washington. Using a pre-course/post-course study design, we examined student ability to deliver a short presentation appropriate for a public audience. In addition, we conducted surveys of students and alumni to provide a broader picture of the effects of the program. The purpose of this work is to describe our approach to science communication training and to assess the impact of this training program on the communication skills of trainees.

1.1 Opportunities for communication training

A number of organizations and initiatives are working to address the need for training and support for public communication activities [Neeley et al., 2014 ].

Workshops sponsored by the American Association for the Advancement of Science [Basken, 2009 ] and the Alan Alda Center for Communicating Science [Bass, 2016 ; Weiss, 2011 ] respond to the need for short, targeted training to develop practical skills. The European Science Communication Network (ESConet) [Miller, Fahy and the ESConet Team, 2009 ] has developed a set of twelve teaching modules, covering both practical skill development and theoretical aspects of science communication.

Scientific outreach programs have also served as a mechanism for training scientists to communicate with public audiences. Examples include a collaboration between Washington University and the St. Louis Science Center [Webb et al., 2012 ] and a program at the University of Texas at Austin in which graduate students visit middle schools to talk about their research [Clark et al., 2016 ].

University courses provide a way for students to supplement their disciplinary coursework with communication training. Examples of educational goals in these courses include development of skills for presenting to both scientific and non-scientific audiences [Stuart, 2013 ], engaging in various forms of informal science education [Crone et al., 2011 ], and implementing outreach activities consistent with the “broader impacts” criteria of the National Science Foundation [Heath et al., 2014 ].

Other initiatives include competitions to deliver short explanations of research which are appropriate for non-scientist audiences [Shaikh-Lesko, 2014 ] and partnerships with non-profit organizations [Smith et al., 2013 ]. Despite these science communication training opportunities, many students do not have easy access to training because courses are not offered by their university or program of study, and must rely on a “do it yourself” approach to gaining communication skills [Kuehne et al., 2014 ].

1.2 Assessing the effectiveness of communication training

With calls from scientific leaders to better serve society and requests from students to receive training that will better prepare them for the job market [Blickley et al., 2012 ; Cannon, Dietz and Dietz, 1996 ; Hundey et al., 2016 ], engagement between scientists and non-scientist audiences is poised to grow. But to ensure that an increase in engagement activities produces more effective engagement, it must be accompanied by a research agenda to better understand the role of science communication in society and how to fulfill this role [Fischhoff and Scheufele, 2014 ], to assess the impact of this communication [Jensen, 2011 ], and to evaluate the effectiveness of training programs in teaching communication skills [Neeley et al., 2014 ].

Without this research, it may be difficult for universities and graduate programs to justify support for instruction in science communication. In addition, because advisers of graduate students may view communication training as an unnecessary distraction from students’ research projects and discipline-specific learning, it is important to demonstrate that communication training provides skills relevant to securing employment and pursuing research in highly competitive fields [Smith et al., 2013 ].

During our literature search we identified nine communication training programs (workshops, courses, and outreach programs) that described a method of evaluation. The five workshop programs are:

- A series of twelve workshops (1–4 hours each) covering both practical skill development and theoretical aspects of public communication, developed for early-career scientists [Miller, Fahy and the ESConet Team, 2009 ].

- Three 4-hour workshops, plus development of a hands-on activity for a two-day exhibit at a local science museum, for graduate students in neuroscience [Webb et al., 2012 ].

- A 5-day workshop for graduate students to build skills in communication and engagement. Students produced a video in which they were interviewed about what they do and the significance of their research [Holliman and Warren, 2017 ].

- Two-day training sessions for UK Royal Society Research Fellows to prepare them for performing educational outreach [Fogg-Rogers, Weitkamp and Wilkinson, 2015 ].

- Four 2-hour workshops for STEM graduate students, plus development and refinement of a three-minute oral presentation [Rodgers et al., 2018 ].

The three university semester-long courses are:

- A course for undergraduates in written and oral communication, with projects including design and implementation of a public outreach event [Yeoman, James and Bowater, 2011 ].

- A course for graduate students focusing on outreach activities, with development and implementation of an activity consistent with National Science Foundation “Broader impacts” criteria [Heath et al., 2014 ].

- A course for graduate students on communication in informal science settings, with development and implementation of an activity for an outreach event [Crone et al., 2011 ].

The one outreach program for graduate students centers on development of a presentation for middle school students (“Present your PhD thesis to a 12-year-old”) [Clark et al., 2016 ].

Most training programs included surveys of trainees as a method of evaluation. Typical questions asked trainees to rate their levels of competence in various communication skills and their comfort level in speaking to a public audience, with responses indicated using a Likert scale. Five used both pre-training and post-training surveys [Crone et al., 2011 ; Webb et al., 2012 ; Yeoman, James and Bowater, 2011 ; Fogg-Rogers, Weitkamp and Wilkinson, 2015 ; Rodgers et al., 2018 ]. Two programs collected feedback using short surveys of audience members or outreach participants [Clark et al., 2016 ; Webb et al., 2012 ]. The study by Holliman and Warren [ 2017 ] used interviews instead of surveys, with students interviewed both immediately after the training and more than 12 months later.

An alternate approach to understanding the components of effective communication courses is to study the experiences of participants from many different courses and workshops. Silva and Bultitude [ 2009 ] used surveys and interviews to study a sample of trainees and trainers from 47 different courses and workshops, and then developed a list of best practices.

Surveys of trainees are appropriate for gauging self-perceptions of competency in communication skills, attitudes toward public communication, and level of enthusiasm. Surveys of public participants in outreach programs capture sense of learning, attitudes toward science, and level of enjoyment. But neither directly measures the effect of training programs on trainees’ skills and knowledge. Recent work by Rodgers et al. [ 2018 ] takes a more rigorous approach to evaluation by using a triangulated framework that includes surveys of graduate student trainees, evaluations of faculty trainers, and evaluations of trainees’ pre-workshop and post-workshop videos by external reviewers who are not trained in science.

Two assessment instruments for measuring the effectiveness of scientists’ communication with public audiences have been described in the scholarly literature. The first focuses on written communication [Baram-Tsabari and Lewenstein, 2013 ] and the second is designed to assess oral communication [Sevian and Gonsalves, 2008 ]. To our knowledge, there are no reports of either assessment being applied in the context of communication training programs to evaluate changes in trainees’ communication skills.

1.3 The Engage program

The Engage program is a graduate-student-created and led program at the University of Washington for graduate students in scientific disciplines [Clarkson et al., 2014 ]. We are previous instructors (JH, JR) and students (MDC, WC) of this program. Engage was founded by four graduate students who organized a series of public presentations by graduate students in 2010. In the fall of 2011, a course was developed and led by two of the founding members. The curriculum has been continuously improved based on feedback from students and science communication educators and on inspiration from science communication conferences and workshops.

The training focuses on the development of oral presentation skills for communicating with non-scientists. Participants in the Engage program enroll in a graduate-level course that teaches skills for communicating with public audiences, and their training culminates in the delivery of a 20 to 30-minute public presentation at Town Hall Seattle (a community cultural center located in downtown Seattle). Students’ talks are promoted as the “UW Science Now” lecture series.

Course design.

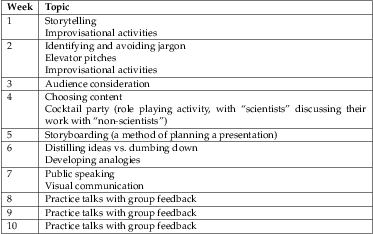

The 10-week course meets once a week for 3 hours per session (Table 1 ). It includes activities for building communication skills, discussions of techniques for communicating science, and development of students’ public presentations. Improvisation activities are also an important part of classroom activities because they help to develop a more comfortable and dynamic stage presence and a positive attitude toward unexpected events, such as difficult questions from audience members [Bernstein, 2014 ].

The course material was developed by the instructors of the first course and was strongly influenced by the book “Don’t be such a scientist” [Olson, 2009 ]. The schedule of course topics undergoes modification each year in response to ideas from instructors and feedback from students. The first seven weeks focus on different topics in communication, with guest speakers for weeks 2–7. During the last three weeks students practice their public presentations and receive feedback from classmates and instructors.

Students.

All interested students were accepted into the course the first three times it was offered. Beginning in 2014, an application process was established to limit class size. The instructors have found that a class size of 12–15 students best supports classroom discussions and provides each student time to receive feedback on the practice talk from peers and the instructor. Annual acceptance rates into the Engage program have been roughly 40%, while the completion rate has been about 95%.

Cohorts of students in the Engage program come from a mixture of disciplinary backgrounds. The philosophy behind this design is that having classmates with diverse backgrounds who use different research methodologies will help students to identify language and concepts that are common within their own discipline, but need to be translated or simplified for other audiences (i.e., jargon [Sharon and Baram-Tsabari, 2013 ]). Students of the Engage program have come from the College of the Environment, the College of Engineering, the College of Arts and Sciences, the School of Medicine, and the School of Public Health, among others. However, the College of the Environment has had the most representation (36 of 85 students). This is due to the high level of interest from this population, the large number of departments in this college, and the fact that the College of the Environment promotes science communication and has funded the teaching assistantship for course instructors since 2013.

Governance.

Engage is governed by a Board of Directors composed of Engage alumni. Each year a new instructor is selected from the previous student cohort. The previous year’s instructor serves as an adviser to the new instructor.

2 Assessment of speaking skills

To determine whether the Engage course had an effect on the quality of students’ oral presentation skills when communicating to non-scientists, we captured videos of short pre-course and post-course presentations. Each video was assessed by both the presenting student and three external reviewers. This methodology allowed us to (1) assess skill levels before and after the course and (2) compare self-assessment ratings with ratings by external reviewers.

2.1 Methods

The Human Subjects Division at the University of Washington determined that this study was exempt from review.

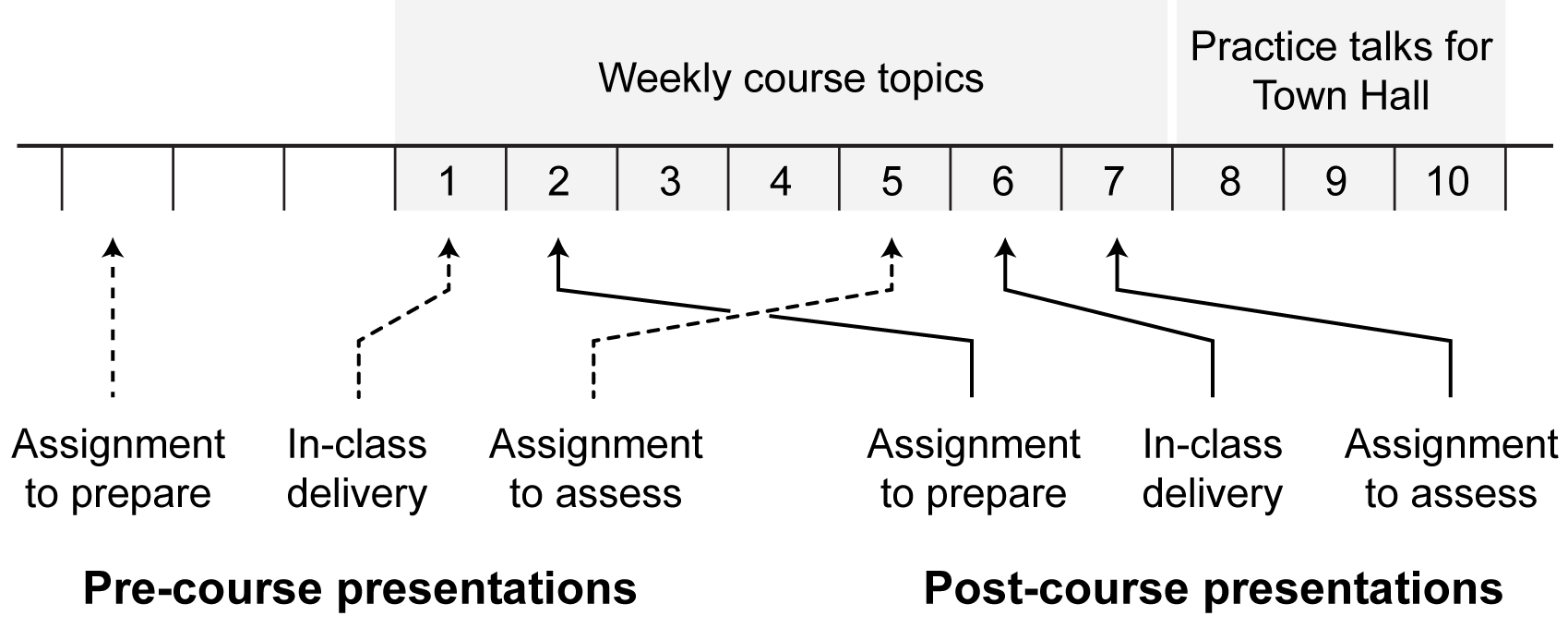

We assessed changes in the speaking skills of the 2015 cohort of Engage students via two short presentations intended for non-scientist audiences. Preparation, delivery and self-assessment of these presentations occurred during the course (Figure 1 ). The “pre-course” presentation was delivered on the first day of the course. We refer to the presentation delivered in the sixth week of the course as the “post-course” presentation because the students had received instruction in most of the course content by that week.

The assignment for the pre-course presentation was emailed to students two-and-a-half weeks before the course began. Students were asked to “prepare a 2-minute description of your research, such as you would give to President Obama if you had two minutes to tell him about your work.” These pre-course presentations were delivered in front of the class without visual aids. Video recordings were captured.

The post-course presentation was assigned during the second week, with the same instructions as for the pre-course presentation, and delivered during the sixth week of the course. Approximately eight members of the Engage Board of Directors attended the post-course presentations to emulate the audience of unfamiliar people that students experienced during the pre-course presentation. Fourteen students completed both the pre- and post-course presentations.

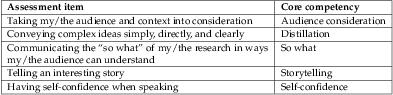

We designed the assessment of presentations in consultation with the Office of Educational Assessment at the University of Washington to reflect five core competencies of Engage training (Table 2 ). The assessment (provided in supplementary material S1) consisted of the prompt “How well did you do in the following areas:” (for the self-assessment), or “Rate the student’s success in:” (for the external assessment), followed by the five assessment items corresponding to core competencies. A four-step rating scale was used (needs a lot of work, needs a little work, pretty good as is, excellent as is).

For purposes of assessment, individual videos were posted to the video-sharing site Vimeo. Assessments were administered using Google Forms.

For self-assessments, each student was provided the URL to his or her pre-course video in the fifth week of the course and post-course video in the seventh week and asked to watch the video and complete the assessment.

After the Engage course was complete, 17 reviewers were recruited from the university and broader community. This pool of reviewers consisted of seven Engage founders or alumni, two professionals in fields related to science communication, two engineering undergraduates and two science graduate students with no training in science communication, and four people not affiliated with the university and with no training in science communication. Each external reviewer was assigned five videos using a stratified random approach (ensuring each reviewer viewed at least one pre-course and one post-course video). As a result, each video was assessed by three reviewers. Reviewers were blind to the pre-course or post-course status of the video, and no reviewer saw both a pre-course and post-course video from the same student. Reviewers were provided with a video review guide that included links to two example videos, one that had much room for improvement and one that was excellent. This review guide (excluding the video examples) is provided in supplementary material S2.
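The assignment constraints described above can be illustrated with a short sketch. This is not the procedure the authors used, which the text does not specify beyond "stratified random"; it is a minimal randomized greedy assignment with retries. It assumes 14 students with one pre-course and one post-course video each, 17 reviewers, three reviewers per video, and at most five videos per reviewer. The function name `assign_videos` and all of its parameters are hypothetical. Under these assumed numbers there are 84 review slots for a capacity of 85, so one reviewer ends up with four videos in this sketch.

```python
import random


def assign_videos(n_students=14, n_reviewers=17, per_video=3,
                  max_load=5, seed=0, max_attempts=1000):
    """Randomly assign reviewers to videos under the stated constraints.

    Hypothetical sketch: returns a dict mapping (student, phase) to a
    list of reviewer indices, where phase is "pre" or "post".
    """
    rng = random.Random(seed)
    videos = [(s, phase) for s in range(n_students)
              for phase in ("pre", "post")]

    for _ in range(max_attempts):
        load = [0] * n_reviewers                      # videos per reviewer
        seen = [set() for _ in range(n_reviewers)]    # students already seen
        phases = [set() for _ in range(n_reviewers)]  # phases already seen
        assignment = {}
        order = videos[:]
        rng.shuffle(order)
        feasible = True

        for student, phase in order:
            # A reviewer is eligible if they have spare capacity and have
            # not already seen a video from this student.
            eligible = [r for r in range(n_reviewers)
                        if load[r] < max_load and student not in seen[r]]
            if len(eligible) < per_video:
                feasible = False  # dead end; restart with a new shuffle
                break
            # Prefer the least-loaded reviewers, breaking ties at random
            # (the sort is stable, so the shuffled order decides ties).
            rng.shuffle(eligible)
            eligible.sort(key=lambda r: load[r])
            chosen = eligible[:per_video]
            for r in chosen:
                load[r] += 1
                seen[r].add(student)
                phases[r].add(phase)
            assignment[(student, phase)] = chosen

        # Accept only if every reviewer saw both a pre and a post video.
        if feasible and all(p == {"pre", "post"} for p in phases):
            return assignment

    raise RuntimeError("no valid assignment found; try another seed")


if __name__ == "__main__":
    result = assign_videos(seed=1)
    print(result[(0, "pre")])  # reviewers assigned to student 0's pre video
```

Blinding is a matter of how the videos are presented to reviewers rather than of the assignment itself, so it is not modeled here.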

2.2 Results

Self-assessments.

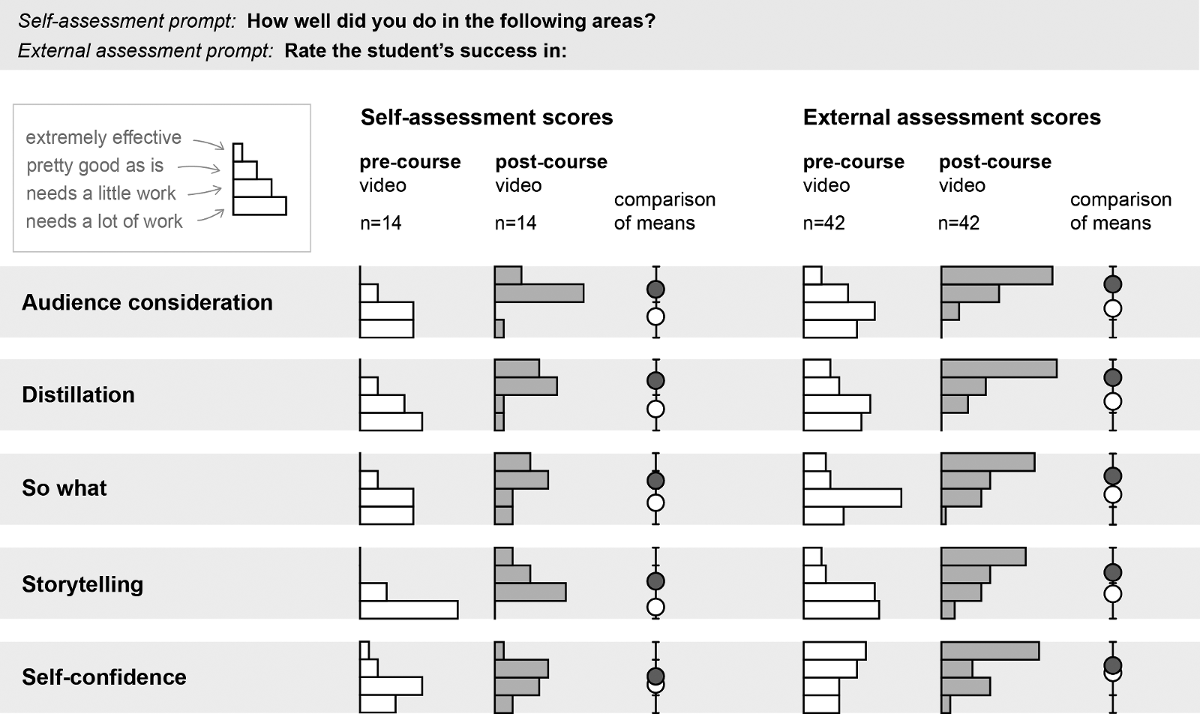

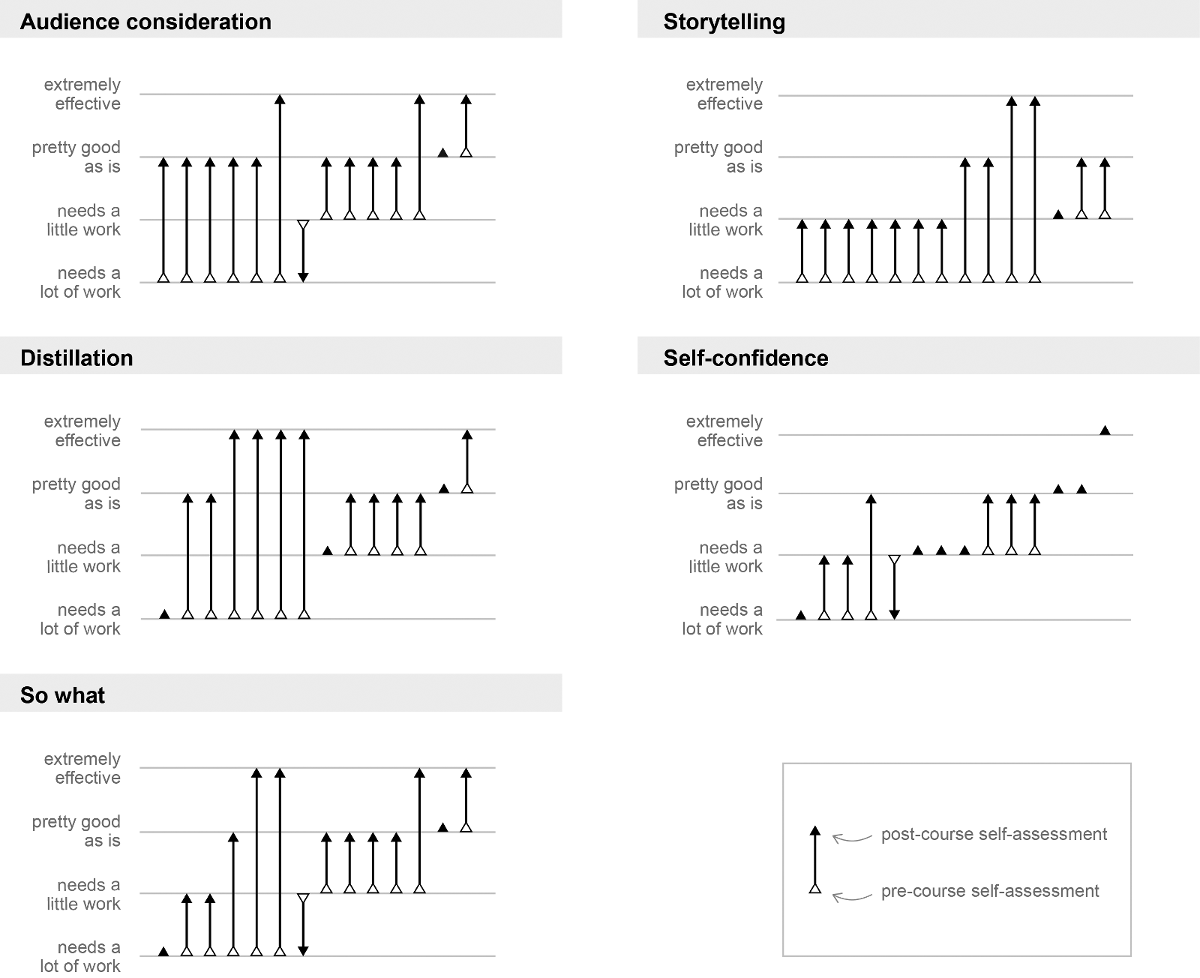

Across the five competencies, students overwhelmingly scored their post-course video as more effective than their pre-course video. For all competencies except self-confidence, mean scores for post-course videos were higher than those of the pre-course videos by at least a full step on the four-step rating scale (Figure 2 ). Self-confidence also increased, but by less than a full step. As shown in Figure 3 , out of 70 paired responses (14 students × 5 questions) post-course videos were rated lower in only three cases. Scores remained the same in 14 cases, dominated by assessments of self-confidence.

Figure 2: Scores from assessment of videos. Frequency plots of scores from self-assessments and external assessments are shown for each assessment item. The four-step rating scale is shown in the upper left corner. To aid in visually comparing pre-course and post-course data, the means are plotted on a four-step rating scale corresponding to the axis of the frequency plots.

Figure 3: Self-assessments for pre-course and post-course videos. Paired data for each student’s pre-course and post-course videos are shown for each of the five assessment items. A single arrowhead indicates that both videos were given the same rating.
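The paired comparison summarized above can be tallied as in the following sketch. It assumes the four rating labels are coded 1 to 4 in order; the source does not state a numeric coding, so `SCORE`, `tally_paired_changes`, and `mean_shift` are hypothetical names, and the example ratings are invented for illustration rather than taken from the study data.

```python
from collections import Counter

# The four-step rating scale from the assessment, lowest to highest.
SCALE = [
    "needs a lot of work",
    "needs a little work",
    "pretty good as is",
    "excellent as is",
]
# Assumed numeric coding (1-4); not stated in the source.
SCORE = {label: i + 1 for i, label in enumerate(SCALE)}


def tally_paired_changes(pairs):
    """Count how many (pre, post) rating pairs went up, down, or stayed."""
    counts = Counter()
    for pre, post in pairs:
        diff = SCORE[post] - SCORE[pre]
        if diff > 0:
            counts["higher"] += 1
        elif diff < 0:
            counts["lower"] += 1
        else:
            counts["same"] += 1
    return counts


def mean_shift(pairs):
    """Mean post-minus-pre change, in steps of the rating scale."""
    pairs = list(pairs)
    return sum(SCORE[post] - SCORE[pre] for pre, post in pairs) / len(pairs)


# Invented example ratings for one assessment item (not study data).
example = [
    ("needs a lot of work", "pretty good as is"),
    ("needs a little work", "needs a little work"),
    ("pretty good as is", "needs a little work"),
    ("needs a little work", "excellent as is"),
]

if __name__ == "__main__":
    print(tally_paired_changes(example))
    print(mean_shift(example))
```

Applied to the counts reported above, 70 paired responses with 3 rated lower and 14 unchanged leave 53 pairs in which the post-course video was rated higher.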

External assessments.

Consistent with the results from the self-assessment, external reviewers saw the least amount of improvement in ability to project self-confidence. For the other four competencies, mean scores for post-course videos were higher than those of the pre-course videos by at least a full step on the four-step rating scale (Figure 2 ). See supplementary material S5 for a statistical analysis providing evidence that for each metric except self-confidence, the training influenced the likelihood that a student’s post-course video was scored higher than his or her pre-course video.

Comparison of self and external assessments.

Students may be biased (e.g. overly self-critical) in assessing their own communication skills. The design of this study allowed us to compare self-assessment scores with predicted scores from a model of reviewer assessment. Supplementary material S5 provides this analysis, showing that students tended to be more critical than external reviewers of their post-course videos when rating audience consideration, distillation, and storytelling. They also rated themselves as less successful in projecting self-confidence in their pre-course video than the external reviewers did.

3 Surveys of students and alumni

We used pre-course and post-course surveys to investigate whether the Engage course had an effect on students’ knowledge about effectively communicating with the public, self-perceived competence in communication skills, and self-confidence in public speaking. To help determine whether the training had an effect beyond the duration of the course, alumni were asked to participate in a similar survey. Additional questions inquired about the effect of the training on their participation in communication and professional activities.

3.1 Method

Surveys were developed in consultation with the Office of Educational Assessment at the University of Washington. Drafts of survey questions were tested with Engage alumni who helped with the design of this study and were revised as needed. Surveys were administered using Google Forms. All survey questions and instructions are included in supplementary material S3. Data is provided in supplementary material S4.

Students from the 2015 cohort received an email two-and-a-half weeks before the course began that asked them to complete the anonymous pre-course survey. Nine weeks after the conclusion of the course students were asked to complete a post-course survey. Fifteen students completed the pre-course survey and fourteen completed the post-course survey. The method used to administer the anonymous surveys did not allow us to pair students’ responses for pre-course and post-course responses, so we present a summary of the data that does not make use of paired analysis.

Engage alumni of the 2010–2014 cohorts were contacted at their last known email address in January 2015 and asked to complete an anonymous survey. A reminder email was sent three weeks later. Alumni were excluded if they helped in the design of this study. Of the 50 alumni contacted, 30 completed the survey (a 60% response rate).

3.2 Survey topics

Topics of the survey questions included:

- Beliefs about effective techniques for public communication: students and alumni were asked to indicate how important they believe various techniques are for communicating with public audiences. The techniques included both those emphasized in the Engage course (audience consideration, distillation, explaining the “so what”, and storytelling), and three competencies relevant to communication with other scientists (but not public audiences). We distinguish these two types of competencies as “public-communication competencies” and “scientist-communication competencies”. The scientist-communication competencies were included in our survey questions to help determine whether changes between pre-course and post-course surveys were due to Engage training or other causes.

- Self-perception of competency in communication skills: students and alumni were asked to rate their level of skill for the five public-communication competencies emphasized in the Engage course (audience consideration, distillation, explaining the “so what”, storytelling, self-confidence), plus the three scientist-communication competencies.

- Self-confidence: students were asked the extent to which they agree or disagree with the statement “I am confident in my ability to present material to a public audience”.

- Alumni reflection on areas of learning: alumni were asked to reflect on how much the Engage program advanced their learning for the five public-communication competencies and the three scientist-communication competencies.

- Alumni reflection on the effect of Engage on communication and professional activities: alumni were asked “to what extent, if at all, did your participation in the Engage program make it easier for you to seek out or engage in” each of five types of professional activities and employment opportunities. Alumni were also asked if they felt their participation in these activities and interest from employers had increased, stayed the same, or decreased as a result of participation in Engage.

3.3 Results of student surveys

Beliefs about effective techniques for public communication.

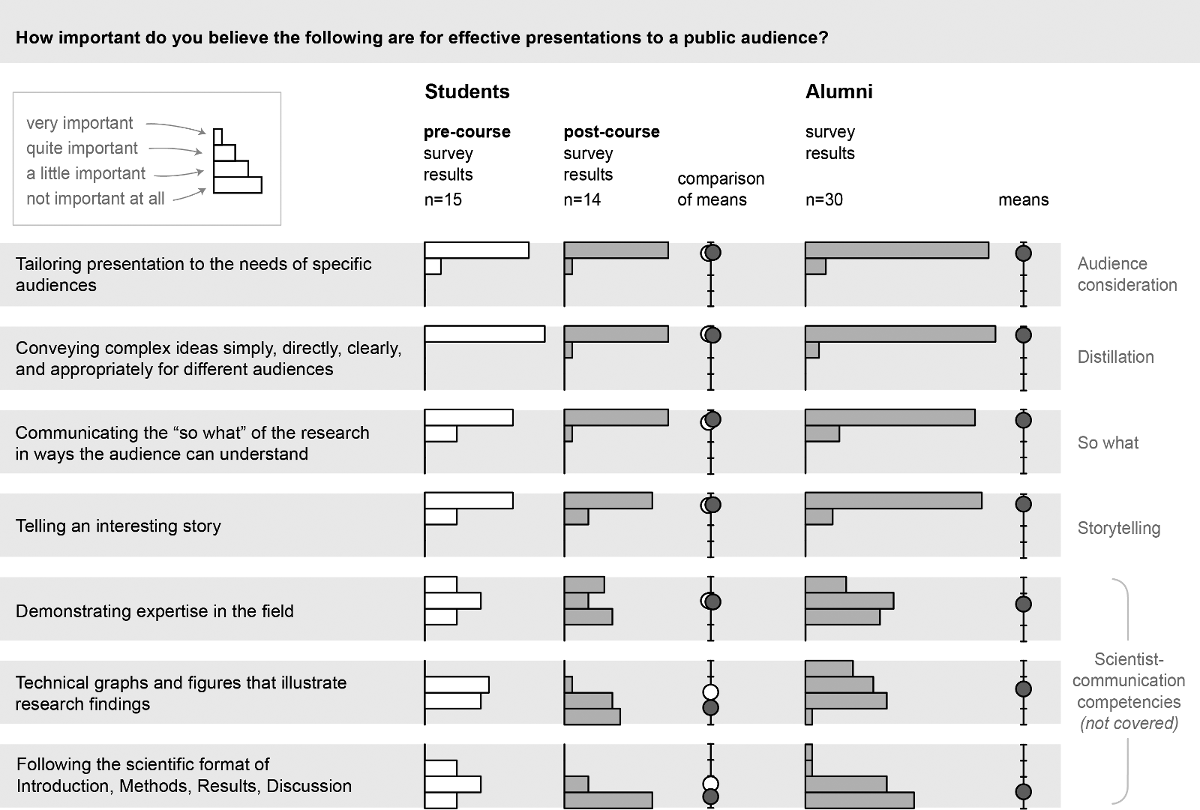

In both the pre-course and post-course surveys, students were able to distinguish between communication strategies appropriate for public audiences and those for scientific audiences (Figure 4 ). This indicates that the students in the cohort we studied had some preexisting knowledge of the differences in communicating with public and scientific audiences. The only substantial changes between pre-course and post-course results were for two scientist-communication competencies, which students tended to rate as less important on the post-course survey than the pre-course survey.

Figure 4: Beliefs about effective techniques for public communication. This group of assessment items was answered in response to the prompt “How important do you believe the following are for effective presentations to a public audience:”. The four-step rating scale is shown in the upper left corner. Results are displayed as frequency plots. Mean values are plotted on a four-step rating scale corresponding to the axis of the frequency plots. The right margin identifies four public-communication competencies of Engage and the set of scientist-communication competencies.

Self-perceptions of competency in communication skills.

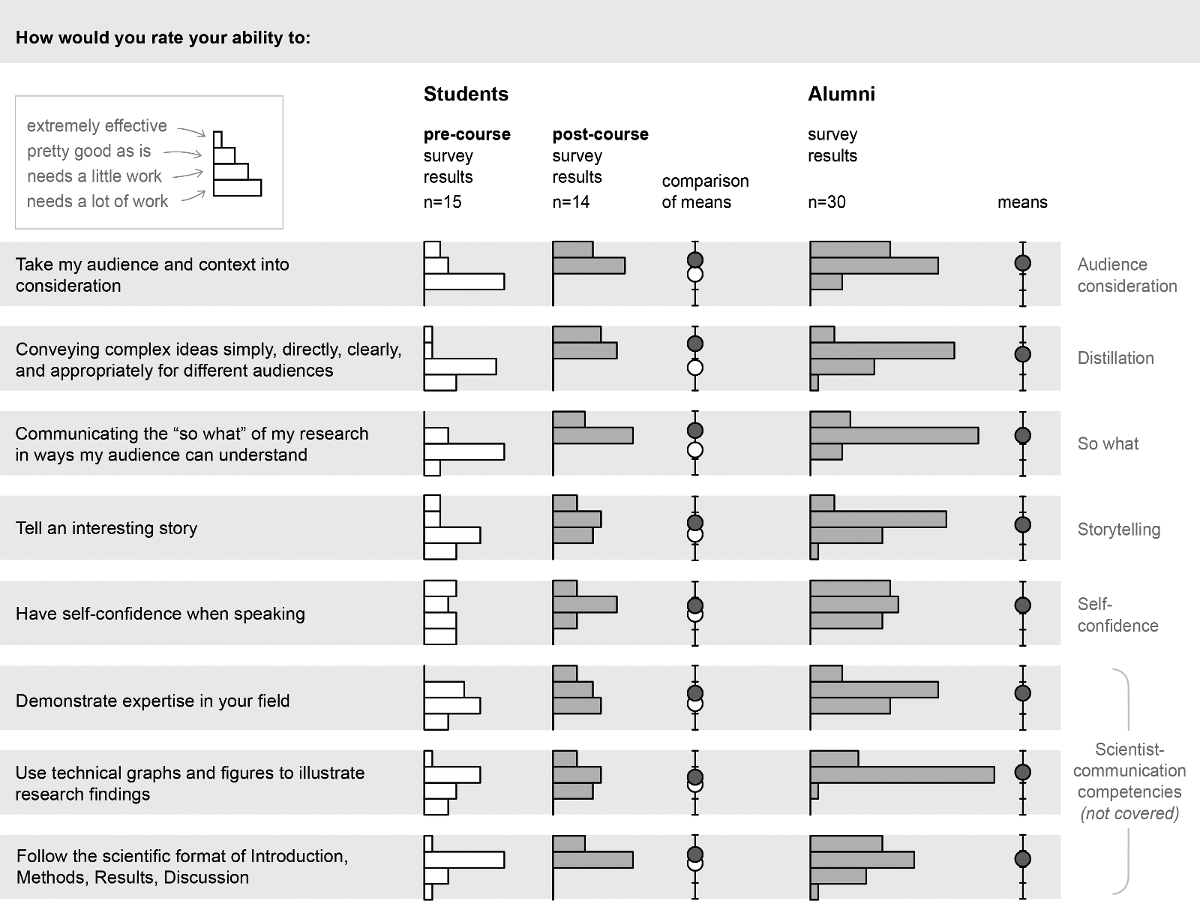

Students rated themselves at a higher skill level for each of the communication competencies on the post-course survey than on the pre-course survey (Figure 5 ). For two of the public-communication competencies (distillation and explaining the “so what”), the change exceeded a full step on the four-step rating scale. The smallest changes occurred in ratings for self-confidence and the three scientist-communication competencies.

Figure 5: Self-perception of communication skills. This group of assessment items was answered in response to the prompt “How would you rate your ability to:”. The four-step rating scale is shown in the upper left corner. Results are displayed as frequency plots. Mean values are plotted on a four-step rating scale corresponding to the axis of the frequency plots. The right margin shows the five public-communication competencies of Engage and the set of scientist-communication competencies.

Self-confidence.

On the pre-course survey, 11 of the 15 students indicated they “somewhat agree” with the statement that they are confident in their ability to present material to a public audience. Responses shifted on the post-course survey, with equal numbers of students choosing “somewhat agree” and “strongly agree”.

3.4 Results of alumni surveys

Beliefs about effective techniques for public communication.

Alumni were able to distinguish between communication strategies appropriate for public audiences and those appropriate for scientific audiences (Figure 4 ). Similar to the students, they tended to rate the public-communication competencies as “very important”. Alumni views on the importance of demonstrating expertise and using technical graphs and figures when communicating with the public were mixed. The strategy of following the format of Introduction, Methods, Results, and Discussion was rated as least effective.

Self-perceptions of competency in communication skills.

For each of the scientist-communication and public-communication competencies, the distribution of alumni responses centered around the “pretty good as is” rating (Figure 5 ). The confidence ratings provided by alumni were similar to, but did not exceed, the confidence ratings by students in the post-course survey.

Reflection on areas of learning.

Alumni tended to rate their amount of learning on public-communication competencies as “quite a bit” and “very much”. For the competency of self-confidence, responses were nearly evenly distributed among “a little”, “quite a bit”, and “very much”. The lowest ratings were given to the scientist-communication competencies, which were not emphasized in the training.

Reflection on the effect of Engage on communication and professional activities.

Alumni reported that involvement in Engage had the largest effect on helping them to find opportunities for public speaking and public outreach activities and had a moderate effect on participation in interdisciplinary collaborations and finding employment opportunities. They generally reported little or no effect on opportunities for authorship of scientific publications or writing for other venues.

Alumni tended to report an increase in public speaking and public outreach activities due to Engage training, while most reported no change for the other items. Reports of decreases were rare, with only three recorded across 180 responses (6 activities × 30 alumni respondents).

4 Discussion

4.1 Assessment of presentation skills

External reviews of pre-course and post-course videos demonstrated that the Engage course had positive influences on the quality of student presentations (supplementary material, Table S5-1). This provides evidence that this program is effective in improving students’ oral communication skills. The video presentations were captured at the beginning of the first and sixth class sessions of the 10-week course, so this effect was achieved with only a moderate amount of instructional time and out-of-class work.

Both the evaluation by Rodgers et al. [ 2018 ] and this study use external reviewers to rate the effectiveness of communication documented in pre-training and post-training videos. We note one difference in our methodologies that future researchers should consider. Our reviewers saw only one video from a trainee (the pre-course or post-course). By preventing reviewers from comparing videos we minimize the chance that reviewers will guess whether a video was captured before or after training, which could bias the ratings the reviewers assign.

This study revealed an intriguing discrepancy between external reviews and self-assessments, suggesting that self-assessments of speaking skills may not be sufficient for program evaluation. While students in the study cohort did perceive improvements in their communication skills (audience consideration, distillation, explaining the “so what”, and storytelling) (Figure 4 ), external reviewers saw greater improvement than the students for three of these four skills (supplementary material, Figure S5-1). One explanation for this discrepancy is that as students learn more about methods of effective communication, they become more critical of their performance.

In addition to showing the importance of external assessments, this work contributes to the body of research on student self-assessments [Brown, Andrade and Chen, 2015 ]. Interestingly, our results stand in contrast to recent studies of self-assessments of oral presentations in undergraduate psychology [Grez, Valcke and Roozen, 2012 ] and labor organization [Bolívar-Cruz, Verano-Tacoronte and González-Betancor, 2015 ] courses, which showed that the students tended to assess their presentation skills at a higher level than instructors and peers.

4.2 Self-confidence

For science communication training programs to be effective, programs must be designed to foster self-confidence in addition to teaching communication skills. Without sufficient self-confidence, participants may not fully capitalize on future opportunities to utilize and advance their communication skills.

Our study shows that cultivating self-confidence can be more difficult for students than learning communication techniques such as storytelling. For many people — students, professional scientists, and those in other careers — speaking in front of an audience is an experience characterized by fear, anxiety, and self-doubt [Daly, Vangelisti and Lawrence, 1989 ; Bader, 2016 ]. Neither the self-assessments nor external reviews found a substantial change in students’ ability to project self-confidence as a result of Engage training. However, the analysis presented in supplementary material S5 suggests that external reviewers perceived greater self-confidence in pre-course presentations than students reported in their self-assessments.

This suggestion that students may be able to project self-confidence without feeling particularly self-confident aligns with the work by Lundquist et al. [ 2013 ], who studied communication skills in the context of a course that prepared pharmacy students to speak with other healthcare providers. In that study, pharmacy students were given time to prepare to discuss a patient case and then role-played with a faculty member who asked clinically relevant questions. Afterward, both the student and the faculty member completed a brief rubric to assess the student’s communication skills.

4.3 Issues to consider in the design of science communication training programs

- Characteristics of the learning environment.

The structure of the course we evaluated is quite different from the lecture-focused and laboratory-based courses common in science curricula. Students bring their own research interests and projects into the course as the starting point from which they build their identities as science communicators. Classroom discussions and feedback from peers provide an environment that helps students make links between their discipline-specific knowledge, information of interest to non-scientist audiences, and ways to communicate that information effectively.

- Providing opportunities for authentic communication experiences.

The structure of Engage training pairs a 10-week course with a public presentation at Town Hall Seattle. This structure intentionally combines classroom learning with practice of the acquired science communication skills in a real-world setting with a public audience.

Instructors also make students aware of opportunities to participate in science communication activities outside Engage. Our survey results indicate that alumni felt that Engage training helped them to find opportunities in public speaking and public outreach. This suggests that training programs may have a role in connecting trainees with community organizations interested in learning about science topics.

5 Limitations and future work

Limitations of this study include the small number of students per cohort, the restriction of our speaking-skills assessments to a single cohort, and the fact that we did not investigate whether the program produces long-term effects on communication skills.

This study evaluates a graduate-level communication course that students elected to take in addition to their required coursework. Further studies could expand this training and evaluation to additional student populations in two ways: (1) exploring modifications to make the training appropriate for an undergraduate population, and (2) determining whether a similar curriculum is effective when delivered as a required course.

The methods of assessment we used were designed to reflect the core competencies of Engage, but have not been validated by other studies. The rubric by Sevian and Gonsalves [ 2008 ] has been designed for assessing the effectiveness of scientific explanations within oral presentations, and that of Schreiber, Paul and Shibley [ 2012 ] is for general public speaking. Several of the items in the rubric by Evia et al. [ 2017 ] for self-assessment of competency in engaging with the public are also appropriate. Rubrics such as these should be considered for use in future studies.

6 Conclusion

We have shown that the Engage course has a positive effect on trainees’ skills in communicating with public audiences, as demonstrated through external assessments, self-assessments, and survey data. This result is notable because this training does not rely on instructors with extensive education in science communication. Instead, these results were achieved in a course taught by previous trainees, suggesting that a lack of faculty who specialize in science communication is not a barrier to establishing a training program in science communication. In addition, we demonstrate that these methods can be successfully integrated into the timeline of a 10-week university course.

7 Acknowledgements

We thank Sharon Greenblum, Michelle Weirathmueller, and Natalie Jones for their help in planning this study. We also acknowledge the efforts of the 2015 Engage cohort, Engage alumni, and external reviewers in making this study possible. We thank Catharine Beyer of the University of Washington Office of Educational Assessment for her help with designing the assessments. We thank Julia Parrish and the College of the Environment at the University of Washington for support of the Engage program. Engage would not be possible without the dedicated efforts of the founders (Rachel Mitchell, Eric Hilton, Phil Rosenfield, and Cliff Johnson). We especially thank the instructors for the first two cohorts of students (R. Mitchell, E. Hilton, and Tyler Robinson). We are also grateful to the nearly 80 individuals who made financial contributions to our crowdfunding campaign, as well as the staff of the Experiment.com crowdfunding platform. We thank Lauren Kuehne, Jennifer Davison, and Suguru Ishizaki for critical feedback on the manuscript draft.

References

-

Bader, C. D. (May 2016). The GfK Group Project Report for the National Survey of Fear — Wave 3 . URL: http://www.chapman.edu/wilkinson/research-centers/babbie-center/_files/codebook-wave-3-draft.pdf .

-

Baram-Tsabari, A. and Lewenstein, B. V. (2013). ‘An Instrument for Assessing Scientists’ Written Skills in Public Communication of Science’. Science Communication 35 (1), pp. 56–85. https://doi.org/10.1177/1075547012440634 .

-

Baron, N. (2010). ‘Stand up for science’. Nature 468 (7327), pp. 1032–1033. https://doi.org/10.1038/4681032a .

-

Basken, P. (28th May 2009). ‘Often distant from policy making, scientists try to find a public voice’. The Chronicle of Higher Education . URL: http://chronicle.com/article/Often-Distant-From-Policy/44410 .

-

Bass, E. (2016). ‘The Importance of Bringing Science and Medicine to Lay Audiences’. Circulation 133 (23), pp. 2334–2337. https://doi.org/10.1161/circulationaha.116.023297 .

-

Bell, L. (2008). ‘Engaging the Public in Technology Policy’. Science Communication 29 (3), pp. 386–398. https://doi.org/10.1177/1075547007311971 .

-

Bernstein, R. (2014). ‘Communication: Spontaneous scientists’. Nature 505 (7481), pp. 121–123. https://doi.org/10.1038/nj7481-121a .

-

Blickley, J. L., Deiner, K., Garbach, K., Lacher, I., Meek, M. H., Porensky, L. M., Wilkerson, M. L., Winford, E. M. and Schwartz, M. W. (2012). ‘Graduate Student’s Guide to Necessary Skills for Nonacademic Conservation Careers’. Conservation Biology 27 (1), pp. 24–34. https://doi.org/10.1111/j.1523-1739.2012.01956.x .

-

Bolívar-Cruz, A., Verano-Tacoronte, D. and González-Betancor, S. M. (2015). ‘Is University Students’ Self-Assessment Accurate?’ In: Sustainable Learning in Higher Education . Ed. by M. Peris-Ortiz and J. M. M. Lindahl, pp. 21–35. https://doi.org/10.1007/978-3-319-10804-9_2 .

-

Brown, G. T. L., Andrade, H. L. and Chen, F. (2015). ‘Accuracy in student self-assessment: directions and cautions for research’. Assessment in Education: Principles, Policy & Practice 22 (4), pp. 444–457. https://doi.org/10.1080/0969594x.2014.996523 .

-

Brownell, S. E., Price, J. V. and Steinman, L. (2013). ‘Science communication to the general public: why we need to teach undergraduate and graduate students this skill as part of their formal scientific training’. The Journal of Undergraduate Neuroscience Education 12 (1), E6–E10.

-

Bubela, T., Nisbet, M. C., Borchelt, R., Brunger, F., Critchley, C., Einsiedel, E., Geller, G., Gupta, A., Hampel, J., Hyde-Lay, R., Jandciu, E. W., Jones, S. A., Kolopack, P., Lane, S., Lougheed, T., Nerlich, B., Ogbogu, U., O’Riordan, K., Ouellette, C., Spear, M., Strauss, S., Thavaratnam, T., Willemse, L. and Caulfield, T. (2009). ‘Science communication reconsidered’. Nature Biotechnology 27 (6), pp. 514–518. https://doi.org/10.1038/nbt0609-514 .

-

Cannon, J. R., Dietz, J. M. and Dietz, L. A. (1996). ‘Training Conservation Biologists in Human Interaction Skills’. Conservation Biology 10 (4), pp. 1277–1282. https://doi.org/10.1046/j.1523-1739.1996.10041277.x .

-

Clark, G., Russell, J., Enyeart, P., Gracia, B., Wessel, A., Jarmoskaite, I., Polioudakis, D., Stuart, Y., Gonzalez, T., MacKrell, A., Rodenbusch, S., Stovall, G. M., Beckham, J. T., Montgomery, M., Tasneem, T., Jones, J., Simmons, S. and Roux, S. (2016). ‘Science Educational Outreach Programs That Benefit Students and Scientists’. PLOS Biology 14 (2), e1002368. https://doi.org/10.1371/journal.pbio.1002368 .

-

Clarkson, M. D., Footen, N. R., Gambs, M. F., Gonzalez, I. F., Houghton, J. and Smith, M. L. (2014). ‘Extended abstract: Engage, a model of student-led graduate training in communication for STEM disciplines’. In: 2014 IEEE International Professional Communication Conference (IPCC), Pittsburgh, PA, U.S.A. Pp. 1–2. https://doi.org/10.1109/ipcc.2014.7111143 .

-

Crone, W. C., Dunwoody, S. L., Rediske, R. K., Ackerman, S. A., Petersen, G. M. Z. and Yaros, R. A. (2011). ‘Informal Science Education: A Practicum for Graduate Students’. Innovative Higher Education 36 (5), pp. 291–304. https://doi.org/10.1007/s10755-011-9176-x .

-

Daly, J. A., Vangelisti, A. L. and Lawrence, S. G. (1989). ‘Self-focused attention and public speaking anxiety’. Personality and Individual Differences 10 (8), pp. 903–913. https://doi.org/10.1016/0191-8869(89)90025-1 .

-

Evia, J. R., Peterman, K., Cloyd, E. and Besley, J. (2017). ‘Validating a scale that measures scientists’ self-efficacy for public engagement with science’. International Journal of Science Education, Part B 8 (1), pp. 40–52. https://doi.org/10.1080/21548455.2017.1377852 .

-

Fischhoff, B. and Scheufele, D. A. (2014). ‘The Science of Science Communication II’. Proceedings of the National Academy of Sciences 111 (Supplement_4), pp. 13583–13584. https://doi.org/10.1073/pnas.1414635111 .

-

Fogg-Rogers, L. A., Weitkamp, E. and Wilkinson, C. (April 2015). Royal Society education outreach training course evaluation. Project report. Royal Society. URL: http://eprints.uwe.ac.uk/25834/ .

-

Friedman, D. P. (2008). ‘Public Outreach: A Scientific Imperative’. Journal of Neuroscience 28 (46), pp. 11743–11745. https://doi.org/10.1523/jneurosci.0005-08.2008 .

-

Grez, L. D., Valcke, M. and Roozen, I. (2012). ‘How effective are self- and peer assessment of oral presentation skills compared with teachers’ assessments?’ Active Learning in Higher Education 13 (2), pp. 129–142. https://doi.org/10.1177/1469787412441284 .

-

Groffman, P. M., Stylinski, C., Nisbet, M. C., Duarte, C. M., Jordan, R., Burgin, A., Previtali, M. A. and Coloso, J. (2010). ‘Restarting the conversation: challenges at the interface between ecology and society’. Frontiers in Ecology and the Environment 8 (6), pp. 284–291. https://doi.org/10.1890/090160 .

-

Grorud-Colvert, K., Lester, S. E., Airame, S., Neeley, E. and Gaines, S. D. (2010). ‘Communicating marine reserve science to diverse audiences’. Proceedings of the National Academy of Sciences 107 (43), pp. 18306–18311. https://doi.org/10.1073/pnas.0914292107 .

-

Heath, K. D., Bagley, E., Berkey, A. J. M., Birlenbach, D. M., Carr-Markell, M. K., Crawford, J. W., Duennes, M. A., Han, J. O., Haus, M. J., Hellert, S. M., Holmes, C. J., Mommer, B. C., Ossler, J., Peery, R., Powers, L., Scholes, D. R., Silliman, C. A., Stein, L. R. and Wesseln, C. J. (2014). ‘Amplify the Signal: Graduate Training in Broader Impacts of Scientific Research’. BioScience 64 (6), pp. 517–523. https://doi.org/10.1093/biosci/biu051 .

-

Holliman, R. and Warren, C. J. (2017). ‘Supporting future scholars of engaged research’. Research for All 1 (1), pp. 168–184. https://doi.org/10.18546/rfa.01.1.14 .

-

Hundey, E. J., Olker, J. H., Carreira, C., Daigle, R. M., Elgin, A. K., Finiguerra, M., Gownaris, N. J., Hayes, N., Heffner, L., Razavi, N. R., Shirey, P. D., Tolar, B. B. and Wood-Charlson, E. M. (2016). ‘A Shifting Tide: Recommendations for Incorporating Science Communication into Graduate Training’. Limnology and Oceanography Bulletin 25 (4), pp. 109–116. https://doi.org/10.1002/lob.10151 .

-

Jensen, E. (2011). ‘Evaluate impact of communication’. Nature 469, p. 162.

-

Kuehne, L. M., Twardochleb, L. A., Fritschie, K. J., Mims, M. C., Lawrence, D. J., Gibson, P. P., Stewart-Koster, B. and Olden, J. D. (2014). ‘Practical Science Communication Strategies for Graduate Students’. Conservation Biology 28 (5), pp. 1225–1235. https://doi.org/10.1111/cobi.12305 .

-

Leshner, A. I. (2007). ‘Outreach Training Needed’. Science 315 (5809), pp. 161–161. https://doi.org/10.1126/science.1138712 .

-

Lundquist, L. M., Shogbon, A. O., Momary, K. M. and Rogers, H. K. (2013). ‘A Comparison of Students’ Self-Assessments With Faculty Evaluations of Their Communication Skills’. American Journal of Pharmaceutical Education 77 (4), p. 72. https://doi.org/10.5688/ajpe77472 .

-

Miller, S., Fahy, D. and the ESConet Team (2009). ‘Can Science Communication Workshops Train Scientists for Reflexive Public Engagement?’ Science Communication 31 (1), pp. 116–126. https://doi.org/10.1177/1075547009339048 .

-

Neeley, L., Goldman, E., Smith, B., Baron, N. and Sunu, S. (2014). GRADSCICOMM: Report and recommendations . URL: http://www.informalscience.org/sites/default/files/GradSciComm_Roadmap_Final.compressed.pdf .

-

Olson, R. (2009). Don’t be such a scientist: talking substance in an age of style. Washington, DC, U.S.A.: Island Press.

-

Rodgers, S., Wang, Z., Maras, M. A., Burgoyne, S., Balakrishnan, B., Stemmle, J. and Schultz, J. C. (2018). ‘Decoding Science: Development and Evaluation of a Science Communication Training Program Using a Triangulated Framework’. Science Communication 40 (1), pp. 3–32. https://doi.org/10.1177/1075547017747285 .

-

Schreiber, L. M., Paul, G. D. and Shibley, L. R. (2012). ‘The Development and Test of the Public Speaking Competence Rubric’. Communication Education 61 (3), pp. 205–233. https://doi.org/10.1080/03634523.2012.670709 .

-

Sevian, H. and Gonsalves, L. (2008). ‘Analysing how Scientists Explain their Research: A rubric for measuring the effectiveness of scientific explanations’. International Journal of Science Education 30 (11), pp. 1441–1467. https://doi.org/10.1080/09500690802267579 .

-

Shaikh-Lesko, R. (1st August 2014). The Scientist Magazine . URL: http://www.the-scientist.com/?articles.view/articleNo/40572/title/Science-Speak/ .

-

Sharon, A. J. and Baram-Tsabari, A. (2013). ‘Measuring mumbo jumbo: A preliminary quantification of the use of jargon in science communication’. Public Understanding of Science 23 (5), pp. 528–546. https://doi.org/10.1177/0963662512469916 .

-

Silva, J. and Bultitude, K. (2009). ‘Best practice in communications training for public engagement with science, technology, engineering and mathematics’. JCOM 08 (02), A03. URL: https://jcom.sissa.it/archive/08/02/Jcom0802%282009%29A03 .

-

Smith, B., Baron, N., English, C., Galindo, H., Goldman, E., McLeod, K., Miner, M. and Neeley, E. (2013). ‘COMPASS: Navigating the Rules of Scientific Engagement’. PLoS Biology 11 (4), e1001552. https://doi.org/10.1371/journal.pbio.1001552 .

-

Stuart, A. E. (2013). ‘Engaging the audience: developing presentation skills in science students’. Journal of Undergraduate Neuroscience Education 12 (1), A4–A10.

-

Warren, D. R., Weiss, M. S., Wolfe, D. W., Friedlander, B. and Lewenstein, B. (2007). ‘Lessons from Science Communication Training’. Science 316 (5828), 1122b. https://doi.org/10.1126/science.316.5828.1122b .

-

Webb, A. B., Fetsch, C. R., Israel, E., Roman, C. M., Encarnación, C. H., Zacks, J. M., Thoroughman, K. A. and Herzog, E. D. (2012). ‘Training scientists in a science center improves science communication to the public’. Advances in Physiology Education 36 (1), pp. 72–76. https://doi.org/10.1152/advan.00088.2010 .

-

Weiss, P. S. (2011). ‘A Conversation with Alan Alda: Communicating Science’. ACS Nano 5 (8), pp. 6092–6095. https://doi.org/10.1021/nn202925m .

-

Yeoman, K. H., James, H. A. and Bowater, L. (2011). ‘Development and Evaluation of an Undergraduate Science Communication Module’. Bioscience Education 17 (1), pp. 1–16. https://doi.org/10.3108/beej.17.7 .

Authors

Melissa Clarkson is an information designer working at the intersection of informatics, design, and life sciences. The goal of her work is to make scientific information meaningful, engaging, and accessible. She is currently an Assistant Professor in the Division of Biomedical Informatics at the University of Kentucky. E-mail: mclarkson@uky.edu .

Juliana Houghton received her M.S. degree from the University of Washington’s School of Aquatic and Fishery Sciences in 2014, studying how boats contribute to noise experienced by endangered killer whales. She is currently working as a fish biologist in environmental compliance and endangered species coordination in Seattle. E-mail: houghtonjuliana@gmail.com .

William Chen is a graduate of the Quantitative Ecology and Resource Management program at the University of Washington in Seattle, Washington, USA. He is currently the Marketing Intern for the Seattle, Washington office of The Nature Conservancy (TNC), where he designs digital content to communicate the importance of the environment to society and the role of TNC in enacting environmental solutions. E-mail: wchen1642@gmail.com .

Jessica Rohde is Web Manager / Communication Officer at the Interagency Arctic Research Policy Committee, where she connects scientists across disciplines and sectors to accelerate the pace of Arctic research. E-mail: jessica.rohde.jro@gmail.com .